Methods

Participants

We tested 60 participants in our virtual scenario. Our sign-up materials described the experiment as “Decision Making In Virtual Environments.” All participants completed the scenario, but 4 data sets were discarded because of technical issues.

Participants were 18 to 39 years old (mean: 22.0 years, SD: 4.4 years). Self-reported ethnic backgrounds varied: Asian/Asian-American was most represented at 43%, followed by Caucasian at 32% and Chinese/Chinese-American at 10%. Indian, Taiwanese, “human,” and unreported made up the remaining 15%. Most participants were female (57%; male: 43%). All participants were students or recent graduates of Carnegie Mellon University.

Procedure

Participants were randomly assigned to one of three groups: lever, non-haptic, or haptic. Assignment was constrained so that the first 55 trials would contain 15 participants in the lever group and 20 in each of the non-haptic and haptic groups. Participants beyond the first 55 were also assigned at random.
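The constrained randomization above can be produced by shuffling a fixed block of group labels. The sketch below is an illustration only, not necessarily how assignment was performed in this study: it assumes the 15/20/20 quotas were shuffled as a single block and that later participants were assigned uniformly at random. `assignment_schedule` and `FIRST_BLOCK` are hypothetical names.

```python
import random
from collections import Counter

# Quotas for the first 55 trials, as stated in Procedure.
FIRST_BLOCK = {"lever": 15, "non-haptic": 20, "haptic": 20}
GROUPS = list(FIRST_BLOCK)


def assignment_schedule(n_participants, seed=None):
    """Return one group label per trial, in running order (hypothetical helper).

    The first 55 labels are a random permutation of the fixed quotas, so those
    trials contain exactly 15 / 20 / 20 participants. Trials after the first 55
    are assigned independently and uniformly at random (an assumption).
    """
    rng = random.Random(seed)
    block = [group for group, size in FIRST_BLOCK.items() for _ in range(size)]
    rng.shuffle(block)
    n_extra = max(0, n_participants - len(block))
    extra = [rng.choice(GROUPS) for _ in range(n_extra)]
    return (block + extra)[:n_participants]


if __name__ == "__main__":
    schedule = assignment_schedule(60, seed=1)
    print(Counter(schedule[:55]))  # quotas hold regardless of the seed
```

Shuffling a fixed block guarantees the target group sizes exactly, which simple coin-flip assignment would not.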

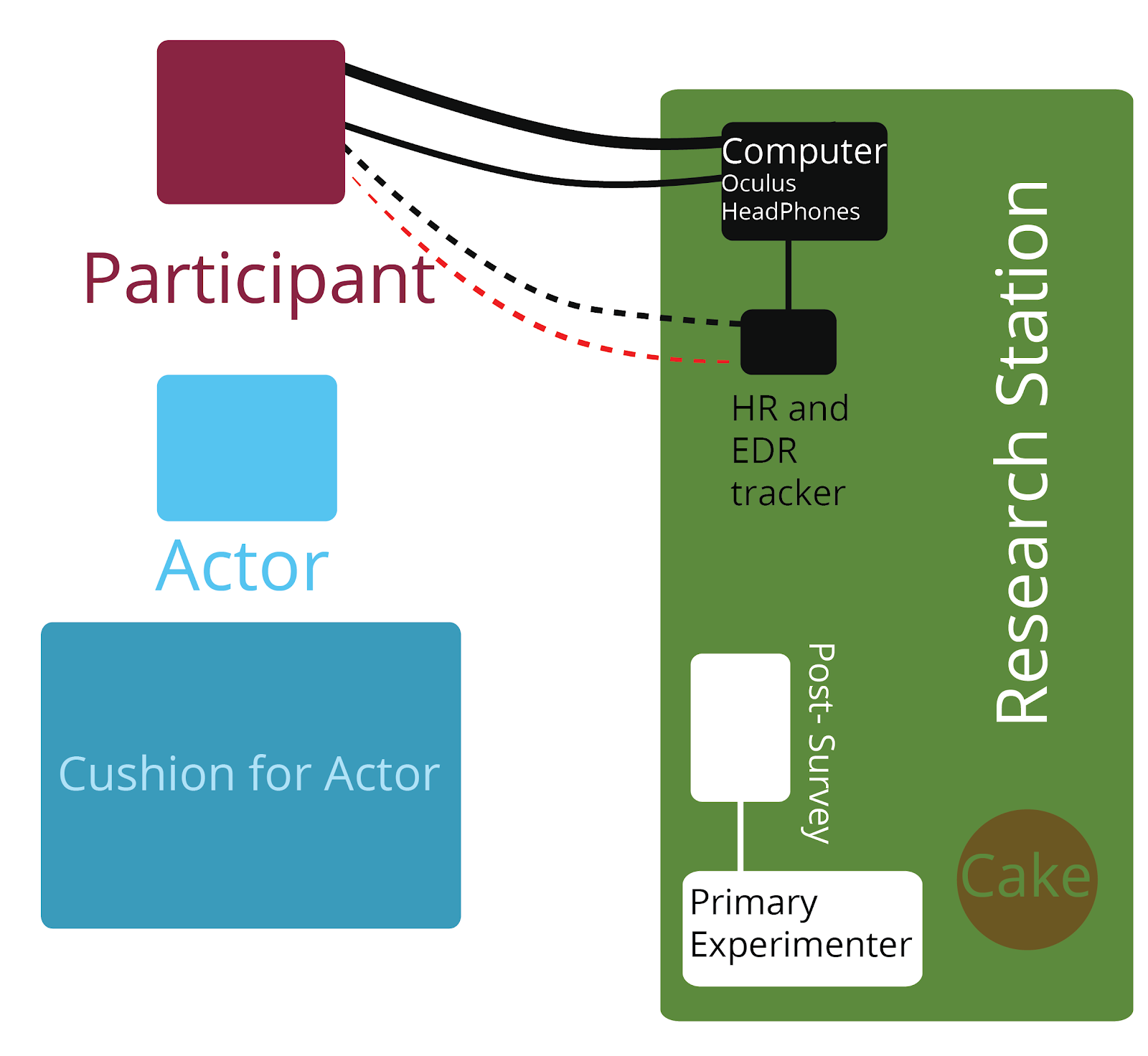

Each participant was placed in a virtual environment. The environments were built in Unity and displayed on an Oculus Rift DK2 head-mounted display with its positional tracker. Audio was delivered through headphones. Participants interacted with the environments using their hands, tracked by a head-mounted Leap Motion sensor. Heart rate (HR) and electrodermal response (EDR) were collected by an Arduino-based biometric reader. In the haptic condition, an actor also provided tactile feedback (detailed below). Because of hardware problems, hand tracking, HR, and EDR all showed more variability than we would have liked: hand-tracking failures forced us to remove 4 participants, and the HR and EDR sensors sometimes failed to log data. The entire setup ran on a Lenovo Y50-70 laptop with an NVIDIA GeForce GTX graphics card, 8 GB of RAM, and an Intel i7 processor. The laptop was itself a constraint, as few current laptops can run Oculus-based experiences at the recommended specifications.

In every group, participants faced a console with two buttons. Each button controlled one elevator: one elevator carried a single person and the other carried four. A voice in their ear first asked them to move each elevator down to a specified position, a step intended to train them on the elevator controls. Once both elevators were in position, most of the lights went out, and the floor looked and sounded as though it were electrified. Participants then heard that there was a problem in the system and that they had to make a decision. The available choices differed by group.

Lever Group

The lever group served as a control linking our scenario to the original thought experiment. Participants in this group moved the elevators down before witnessing an “electrical malfunction.” They could either (1) let the four-person elevator fall into the electricity, killing its occupants, or (2) press the buttons so that the one-person elevator fell instead. In either case, at least one person died on contact with the electricity.

Non-Haptic Group

The non-haptic group went through the same setup as the lever group. However, once the lights went out, a worker walked onto the participant’s platform to “fix” the console in front of them. The voice in their ear then offered a choice: either (1) let the falling four-person elevator hit the electricity, killing everyone on it, or (2) reach out and push the worker in front of them, killing him but saving the four-person elevator by shorting the electricity. Participants felt nothing physical when they reached out.

Haptic Group

The haptic group went through the same setup as the non-haptic group. However, these participants were told before the experiment that they “would experience tactile feedback as they interacted with the world.” When they reached out to press the buttons at the beginning of the scenario, they felt a button surface. If they reached out to push the worker to his death, they first felt a real person, standing in the same place as the virtual worker, before physically and virtually pushing him. The voice in their ear presented the same choice the non-haptic group heard: (1) let the falling four-person elevator hit the electricity, killing everyone on it, or (2) reach out and push the worker in front of them, killing him and saving the four-person elevator. We simulated the control-panel buttons with a box lid held over a tape mark on the floor, so that its position was consistent across sessions. Later, an actor stooped in front of the participant over a second line of tape, at exactly the same distance as the virtual worker. The virtual worker’s push animation was triggered if and when the actor was physically pushed; taps on the back did not count as a push. A cushion was placed in front of the actor for safety.

Phases

We divided the scenario into six phases:

1. Experiment starts: participant is told about the elevators; used to obtain a baseline reading of the participant

2. Participant is asked to move the elevators to specified heights with the buttons; used to train participants on our interface

3. Lights go out, suggesting an electrical malfunction

4. Electricity activates: participant is told there has been an electrical malfunction; the four-person elevator begins to sway as though it will fall

5. Decision time: participant is told they can choose the outcome for the elevators and worker(s); whoever first touches the electrified floor dies

6. Post decision: participant hears the screams of the electrified worker(s), and the screen fades to black

At the end of the scenario, each participant completed a post-survey covering demographics, level of immersion, whether they would act differently in real life or in a repeated virtual scenario, and prior experience with virtual reality and knowledge of the trolley problem (see Appendix 1). After the survey, participants could ask questions about the scenario and give verbal feedback. Finally, we offered participants cake and asked them not to share their experience until we had finished data collection.

Results

Death Cases

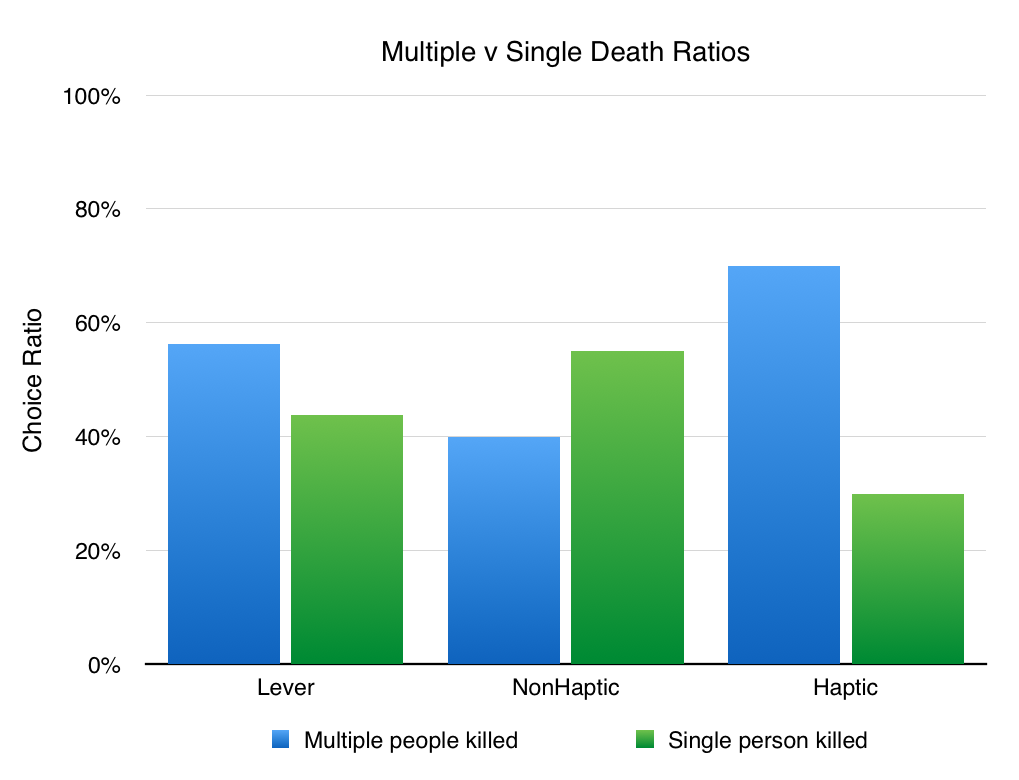

Non-haptic participants pushed the worker 55% of the time, whereas haptic participants did so 30% of the time. A chi-squared test found the difference between the haptic and non-haptic groups to be non-significant (p = .1573). However, we suspected that the chi-squared approximation is unreliable at our sample size, so we also ran Fisher’s exact test, which computes the p-value exactly rather than approximating it. Fisher’s exact test finds the difference between the haptic and non-haptic groups to be significant at α = .05 (p = .0014).
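Both tests operate on a 2×2 table of counts (group × decision). The sketch below implements them with the Python standard library only, so the arithmetic is visible; `fisher_exact_two_sided` and `chi_squared_2x2` are illustrative names, and in practice `scipy.stats.fisher_exact` and `scipy.stats.chi2_contingency` provide the same tests. The counts in the usage block are an assumption: 11 of 20 and 6 of 20 are what 55% and 30% would correspond to if each group contained the 20 participants targeted in Procedure, since the raw per-group counts are not reported here. The same function applies to the lever-group comparison with Navarrete et al. (133 of 147) once the lever group’s raw counts are known.

```python
from math import comb, erfc, sqrt


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    With all margins fixed, cell a follows a hypergeometric distribution.
    The two-sided p-value sums the probabilities of every table that is
    no more likely than the observed one.
    """
    row1, col1, n = a + b, a + c, a + b + c + d
    lo, hi = max(0, row1 + col1 - n), min(row1, col1)
    # Integer numerators C(col1, x) * C(n - col1, row1 - x) keep ties exact.
    weights = {x: comb(col1, x) * comb(n - col1, row1 - x) for x in range(lo, hi + 1)}
    observed = weights[a]
    extreme = sum(w for w in weights.values() if w <= observed)
    return extreme / comb(n, row1)


def chi_squared_2x2(a, b, c, d, yates=False):
    """Pearson chi-squared test (1 df) for a 2x2 table; returns (statistic, p)."""
    n = a + b + c + d
    diff = abs(a * d - b * c)
    if yates:
        # Yates continuity correction, floored at zero.
        diff = max(0.0, diff - n / 2)
    stat = n * diff ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # For 1 degree of freedom, P(chi2 > x) = erfc(sqrt(x / 2)).
    return stat, erfc(sqrt(stat / 2))


if __name__ == "__main__":
    # Assumed counts (not reported directly): 55% and 30% of 20 per group.
    # Rows: non-haptic, haptic; columns: pushed, did not push.
    table = (11, 9, 6, 14)
    print("Fisher two-sided p:", fisher_exact_two_sided(*table))
    print("chi-squared (stat, p):", chi_squared_2x2(*table))
    print("chi-squared with Yates (stat, p):", chi_squared_2x2(*table, yates=True))
```

Comparing exact integer numerators avoids floating-point noise when deciding which tables are “as extreme” as the observed one.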

In the lever group, 44% of participants chose to sacrifice the one-person elevator. Compared with the action condition of Navarrete et al. (2012), in which 133 of 147 participants chose the utilitarian outcome of killing the single person, our lever condition differs significantly (Fisher’s exact test, p = .000026).

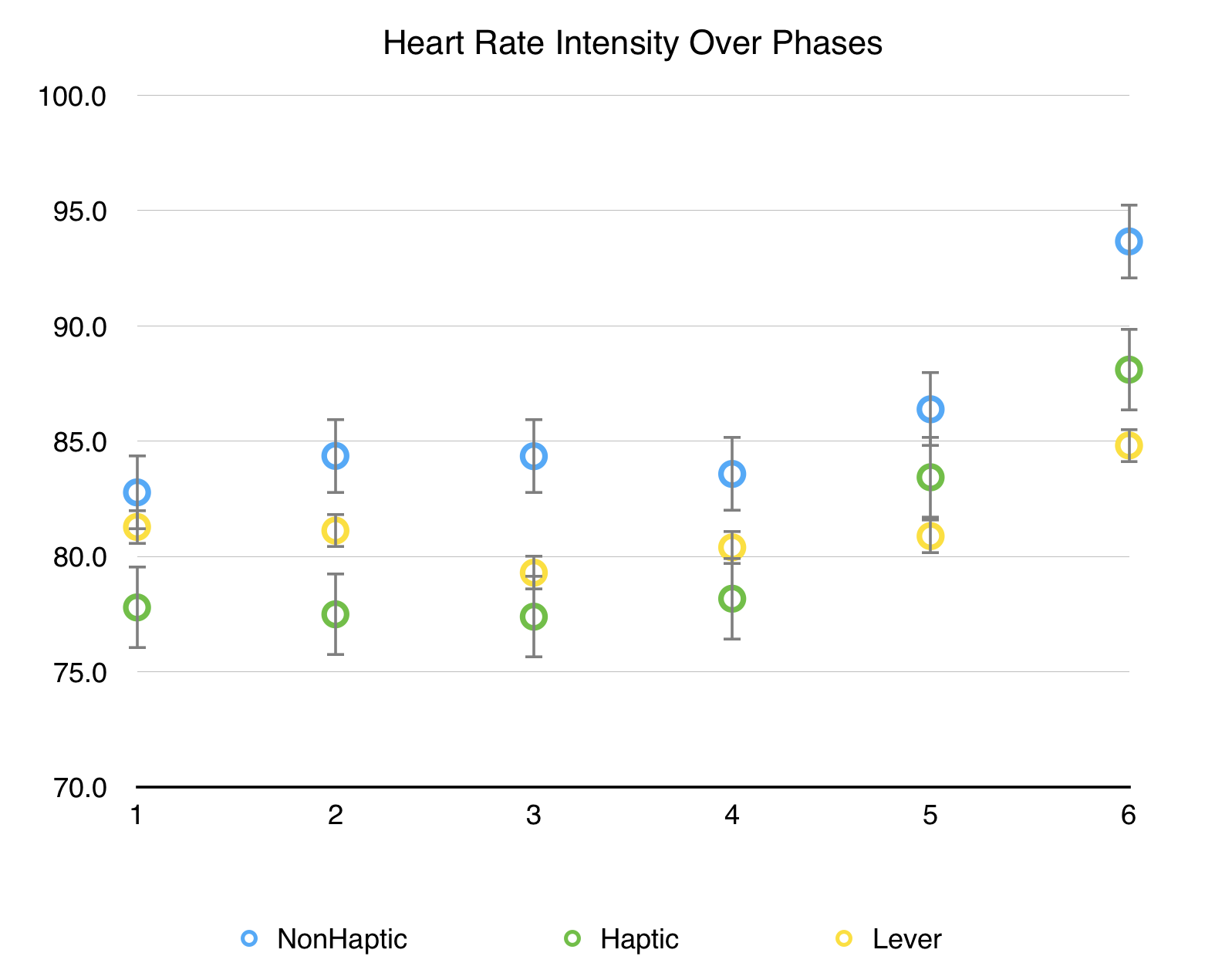

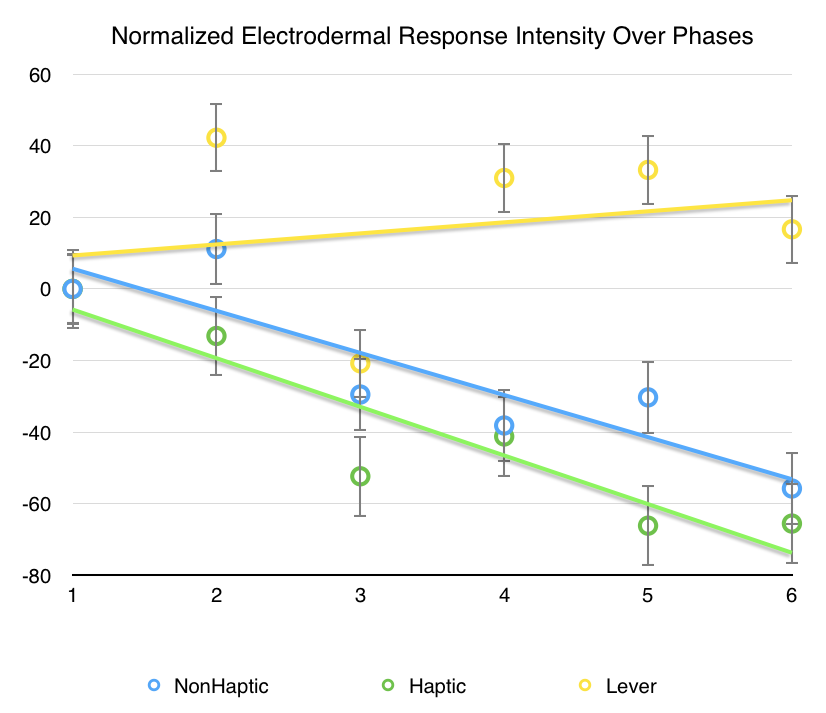

Heart Rate & Electrodermal Response

Heart rate (HR) and electrodermal response (EDR) data were collected during each phase of the scenario. The figures above show per-phase averages for each participant group, with standard errors. The bottom two figures show the normalized responses, using the phase 1 average as the baseline. After normalization, participant heart rate rose over the phases, reaching approximately 10 beats per minute above baseline in the final phase. In addition, relative heart rate during the decision period (phase 5) was higher when the decision involved pushing a worker than when it involved pressing buttons.
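A minimal sketch of the baseline normalization described above. It assumes readings are stored per participant as a mapping from phase number (1 to 6) to a list of samples, and that “normalized” means subtracting the phase 1 mean, which matches reporting the result as additional beats per minute. The function names are hypothetical, not the analysis code used for the figures.

```python
from math import sqrt
from statistics import mean, stdev


def phase_means(samples):
    """Average one participant's readings per phase.

    `samples` maps phase number -> list of readings (e.g. bpm). Phases with
    no logged data, a known hardware issue in this study, are skipped.
    """
    return {phase: mean(values) for phase, values in samples.items() if values}


def baseline_normalize(means, baseline_phase=1):
    """Express each phase mean as a difference from the baseline phase mean."""
    base = means[baseline_phase]
    return {phase: m - base for phase, m in means.items()}


def group_summary(normalized_by_participant, phase):
    """Mean and standard error of one phase's normalized value across a group."""
    values = [p[phase] for p in normalized_by_participant if phase in p]
    sem = stdev(values) / sqrt(len(values)) if len(values) > 1 else 0.0
    return mean(values), sem
```

Normalizing per participant before averaging removes differences in resting heart rate between people, so the group curves reflect change over the scenario rather than individual baselines.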

EDR, in contrast, decreased over the phases. We expected EDR to increase with arousal: the limbic system triggers a sweat response to arousal, which can be measured as EDR via skin conductance.

Survey

Just over half of participants had used virtual reality before (59%), and a similar proportion had heard of the trolley problem (57%).

For a series of questions, we used a five-point Likert scale coded as: -2 = Strongly disagree, -1 = Somewhat disagree, 0 = Neither agree nor disagree, 1 = Somewhat agree, 2 = Strongly agree.
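The coding above is a simple lookup table. This sketch assumes the reported SDs are sample standard deviations (n − 1 denominator), which is what Python’s `statistics.stdev` computes; `LIKERT_CODES` and `summarize` are illustrative names.

```python
from statistics import mean, stdev

# Five-point Likert coding used in this section.
LIKERT_CODES = {
    "Strongly disagree": -2,
    "Somewhat disagree": -1,
    "Neither agree nor disagree": 0,
    "Somewhat agree": 1,
    "Strongly agree": 2,
}


def summarize(responses):
    """Code text responses to -2..2 and return (M, SD), with SD using n - 1."""
    codes = [LIKERT_CODES[r] for r in responses]
    return mean(codes), stdev(codes)
```

For example, the responses “Somewhat agree”, “Strongly agree”, and “Neither agree nor disagree” code to 1, 2, and 0.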

When asked whether they cared about the outcome, participants in the non-haptic group averaged around “somewhat agree” (M: 1.0, SD: 0.76), while participants in the haptic group averaged halfway between “somewhat agree” and “neither agree nor disagree” (M: 0.50, SD: 1.15). When asked whether they would make the same decision in real life, the non-haptic group (M: 0.40, SD: 0.82) again scored higher than the haptic group (M: 0.25, SD: 1.02).

For “I enjoy video games,” every group had a mean at or above 1.25 (lever: M: 1.25, SD: 0.86; non-haptic: M: 1.55, SD: 0.76; haptic: M: 1.55, SD: 0.76). Agreement with “I play video games often” was higher in the haptic group (M: 1.20, SD: 0.95) than in the non-haptic group (M: 0.80, SD: 1.15), although “often” was not defined for participants.

For each Likert-scale question, we ran a two-tailed t-test assuming unequal variances (Welch’s t-test) to look for differences between haptic and non-haptic responses. No Likert-scale question showed a statistically significant difference between groups.
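A sketch of Welch’s t statistic and the Welch-Satterthwaite degrees of freedom using only the standard library; the two-tailed p-value then comes from the t distribution with those degrees of freedom. With SciPy available, `scipy.stats.ttest_ind(a, b, equal_var=False)` performs the whole test. The helper name `welch_t` is hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a, b):
    """Return Welch's t statistic and Welch-Satterthwaite degrees of freedom.

    The two-tailed p-value is 2 * P(T_df > |t|), e.g. from a t table or
    2 * scipy.stats.t.sf(abs(t), df).
    """
    # Squared standard error of each group mean (sample variance / n).
    va = variance(a) / len(a)
    vb = variance(b) / len(b)
    t = (mean(a) - mean(b)) / sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df
```

Welch’s version does not pool the two variances, which suits Likert responses whose spread differs between groups (for example, SD 0.76 versus 1.15 above).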

In the open feedback section, some participants expressed frustration with the interface design or with hardware errors, and some expressed sadness. Reported immersion varied widely. Raw feedback is reproduced in Appendix 2.

Discussion

Haptic & Non-Haptic Choices

Participants in the haptic and non-haptic groups responded significantly differently to the choice of pushing a worker. Haptic participants were hesitant to push the worker to his death; some changed their minds after reaching out and touching the actor. Taken together with the survey and open feedback, these results lead us to conclude that haptic feedback changes moral decision making in virtual environments. Participants only slightly agreed that they would make the same decision in “real life,” but we give this response little weight: the gap between stated choices and actual (immersive) choices was one of the motivations for this experiment. We also note that participants who did not feel immersed seemed to respond more arbitrarily (e.g., “I wanted to see what would happen if I pushed the worker”).

Hardware Issues

Some of our data are highly variable because of hardware glitches. We ran this experiment on a short timeline and with limited equipment. Future studies would benefit from more immersive scenarios with better motion tracking, more realistic graphics, and a faster frame rate, and from letting users walk around, potentially even jumping off the platform in the worker’s place.

Our EDR measurements decreased over successive phases of the experiment. This is the opposite of what we expected, since EDR increases with arousal. We attribute this to the poor construction of our sensor and consider the EDR data unreliable.

Lever Group

Our lever group results do not match previous empirical work. We suggest three causes: (1) our hardware difficulties, (2) our virtual interface, and (3) how people behave in stressful situations. First, problems with positional hand tracking, including floating “ghost” hands mistakenly picked up by the sensor, distracted participants from the choice and, for some, broke immersion. Second, although we designed our interface to be simple and usable after a short training period (before the electricity starts), many lever-group participants reported that they did not understand why they could not save the people they wanted to. One misunderstanding recurred: participants expected pressing a button to stop its corresponding elevator, the opposite of how our interface worked. Third, we posit that when people are in stressful environments and lives are on the line, they struggle to work out quickly how to achieve a good outcome, especially with a novel interface. Participants in the haptic and non-haptic groups found it easier to express their choice in a way the system understood. So, while our lever group results are confounded, the scenario illuminates a real-world factor missing from the textual version of the dilemma: the inability to respond effectively.

Level of Immersion

Participants reported a range of immersion levels. Some told us that, although they had read about the trolley problem, experiencing it affected them much more; these participants left the room saddened. Others reported that the whole thing felt like a game or extremely fake. We attribute this largely to our hardware limitations and the current state of virtual reality technology, and we expect higher degrees of immersion as the technology improves.

“I want to jump.”

Some participants told us after their session that they would have jumped themselves but would not push someone else. Future research could let users move freely and even jump to their own virtual death.

Conclusion

After running our variant of the trolley problem, we find that participants are less likely to push a person to his or her death when they can feel that person through tactile feedback. Our work goes beyond previous studies in experimental philosophy by immersing the moral actor in a virtual world. Our findings have implications for military applications, where users make life-or-death decisions far removed from the physical and visceral act of killing. They also have implications for the entertainment industry, as consumer virtual reality headsets are about to reach the market. As more of our information and decisions are mediated by intangible interfaces, we should expect our moral decisions to change as well. We hope our work serves as a starting point and inspiration for other researchers in this field.

Acknowledgements

We’d like to acknowledge the people who helped make this project a reality. Tom Corbett (Electronic Arts, Entertainment Technology Center at Carnegie Mellon University) and our fellow classmates helped us refine the project over the course of six weeks. Jessica Hammer (Human-Computer Interaction and Entertainment Technology Center at Carnegie Mellon University) is our knowledgeable faculty advisor. Mike Christel (Entertainment Technology Center at Carnegie Mellon University) and Jessica Hammer helped us work with the Institutional Review Board. Paula Halpern (College of Fine Arts at Carnegie Mellon University) helped with voice acting. We thank them all.

Citations

Foot, Philippa (1967). The problem of abortion and the doctrine of the double effect. Oxford Review, No. 5. Reprinted in Virtues and Vices.

Mill, J. S. (1863). Utilitarianism. London, U.K.: Parker, Son, and Bourn.

Navarrete, C. D., McDonald, M. M., Mott, M. L., & Asher, B. (2012). Virtual morality: Emotion and action in a simulated three-dimensional trolley problem. Emotion, 12, 364–370. doi:10.1037/a0025561

Parsons, T. D., & Rizzo, A. A. (2008). Affective outcomes of virtual reality exposure therapy for anxiety and specific phobias: A meta-analysis. Journal of Behavior Therapy and Experimental Psychiatry, 39, 250–261. doi:10.1016/j.jbtep.2007.07.007

Singer, Peter (2005). Ethics and intuitions. The Journal of Ethics.

Thomson, Judith Jarvis (1985). The trolley problem. Yale Law Journal, 94, 1395–1415. doi:10.2307/796133

Appendix 1: Decision Making in Virtual Environments - Post Survey Questions

For Likert-scale questions, Google Forms randomized the order of the statements within each block for every survey. Our scale was: Strongly disagree, Somewhat disagree, Neither agree nor disagree, Somewhat agree, Strongly agree.

To what extent do you agree or disagree with the following statements? [Likert scales]

In the scenario, I cared about the outcome.

In the scenario, I would make the same decision again.

I would make the same decision I made in the scenario in real life.

I expected to feel something physical when I reached out in the scenario.

To what extent do you agree or disagree with the following statements? ("Video games" include console games, mobile games, and online games.) [Likert scales]

I was physically comfortable during the experiment.

I was emotionally comfortable during the experiment.

I enjoy video games.

I play video games often.

Have you experienced virtual reality before today?

Have you heard of the Trolley Problem?

Which program and school are you in at Carnegie Mellon? (What you are studying.)

Your program is for

Undergraduates

Masters

PhDs

Post Doctorates

Other: open text answer

Age in years

Gender

Ethnicity

Is there anything else you'd like to comment on about the experiment?

Appendix 2: Open Survey Feedback

Is there anything else you'd like to comment on about the experiment?

Twas interesting.

I had some trouble understanding what to do when the malfunction happened. I think I misheard and wasn't sure how to save anyone. I was trying to save the platform with multiple construction workers on it, but I alternated buttons because I wasn't sure if anything I was doing was working.

Better graphics? just kidding. / I was expecting it to be longer

To give clearer instructions about how you have to see the hands for pressing buttons

It was kind of confusing on what to do since I had never used VR before.

Weight of the ear heart rate monitor distracting/uncomfortable. Having it hooked to the head gear to support its weight would be nice.

When informed about tactile feedback I had expected it to come in the form of the pads on my fingers which is what I think took me aback most about feeling something physical in front of me.

That was really difficult to choose.

It was funny.

The immersion was really cool, though me having to decide between those two options took me out a little - in real life I would probably ask him or let him know, double check that it actually would save the other people. If time was slowed down and it was really a split-second decision where you wouldn't have this time to do anything else I think I might have responded differently.

I was somewhat surprised to be spared consequences for not acting.

Touching things was harder in the experiment than in real life, which took away from the experience a little.

I think my knowing about the trolley made it less real.

Fun! The announcer's voice could have been louder/clearer.

I thought it was really cool! Not a very useful comment, but I thought I'd let you guys know.

Fingers don't display correctly all the time.

Fun

Kept thinking that I wasn't supposed to put my hand any farther since I didn't have a good reference for distance, and the sensor didn't seem particularly reliable from when I waved my hand in front of it.

Hands were not very responsive so I suppose that could have impacted the immersion.

It was rather strange to have the worker appear as if he had walked through me.

Good one - just tht it could hve been a tad bit longer - got over just when I was beginning to be comfortBLE :) AWESUM!

I wanted to lower the trolley with only one person in it but everything happened so quickly and I forgot how the controls worked and tried to lower the one-person trolley so only one person would die but while I was trying to figure that out the other trolley with three people in it hit the floor and electrocuted everyone.

I WOULD HAVE JUMPED IF THAT WAS A POSSIBILITY. I'D SACRIFICE MYSELF BUT NOT SOMEONE ELSE.

IT WAS REALLY DISORIENTING, I COULDN'T FOCUS ON THE TASKS BECAUSE I WAS SO FOCUSED ON THE EXPERIENCE AND THE SMALL DETAILS. ALSO, SOME OF THE THINGS MENTIONED I DIDN'T KNOW. EX- I DIDN'T KNOW WHAT THEY MEANT BY BAR.

T-T I'm sorry guys on the elevators

When the work first arrives to fix the control panel it seems as if he is walking right through the space I was occupying

Framerate was fairly low, which can lead to a lot of discomfort and lack of presence, with a powerful computer there's almost no lag and it's a lot more immersive, to the point where people are afraid to say, walk off of a virtual building. But understandably those are expensive.

The buttons were hard to press so I was not able to properly control the movement of the trolleys

Put me in a tough spot! Nicely conducted experiment! :)

Experiencing a simulation of the Trolley Problem was much more poignant for me than just discussing it in a hypothetical discussion.

The ear piece falls off too easily, and constrains freedom of motion.

I wasn't expecting to have to make a choice like that.

I was a little confused during the "decision making" session. I didn't know what I was supposed to do to "make a decision" therefore I felt that I didn't make that decision completely on my own.

I thought the design of the game was really well done. I think it was a bit confusing in the beginning because I expected the button to be closer. However, I thought the graphics where really good and well thought-out it made me feel like I was actually in the situation. Also, the voice choice was good to make the experiment feel more realistic and urgent.

This experiment was particularly interesting to me as I'm a Design and HCI major and especially interested in designing video games but also designing virtual reality experiences (I just got an Oculus but haven't done anything yet with it so this was cool). There were lots of glitches with the game itself. For a good portion of the experiment, an image of my hand was floating directly in front of me severed at the wrist? It was there for most of the time that the worker was in front of me. The hand motion was not right for holding onto or twisting the buttons and it felt really weird to be grasping for nothing also there wasn't any feedback for me to know that I had actually pushed the worker until I felt what was like someone's stomach (I'm guessing it was one of the people in this room standing in front of me). Overall, I had no reactions to the actual point of pushing the worker. There was no guilt or emotion or anything probably because nothing felt real enough.

nope

:(

WHAT???

I got a bit panicked for time when they said to push the person because the left trolly looked like it was going to fall. but at the same time i didn't wait to see if it gave me any other options. And then I was confused as to what I should be doing and believing that this is just a game I decided to push the guy based on numbers. I do not know if I would actually do the same in a real life scenario.

The initial section was more unnerving than it might have needed to be, or perhaps, if it was intentional, it was effective in inducing slight fear / disorientation in the user

Some of the instructions were a little hard to hear. So I wasn't quit sure what they were asking. I had trouble with hitting the buttons. So, I ended up saving the cart with only one person, but my intention was to save the one with many.