Positioning math

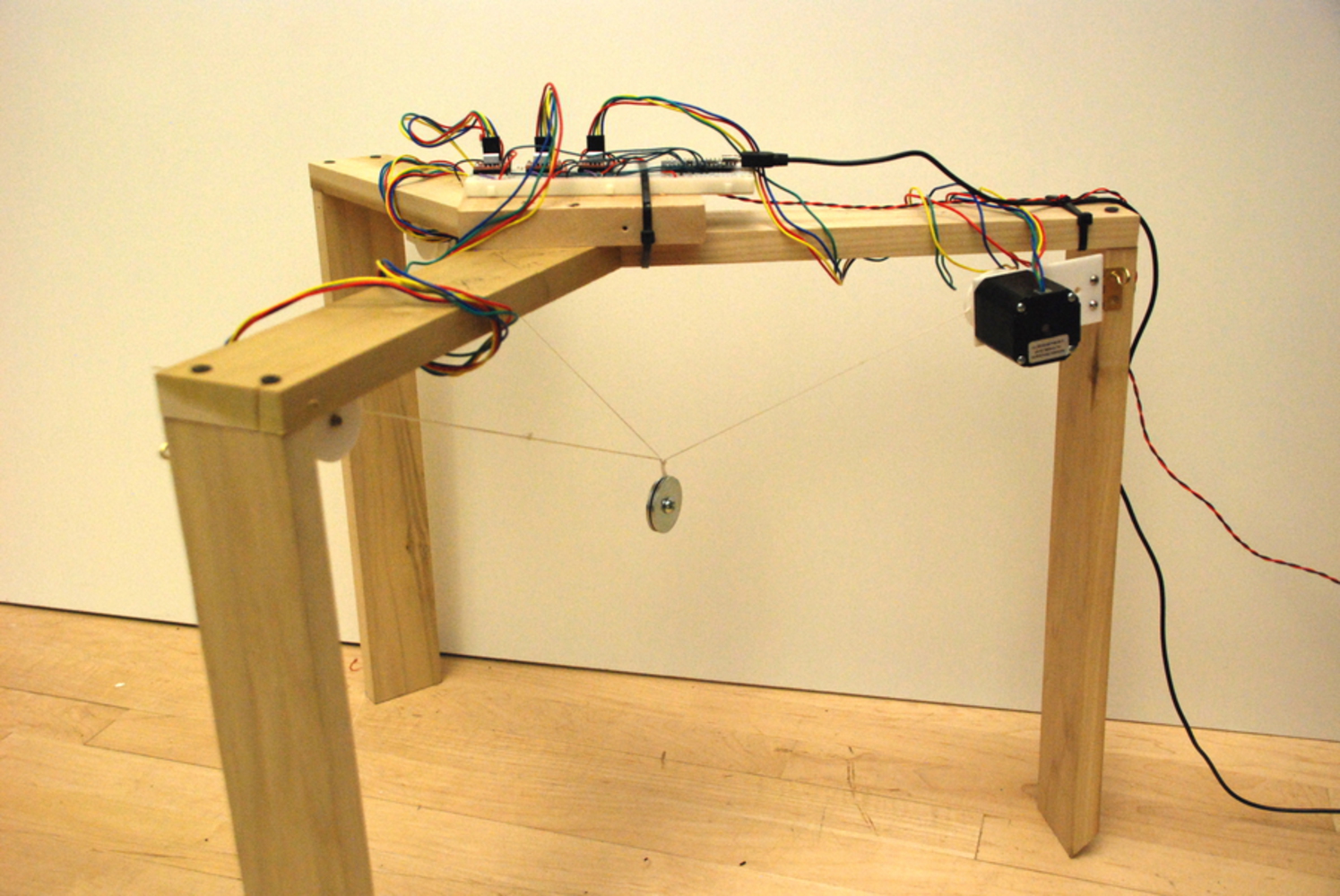

The inverse kinematics for moving the three motors into the right positions isn't as hard as I originally suspected it would be. The operation of the cable-driven system can be conceptualized simply as three line segments of different lengths, each connecting the lightbulb to one of the motors.

The question is: given a particular destination for the lightbulb, what position should each motor rotate to? Reformulated, the question is really: how far is the desired lightbulb position from each of the three motors? That turns out to be an especially easy question to answer.

To find the distance between any two points, in a space with any number of dimensions, simply apply the distance formula! In a 2D Cartesian plane, this is familiar from 8th-grade math:

D = sqrt( (x1 - x2)^2 + (y1 - y2)^2 ).

In words, the distance between two points is the square root of the x difference squared plus the y difference squared. So how far is the point (8, 4) from (13, 12)? It's sqrt( (8-13)^2 + (4-12)^2 ) = sqrt(25 + 64) = sqrt(89), or ~9.4.

How does this generalize into three-dimensional space? Very simply!

D = sqrt( (x1 - x2)^2 + (y1 - y2)^2 + (z1 - z2)^2 ).

Just add the z term. For instance, what's the distance from the point (8, 4, 3) to (13, 12, -5)? It's sqrt( (8-13)^2 + (4-12)^2 + (3-(-5))^2 ) = sqrt(25 + 64 + 64) = sqrt(153), which is ~12.4. No matter how many dimensions you're doing math in, you can find the distance between two points like this.

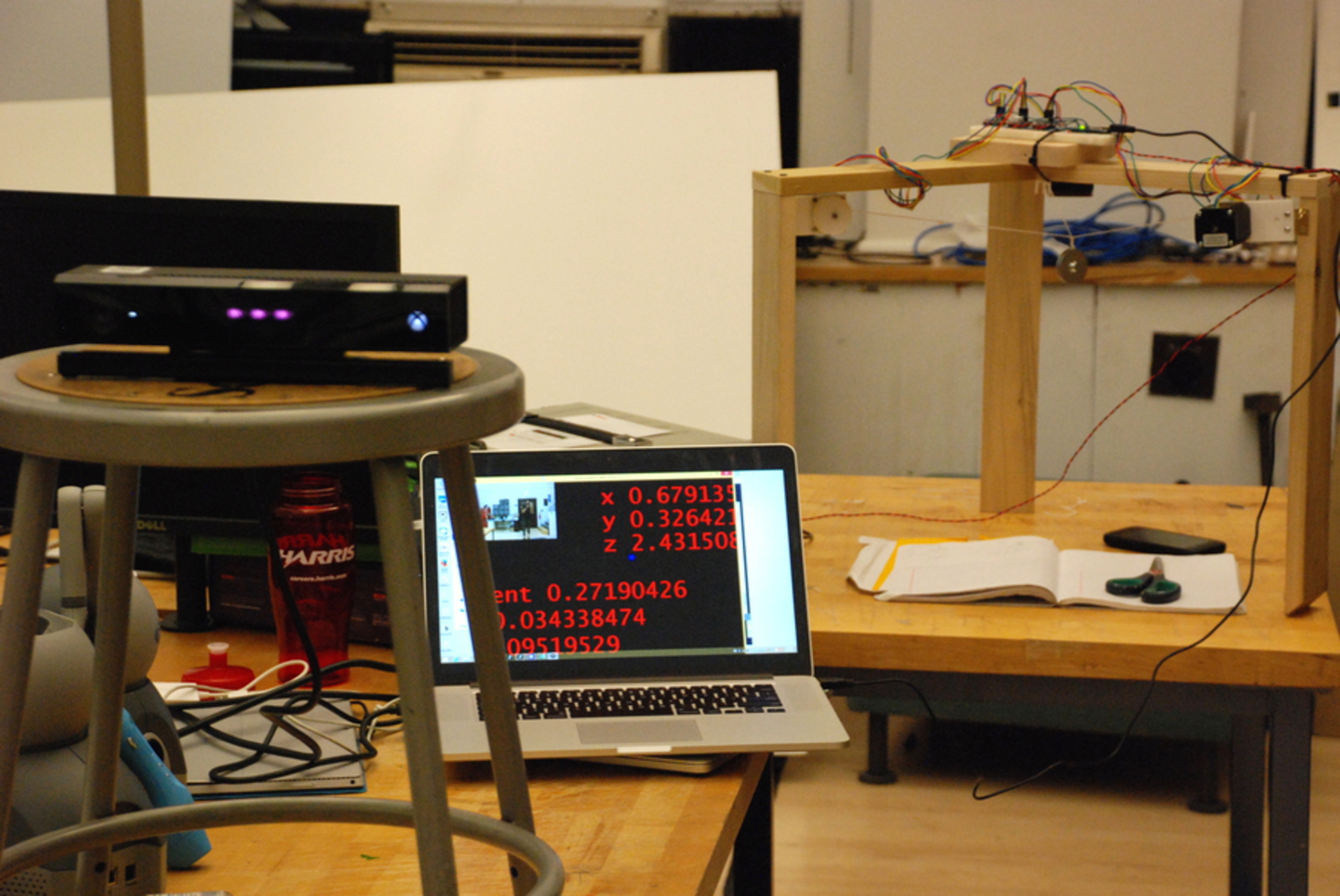

The function below, threespaceToTriangle, takes three arguments: the x, y, and z coordinates of the desired lightbulb position. It uses the 3D distance formula to calculate the appropriate position for each of the three motors. The motor positions are baked in as fixed starting points, and the input coordinates serve as the other point to measure distance to. (It loads the resulting values into a previously declared array, tri[], because C functions cannot return multiple values directly. You can get around this with pointers or by returning a struct, but I wanted to keep it simple.)

Note that in the coordinate system I built, one unit is defined as the distance from the center of the top triangle (the one formed by the three motors) to any one of its vertices.