Intention

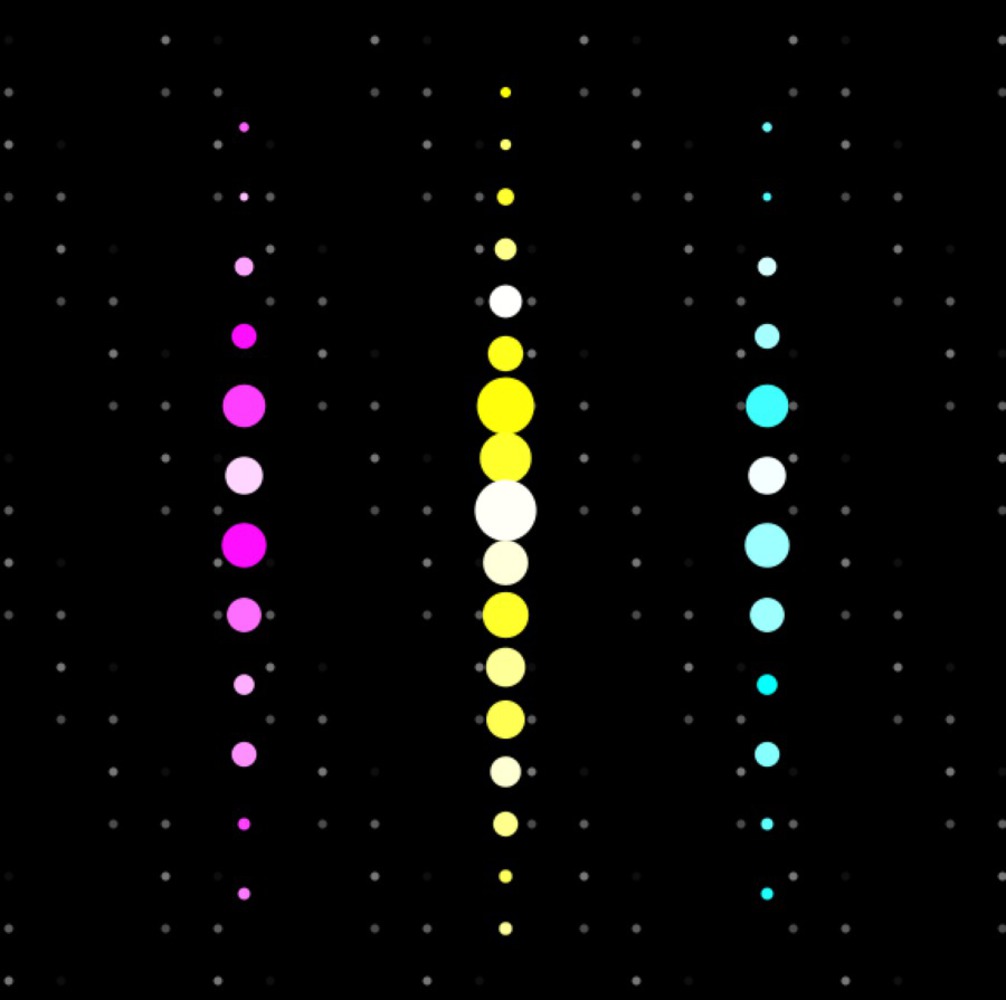

The idea of this project was twofold: to allow the audience to create their own audio while simultaneously visualizing what they were making. We wanted to make rhythm visible as vibrant visual patterns. Beyond emphasizing the link between audio and visuals, we also wanted to create a unified experience for the audience as they came together to produce the sounds that generated, in real time, the visuals on the screen in front of them. We were motivated by a desire to encourage collaboration and to create an engaging experience, one in which the audience could actively participate and clearly witness the effects of their participation. We believed that combining interactivity with performance art in this way would make for a unique experience.