Process

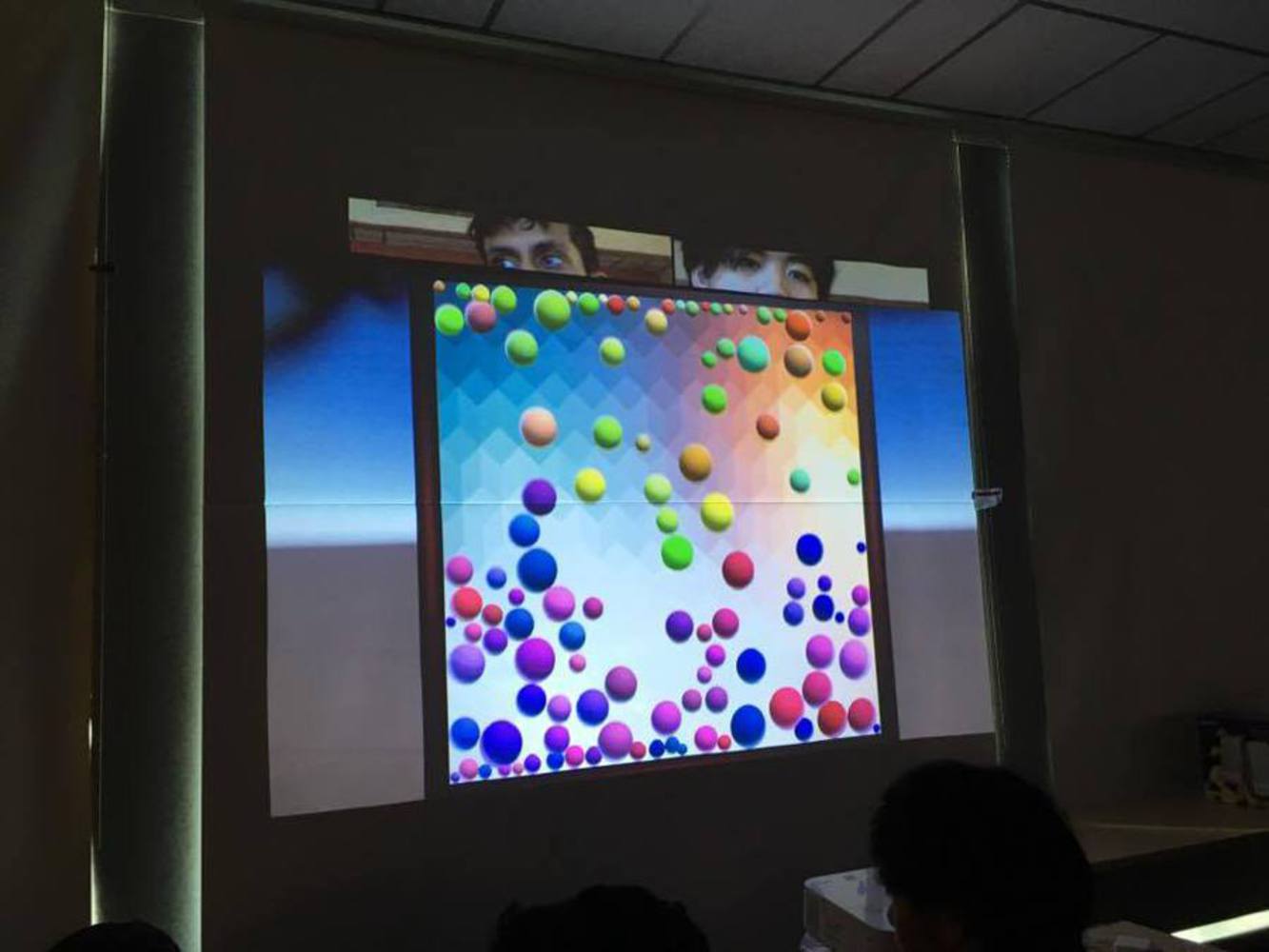

We chose Unity to visualize the eye blinks. A participant triggers a new ball by clicking the mouse when they see the other participant blink. Each mouse click plays a random note between C3 and C5, and the Unity engine randomly generates the color and size of each ball. Each participant triggers a different color scheme of balls during the experience. We tried to combine the sounds and the visual effects in the program so that the process of blinking is presented naturally to the audience.
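The click-to-ball mapping described above can be sketched roughly as follows. This is an illustration in Python rather than our Unity code, and it rests on several assumptions: notes are picked chromatically using MIDI numbering with middle C (C4) at 60, and the palettes, the radius range, and the names `PALETTES`, `Ball`, and `spawn_ball` are all hypothetical.

```python
import random
from dataclasses import dataclass

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Assumption: MIDI numbering where middle C (C4) is note 60.
C3_MIDI = 48
C5_MIDI = 72

# Hypothetical color pools (RGB), one per participant.
PALETTES = {
    "A": [(255, 99, 71), (255, 165, 0), (255, 215, 0)],
    "B": [(70, 130, 180), (0, 191, 255), (123, 104, 238)],
}


def midi_to_name(midi_note: int) -> str:
    """Convert a MIDI note number to a name such as 'C3'."""
    octave = midi_note // 12 - 1
    return f"{NOTE_NAMES[midi_note % 12]}{octave}"


@dataclass
class Ball:
    note: str
    color: tuple
    radius: float


def spawn_ball(participant: str, rng: random.Random) -> Ball:
    """Create one ball for a mouse click by the given participant."""
    midi_note = rng.randint(C3_MIDI, C5_MIDI)    # inclusive on both ends
    color = rng.choice(PALETTES[participant])    # participant-specific pool
    radius = rng.uniform(0.2, 1.0)               # hypothetical size range
    return Ball(midi_to_name(midi_note), color, radius)


if __name__ == "__main__":
    rng = random.Random()
    print(spawn_ball("A", rng))
    print(spawn_ball("B", rng))
```

Keeping a separate palette per participant is what lets the audience tell at a glance whose click produced which ball.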

We also designed an algorithm that adjusts the number of balls generated based on the time interval between two blinks. More specifically, when the two participants blink in rapid succession, or when they blink at the same time, several colorful balls burst out on the board. We did this intentionally to emphasize the interaction between the participants.
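A minimal sketch of this interval rule, again in Python for illustration: it assumes blink events from both participants arrive on one shared timeline in time order, and the thresholds and burst sizes are placeholder values, not the ones used in the piece. The names `balls_for_interval` and `BlinkTracker` are hypothetical.

```python
def balls_for_interval(
    interval_s: float,
    burst_window_s: float = 0.3,   # assumed: blinks this close count as simultaneous
    fast_window_s: float = 1.0,    # assumed: blinks this close count as fast
    burst_size: int = 5,
    fast_size: int = 3,
) -> int:
    """Return how many balls to spawn given the gap between two blinks."""
    if interval_s < 0:
        raise ValueError("interval must be non-negative")
    if interval_s <= burst_window_s:
        return burst_size
    if interval_s <= fast_window_s:
        return fast_size
    return 1


class BlinkTracker:
    """Remembers the previous blink time and sizes each new burst."""

    def __init__(self) -> None:
        self.last_time_s = None

    def register(self, timestamp_s: float) -> int:
        """Record a blink at timestamp_s and return the number of balls to spawn."""
        if self.last_time_s is None:
            count = 1  # first blink has no interval to compare against
        else:
            count = balls_for_interval(timestamp_s - self.last_time_s)
        self.last_time_s = timestamp_s
        return count


if __name__ == "__main__":
    tracker = BlinkTracker()
    for t in (0.0, 0.1, 0.9, 3.0):
        print(t, tracker.register(t))
```

The shorter the gap between blinks, the larger the burst, so near-simultaneous blinks from the two participants produce the most visible effect.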

Timeline:

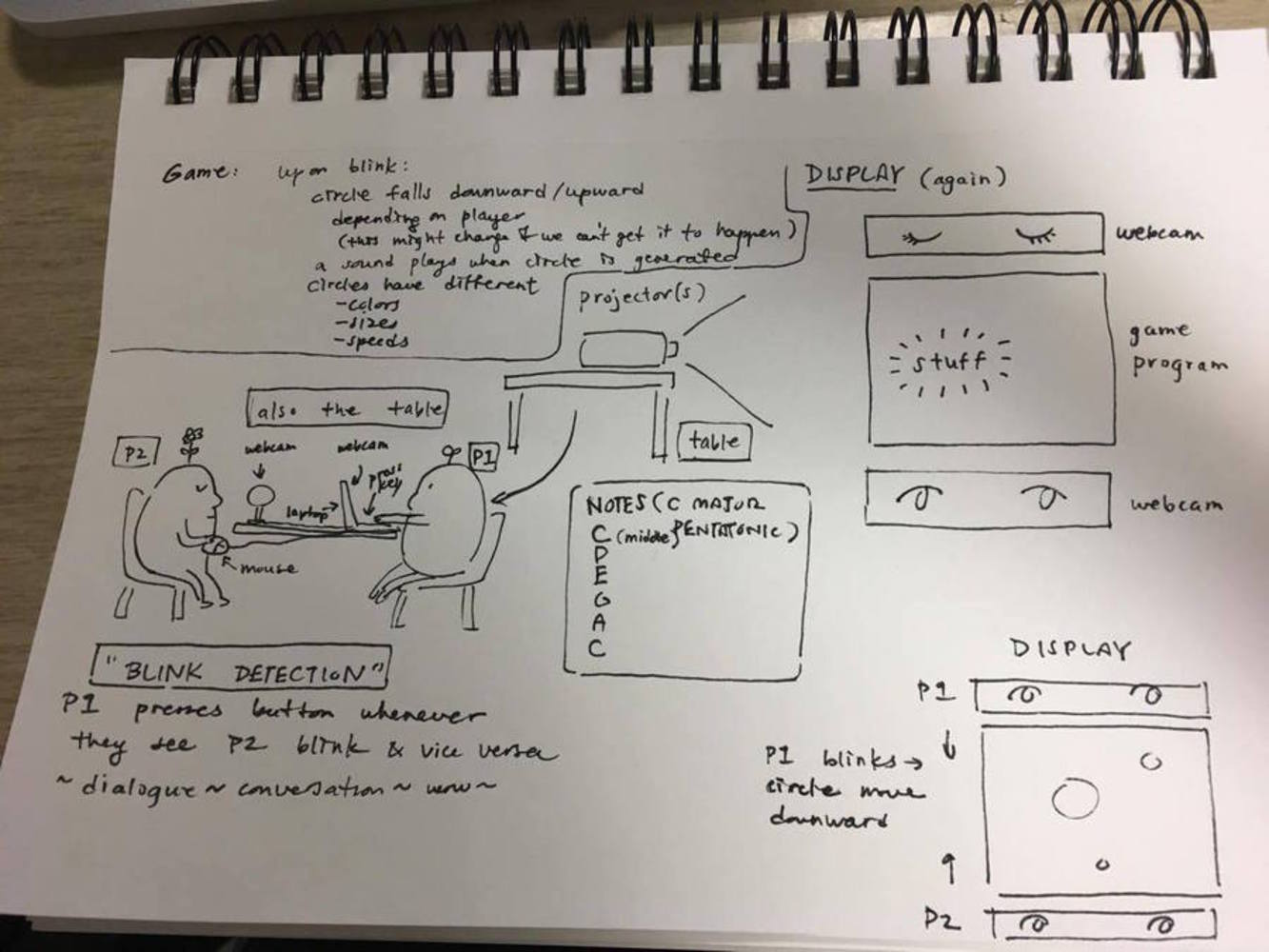

Initially, the ideas for the eyeblinks were variations on a sort of eyeblink game in which two people would try to synchronize their eyeblinks. Making such a visualization visually appealing yet fast turned out to be challenging, and we considered several ideas. For example, at one point we considered a sort of rhythm-matching game in which one eye from each performer would blink as a pair of eyes, and the goal would be to match average eyeblinks while making the blinks as similar as possible. However, we determined that the scale of such a project was larger than what we could reasonably finish. After multiple reevaluations, we considered a variant of a "game" in which circles would drop, with their sizes and masses determined by the accuracy of the blinks. That was still too complicated to implement, so we finally settled on a variant of the idea in which the two participants in the performance try to match a button click to the eyeblink of the other person. Each click would be visualized as one of two different kinds of sphere, with color and size selected from two different pools of color.

After the simulation (in Unity) was finished, we worked to combine the projections of the eyes and the game screen using a program called Millumin. However, a few obstacles appeared while implementing the visuals. The first was that we attempted to attach a second camera in order to capture the two sets of eyes independently. Unfortunately, we were using an IDeATe computer for the project, and the cameras required software that was not already installed, so we were unable to use two cameras. In the end we settled on using the single FaceTime camera to capture two pairs of eyes, one on either side of the camera. Although the zoomed-in images were slightly grainy, they were effective in showing the eye movements (albeit with a slight delay attributed to the software and the camera).

The second and final problem was that the program was unable to show the desktop together with the camera views. However, we quickly worked around this by layering two projectors on top of each other, and the resulting visual quality was reasonable.