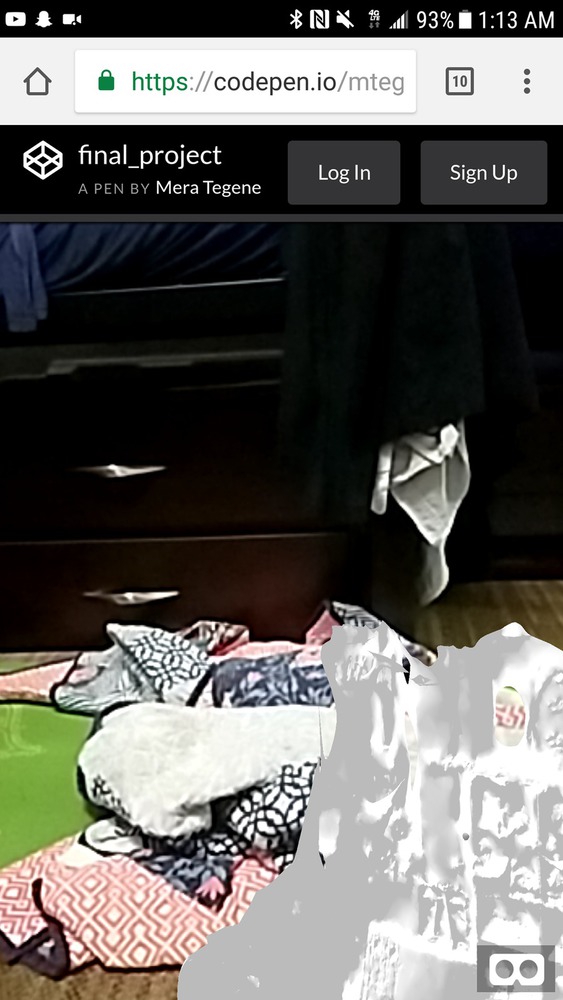

DISCLAIMER: Not all of the technology my project needs to work as envisioned exists or is readily available right now (object recognition, for example), so for now my project is in a sense "hard-coded" to work in only one specific place (one room). The hope is that, as the technology advances and becomes more readily available, I can drop it into the project and make it work as envisioned.

GENERAL PROJECT PROCESS DESCRIPTION:

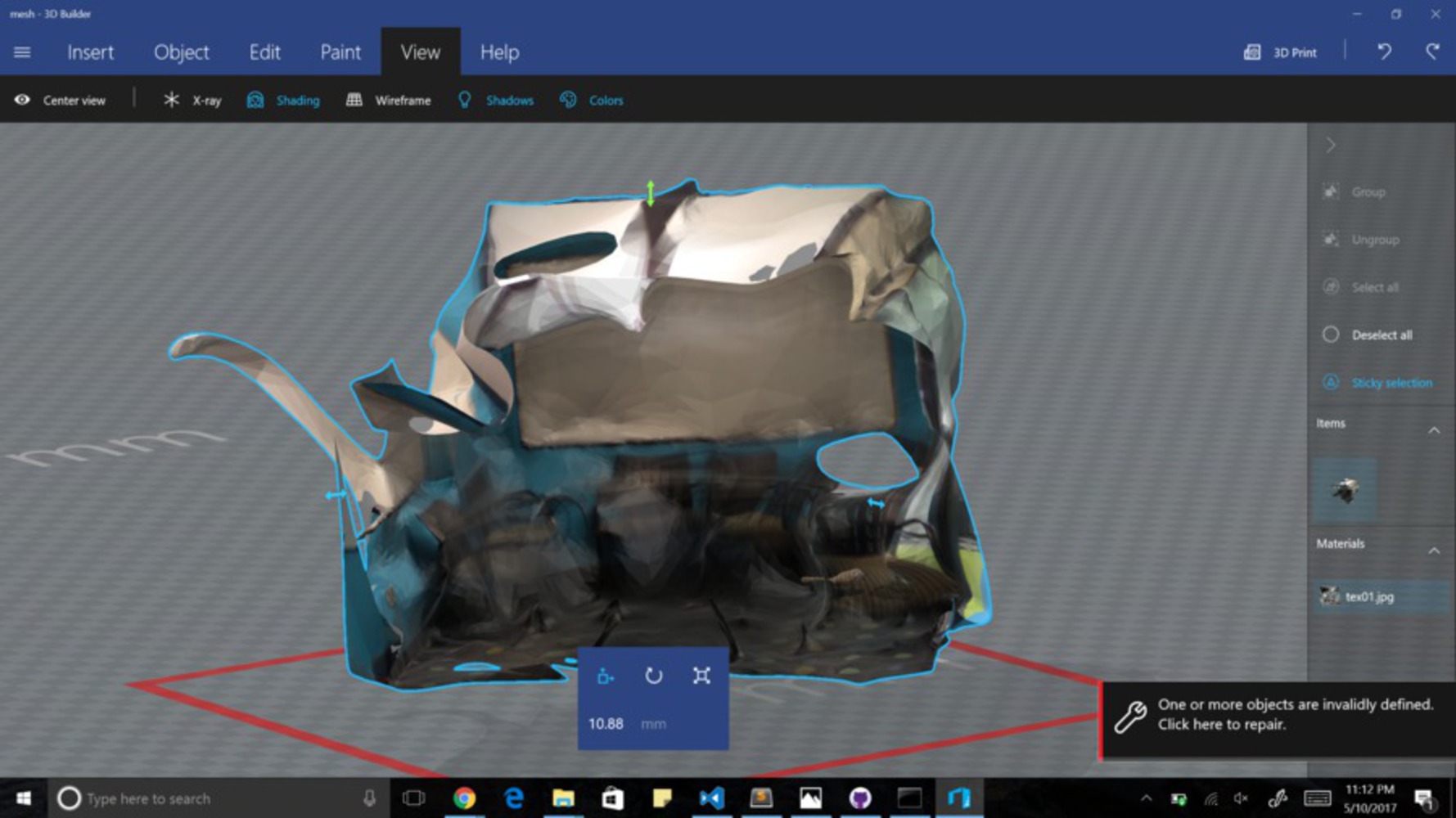

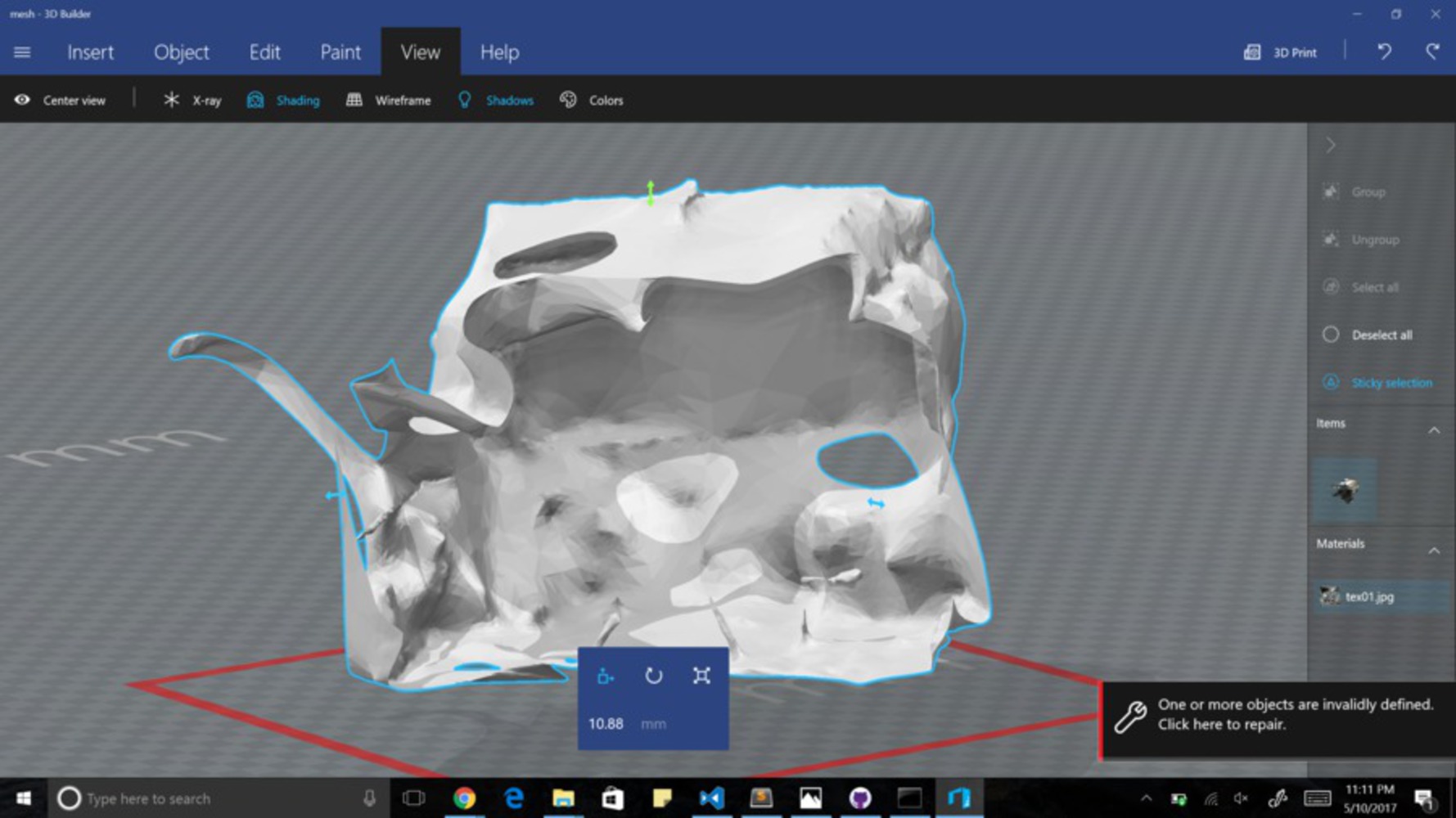

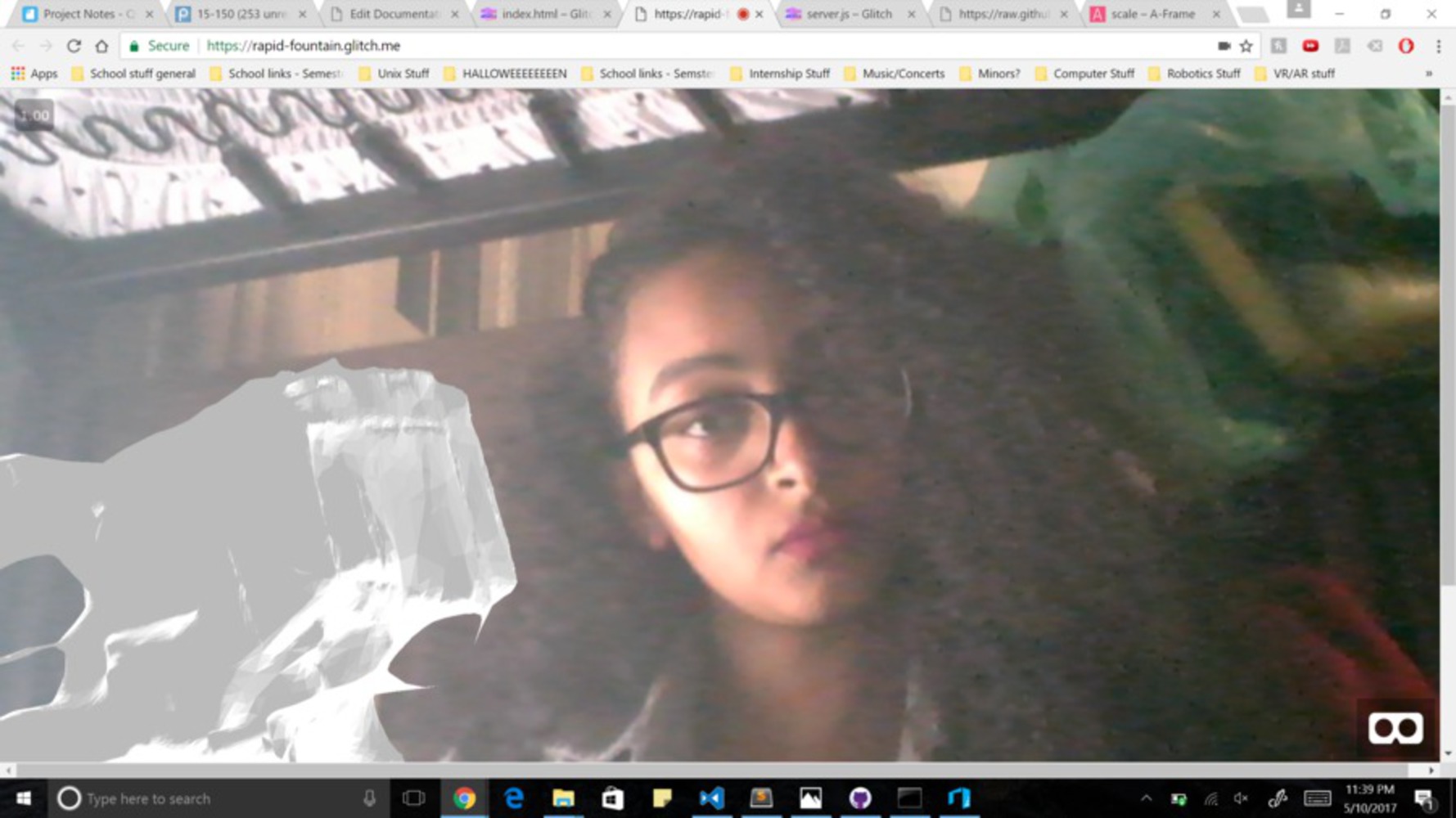

Creating a Rendering of the Room: Because efficient, accurate, readily available object recognition does not currently exist, I have to recreate the room in an online rendering so that I can manually annotate the objects in a different language (and display these annotations in Augmented Reality). Technology for creating an accurate room rendering is not readily available either, so I tried photogrammetry with ReCap/ReMake. I used a Ricoh camera to take panoramic pictures of the room, used my own code to convert those panoramic pictures into 6 side-view pictures, and fed those pictures into ReCap/ReMake to try to produce a rendering of the room.
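To illustrate the panorama-to-side-views step, here is a minimal Python sketch. It is not the project's actual code. It assumes the panoramas are equirectangular images and that the 6 side views are the faces of a cube (front, right, back, left, up, down); the function names, the axis convention, and the nearest-pixel sampling are all illustrative choices.

```python
import math

# Each cube face maps (a, b) in [-1, 1] (a = right, b = up on that face)
# to a viewing direction (x, y, z), with +y up and "front" looking along +z.
# This axis convention is an assumption made for the sketch.
FACE_DIRECTIONS = {
    "front": lambda a, b: (a, b, 1.0),
    "right": lambda a, b: (1.0, b, -a),
    "back": lambda a, b: (-a, b, -1.0),
    "left": lambda a, b: (-1.0, b, a),
    "up": lambda a, b: (a, 1.0, -b),
    "down": lambda a, b: (a, -1.0, b),
}


def direction_to_equirect(x, y, z, pano_w, pano_h):
    """Project a viewing direction onto (u, v) pixel coordinates of the panorama."""
    lon = math.atan2(x, z)                 # -pi..pi, 0 = straight ahead
    lat = math.atan2(y, math.hypot(x, z))  # -pi/2..pi/2, 0 = horizon
    u = (lon / (2 * math.pi) + 0.5) * pano_w
    v = (0.5 - lat / math.pi) * pano_h
    return u, v


def build_face_lookup(face, size, pano_w, pano_h):
    """For each pixel of a size x size face, find the nearest panorama pixel."""
    to_direction = FACE_DIRECTIONS[face]
    table = []
    for row in range(size):
        line = []
        for col in range(size):
            # Pixel centre in face coordinates; row 0 is the top of the face.
            a = 2.0 * (col + 0.5) / size - 1.0
            b = 1.0 - 2.0 * (row + 0.5) / size
            u, v = direction_to_equirect(*to_direction(a, b), pano_w, pano_h)
            px = int(u) % pano_w                  # wrap around the seam
            py = min(max(int(v), 0), pano_h - 1)  # clamp at the poles
            line.append((px, py))
        table.append(line)
    return table
```

To produce an actual side-view picture, each face pixel at `(row, col)` would be filled with the panorama pixel stored at `table[row][col]`, using whatever image library is at hand. Nearest-pixel sampling keeps the sketch short; interpolating between neighbouring pixels would likely give smoother input for photogrammetry.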

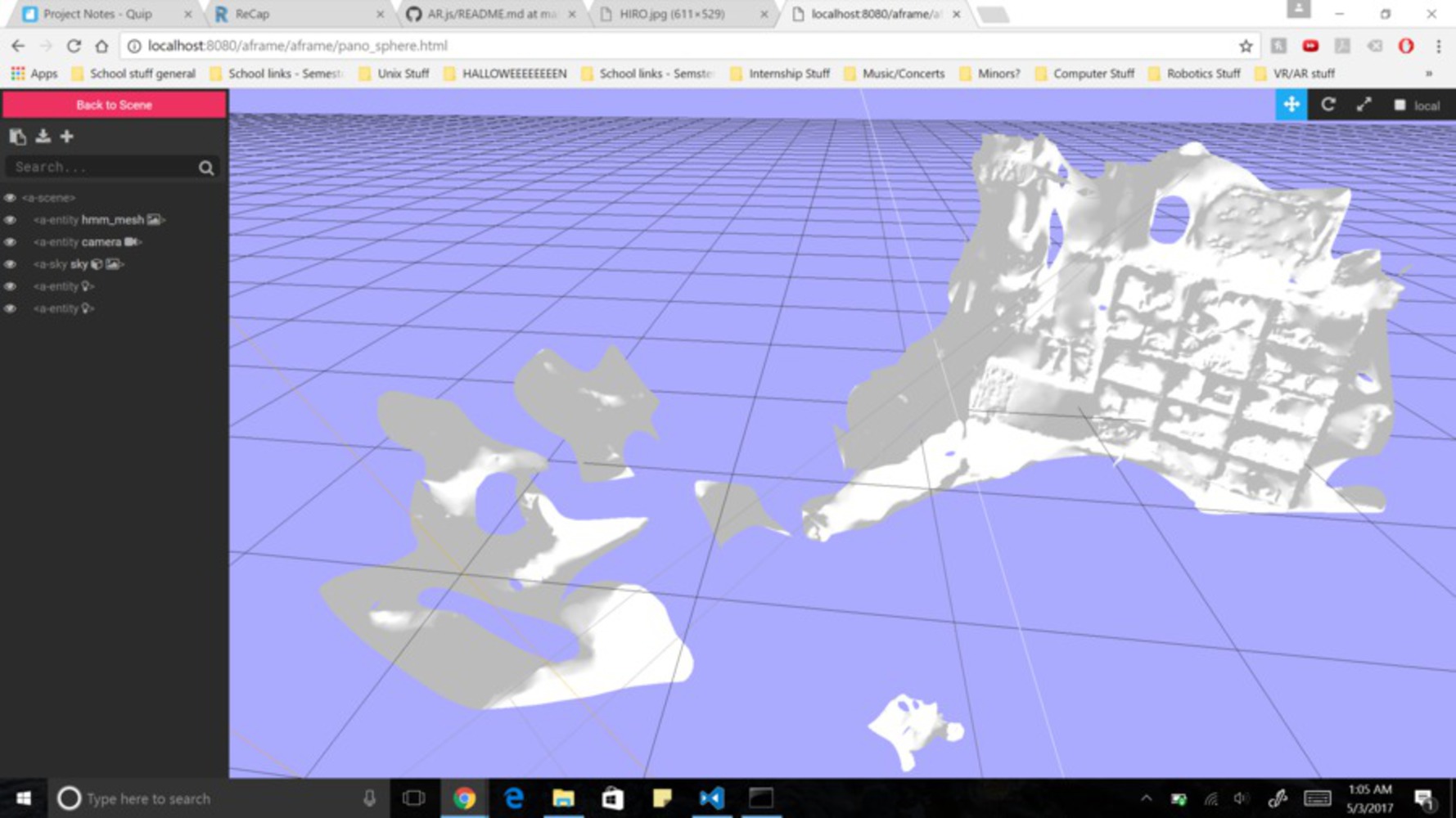

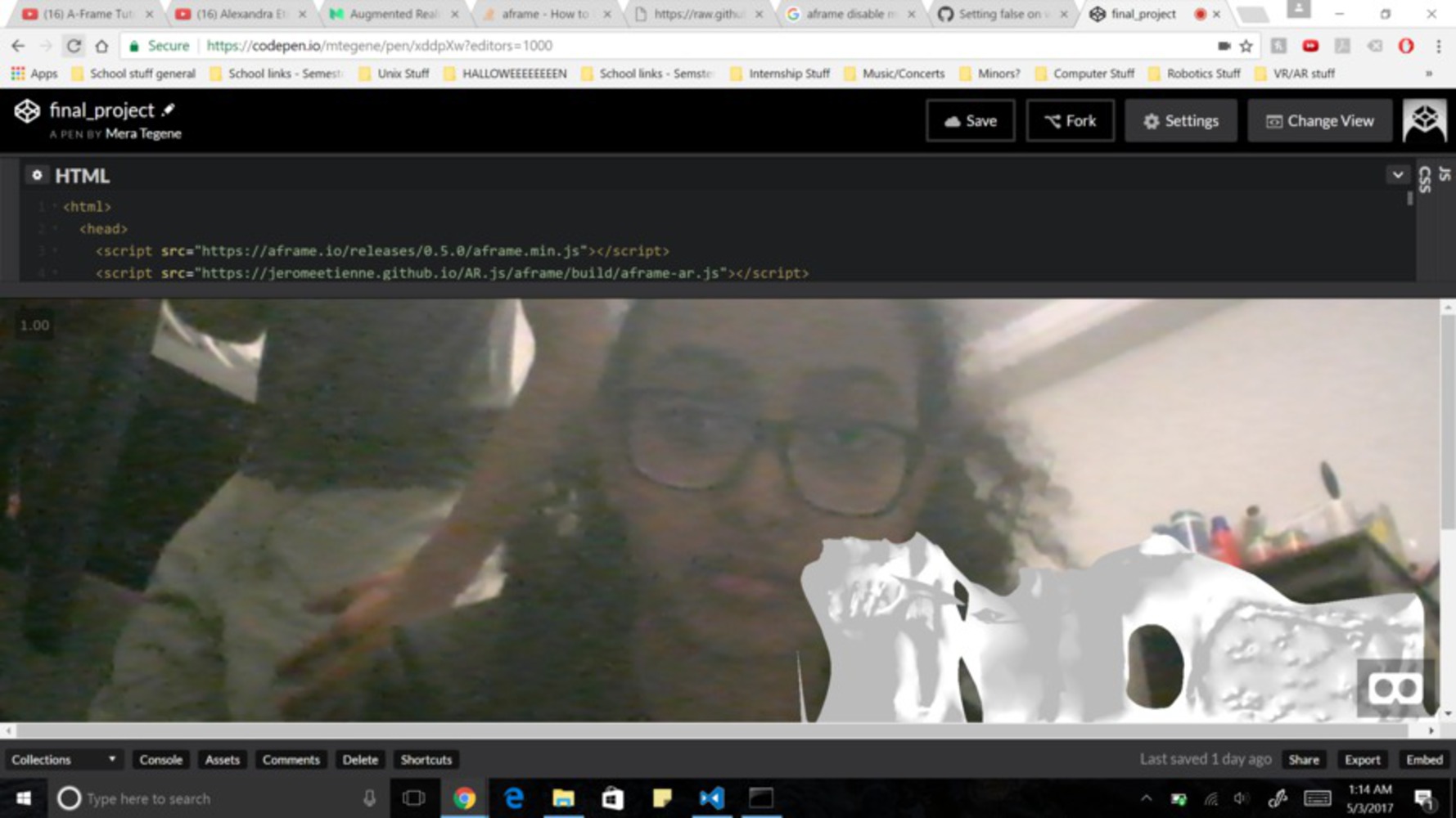

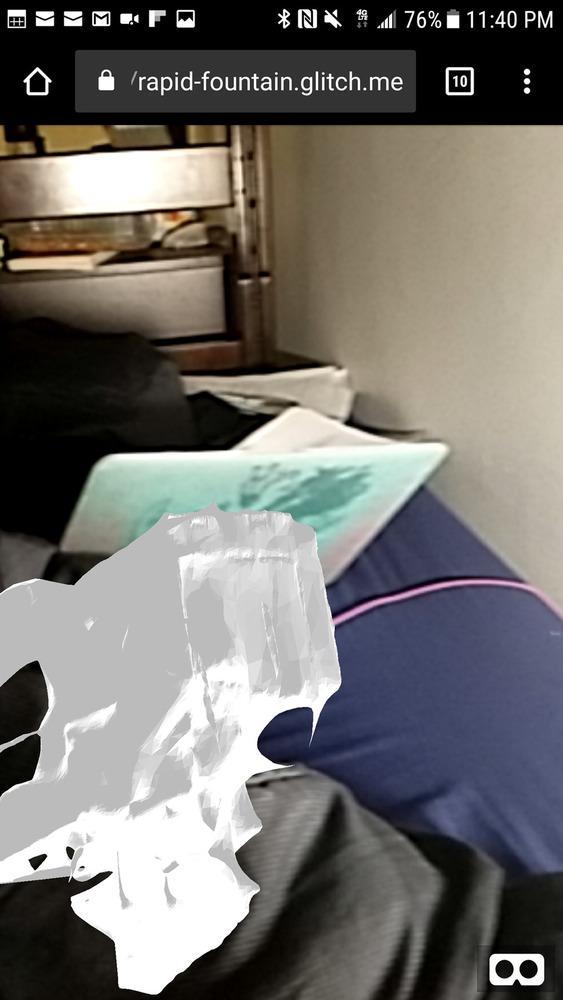

Displaying renderings in AR: I used AR.js and A-Frame to display the rendering in Augmented Reality (AR). I hosted everything on Glitch because I use WebSockets to communicate with the Vive Tracker, which should be attached to the mobile device displaying everything. I need the Vive Tracker because mobile devices do not have location tracking that is accurate enough, and easy enough to use, for me to figure out how the device is moving. With the Vive Tracker, I know where the mobile device is in the room, which tells me which portion of the rendering I should be displaying (that's what the mover code does).
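The idea behind the mover code can be sketched roughly as follows. This is an illustration in Python, not the project's actual code, which runs in the browser with AR.js and A-Frame. The JSON message format (`x`, `y`, `z` fields), the `Calibration` class, and both function names are assumptions made up for the example, and tracker orientation is left out entirely.

```python
import json
from dataclasses import dataclass


@dataclass
class Calibration:
    """Hypothetical calibration between tracker space and the rendering."""
    origin: tuple  # tracker reading at the spot that maps to the scene origin
    scale: float   # scene units per tracker unit


def tracker_message_to_scene_position(message, calib):
    """Turn one tracker message (assumed JSON with x, y, z) into scene coordinates."""
    pose = json.loads(message)
    tracker = (pose["x"], pose["y"], pose["z"])
    return tuple((t - o) * calib.scale for t, o in zip(tracker, calib.origin))


def rendering_offset_for_device(scene_position):
    """Shift the rendering opposite to the device so it appears fixed in the room."""
    return tuple(-c for c in scene_position)


# Example: the device has moved 1 unit along x and -1 unit along z
# from the calibrated origin, with 2 scene units per tracker unit.
calib = Calibration(origin=(0.5, 1.0, 0.5), scale=2.0)
position = tracker_message_to_scene_position('{"x": 1.5, "y": 1.0, "z": -0.5}', calib)
offset = rendering_offset_for_device(position)
```

In a setup like this, each incoming WebSocket message would be run through the first function, and the result used either to move the virtual camera or, via the second function, to move the rendering in the opposite direction.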