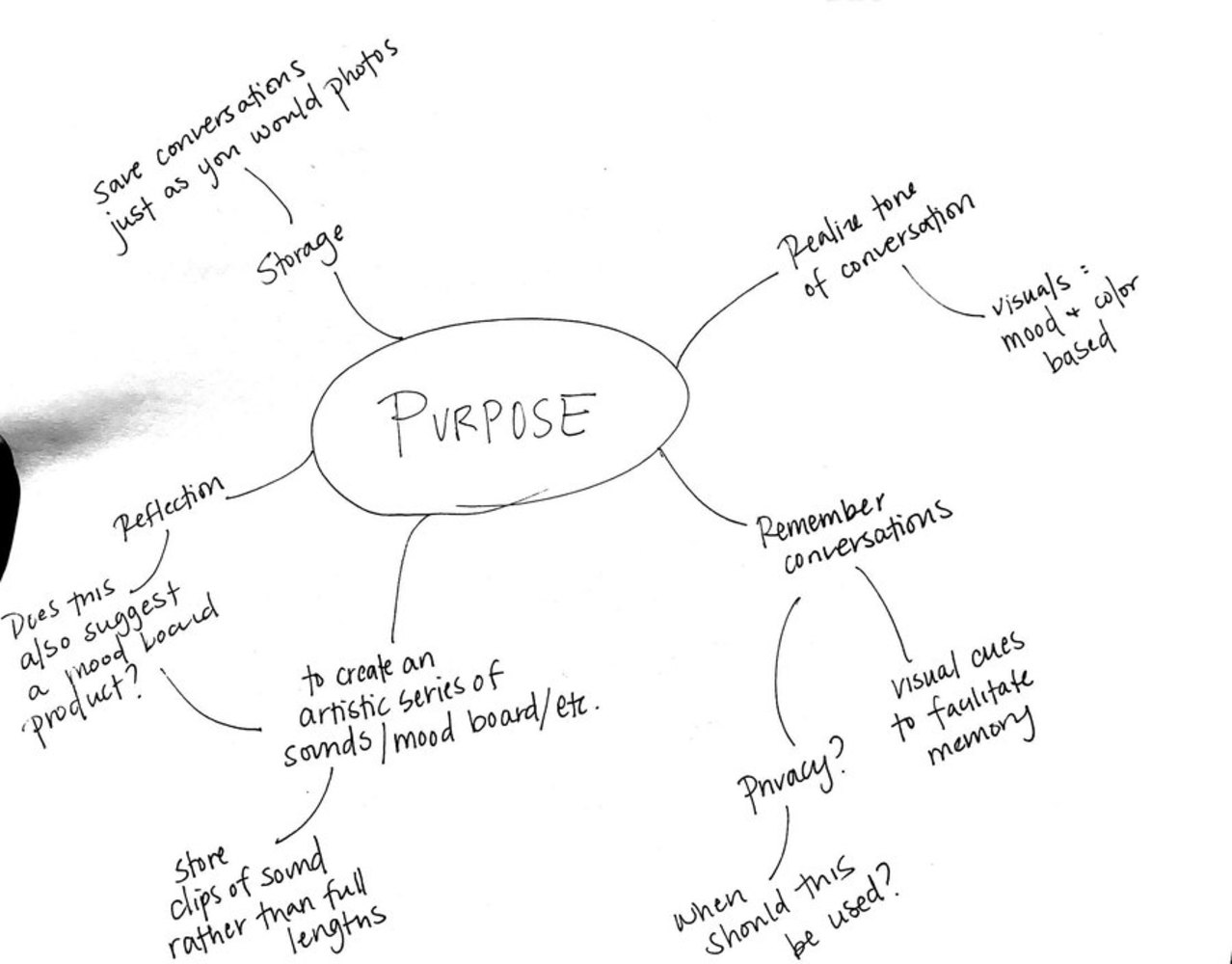

Intention

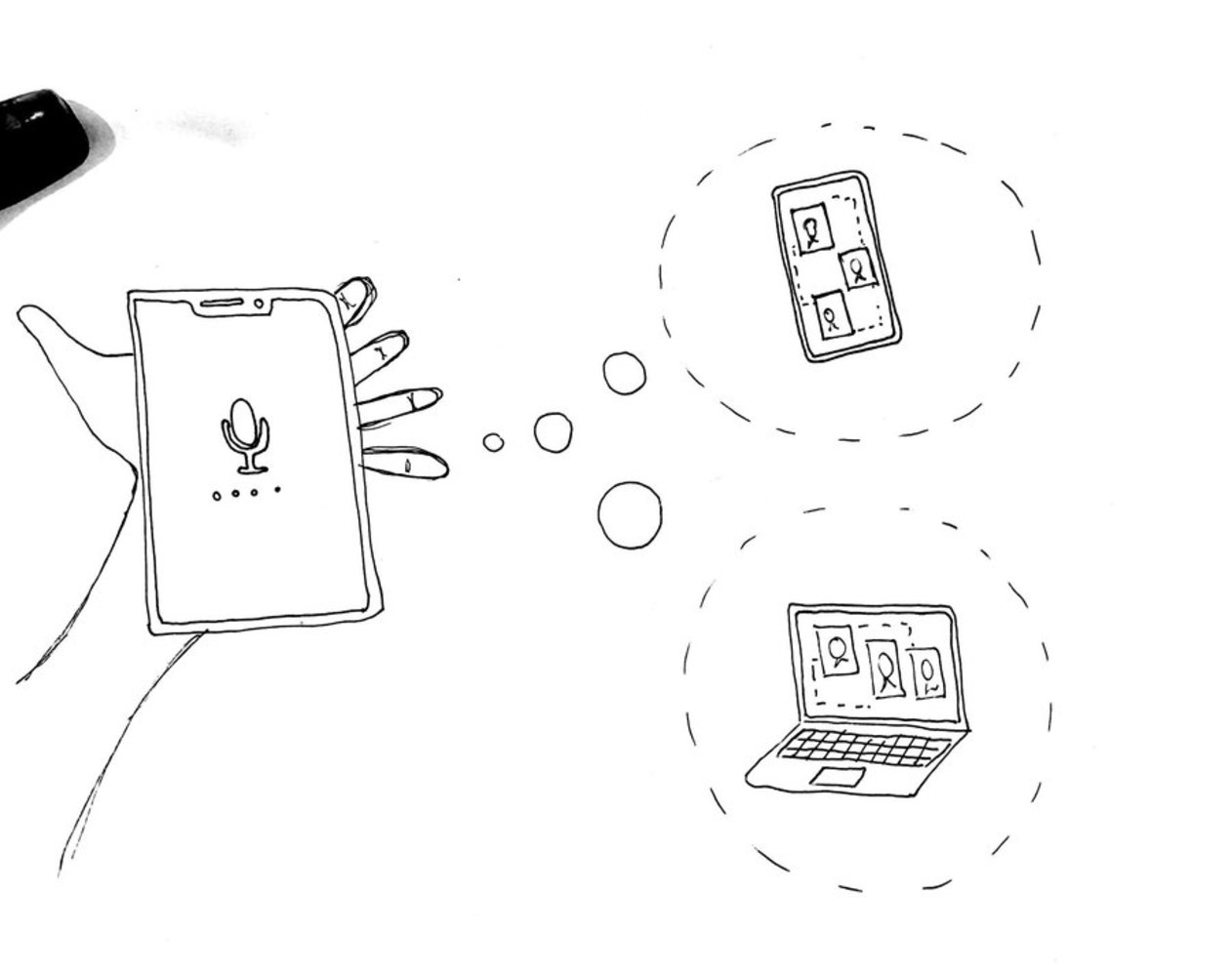

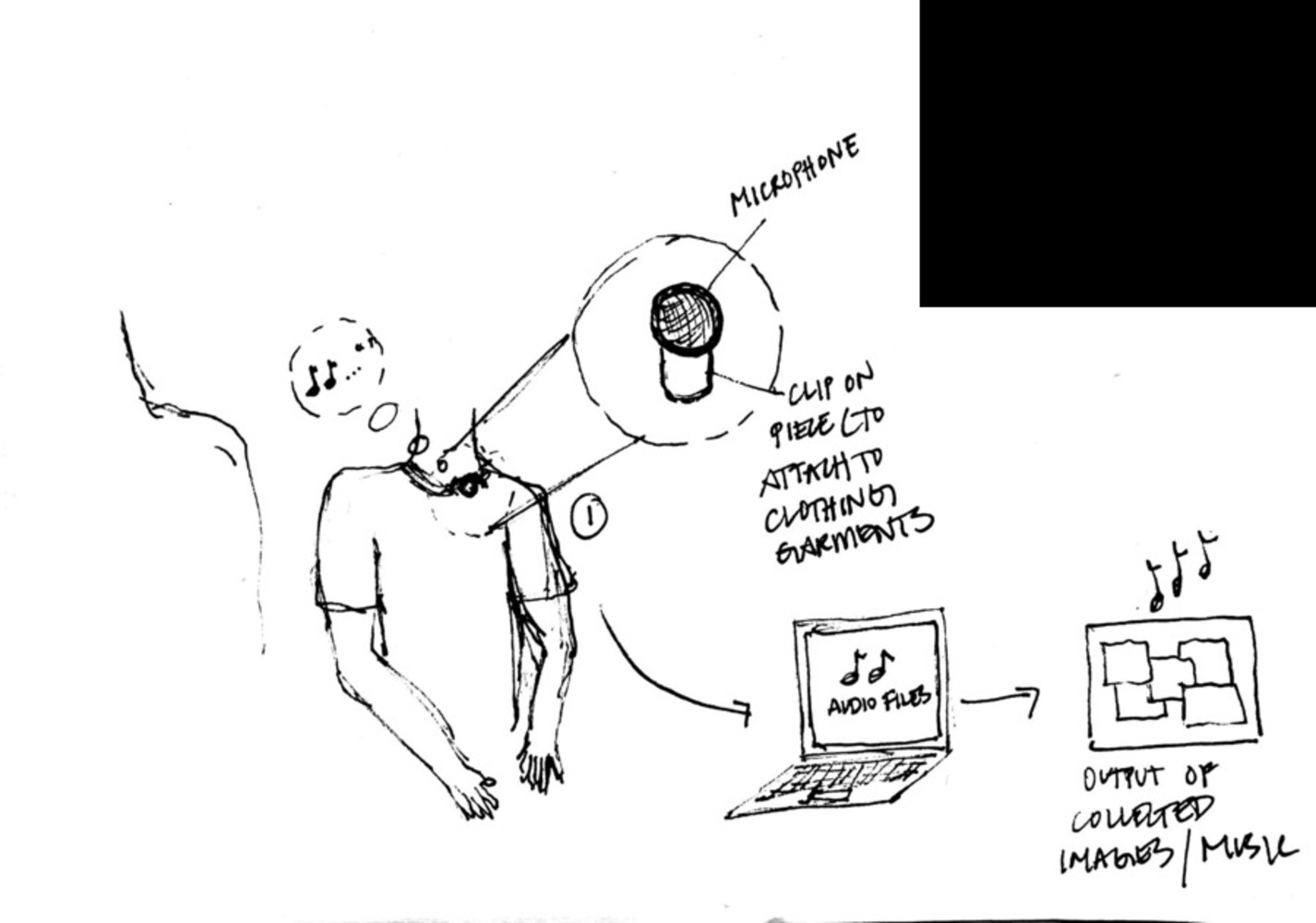

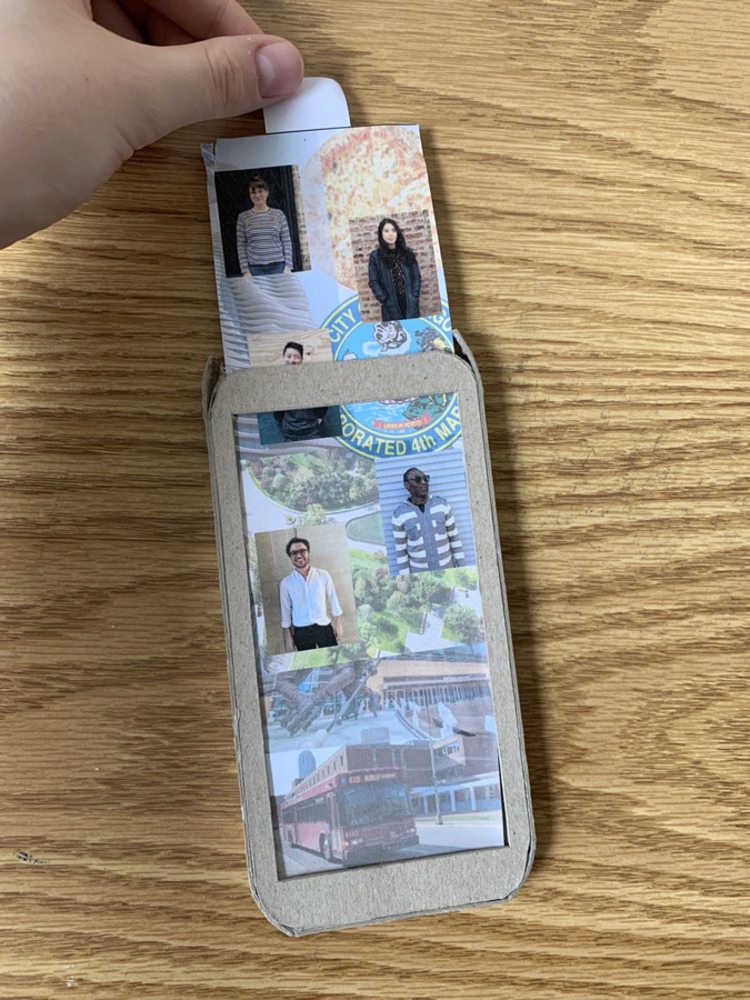

Hearing is the weakest of our senses when it comes to recalling memories, giving new meaning to the phrase “in one ear and out the other.” Because the brain is poorly equipped to store auditory information, meaningful conversations can be lost forever. In response, this proposal explores ways of saving that auditory information: when an individual records a conversation, using a phone recorder or a microphone with storage, a page is produced based on the content and speakers of that conversation. The page combines images of the participating speakers with photos collected from the internet on topics relevant to the conversation, overlaying the two image types to establish a hierarchy between the elements of the conversation.