Prototype

Exhibit Design

For the exhibition, we decided to build a story showing the development, release, and recall of the Aura Carbon. Because the story unfolds over time, we used multiple touchpoints, each focusing on a different element of the story. We also incorporated a timeline so that viewers could easily see the story in its entirety.

Designing/testing the exhibit space

Aura Carbon Prototypes

We prototyped 3 versions of the Aura Carbon for our exhibition to help support the story we were telling.

(1) A model to represent a first prototype of the Aura Carbon that was built by the Aura engineers.

The purpose of this model was to support the development phase of the Aura Carbon. We made this prototype look distinctly different from the released version of the Aura Carbon. The contrast makes the released version look real and finished.

(2) A model to represent the first publicly released version of the Aura Carbon.

The purpose of this model was to support the release portion of the story. This prototype was used for the interactive, conversational element of our exhibit design. Because it was supposed to represent a commercially released product, we 3D-printed it and sanded it smooth.

Making the Aura Carbon Model

(3) A model to represent a hacked version of the Aura Carbon that was used in the financial fraud scandal in our timeline story.

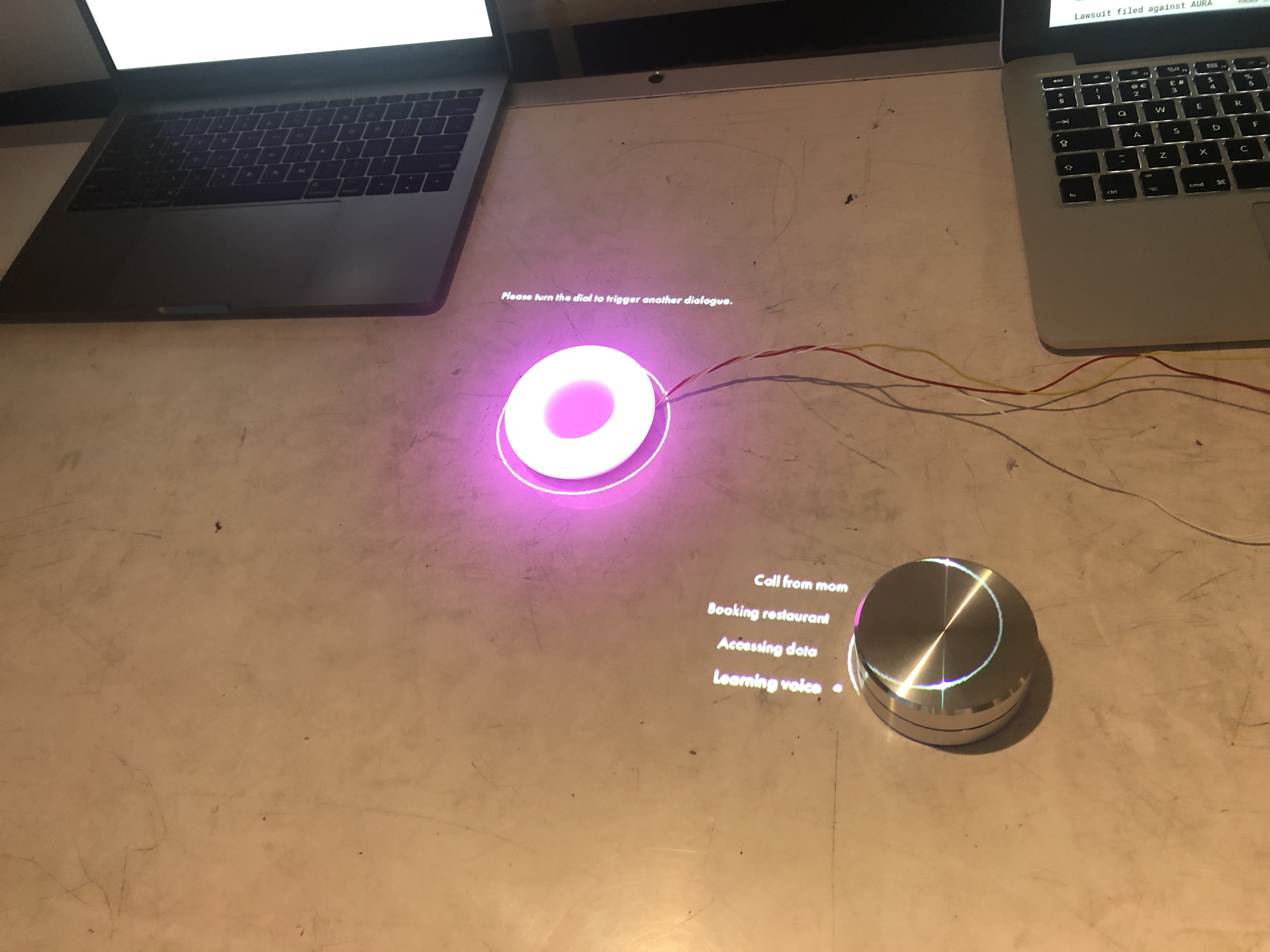

The purpose of this model was to support the hacking scandal portion of our story. We made the prototype look like someone had tried to crack it open by making a finished version and breaking into it ourselves. We also added details such as wires coming out of it to imply that it had been hacked.

Physical Computing Prototype

For the final product, we added lighting effects to communicate three states of the device: listening, speaking, and rest. We used a Particle Photon and a NeoPixel 24 to prototype the LED lighting effects. The listening state is a spinning ring; the speaking state changes color randomly between blue and purple, matching Aura's branding; the rest state is a slow breathing effect that also transitions from blue to purple.
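The three lighting states can be sketched as functions that each return one frame of 24 RGB values. This is a minimal, language-neutral sketch in Python, not the firmware that ran on the Photon: the color values, tail length, and breathing period are assumptions, and the function names are hypothetical.

```python
import math
import random

NUM_PIXELS = 24           # from "NeoPixel 24" in the text
BLUE = (0, 80, 255)       # assumed brand colors; the real values are not given
PURPLE = (150, 0, 255)

def lerp_color(a, b, t):
    """Blend two RGB colors; t=0 gives a, t=1 gives b."""
    return tuple(round(x + (y - x) * t) for x, y in zip(a, b))

def scale(color, brightness):
    """Dim a color by a brightness factor between 0 and 1."""
    return tuple(round(c * brightness) for c in color)

def listening_frame(step, tail=6):
    """Spinning ring: a bright head pixel followed by a fading tail."""
    frame = [(0, 0, 0)] * NUM_PIXELS
    head = step % NUM_PIXELS
    for i in range(tail):
        frame[(head - i) % NUM_PIXELS] = scale(BLUE, 1 - i / tail)
    return frame

def speaking_frame(rng):
    """The whole ring shows one randomly chosen color between blue and purple."""
    return [lerp_color(BLUE, PURPLE, rng.random())] * NUM_PIXELS

def rest_frame(t, period=4.0):
    """Slow breathing: brightness and hue rise and fall once per period (seconds)."""
    phase = 0.5 - 0.5 * math.cos(2 * math.pi * t / period)  # goes 0, 1, 0
    color = lerp_color(BLUE, PURPLE, phase)
    return [scale(color, 0.1 + 0.9 * phase)] * NUM_PIXELS

# Usage: advance `step` or `t` on every animation tick and write the frame to the LEDs.
frame = speaking_frame(random.Random())
```

Keeping each state as a pure function of time makes the effects easy to preview on a laptop before porting them to the microcontroller.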

Conversational Interaction

For the conversational interface, we decided to pre-record a few key scenarios rather than prototype a functional voice recognition system, because it is hard to anticipate how audiences would interact with our device and we expected the exhibition to be too noisy for real voice recognition. By allowing audiences to trigger each scenario individually, we could still demonstrate all the key contexts in which the device would be used.

We then finalized four key scenarios: onboarding for voice mimicking, accessing a user's personal data, reserving a restaurant, and answering a call from the user's mom. We wrote a script for each dialogue and recorded audio for each.

We also wanted to build a visual interface that helps audiences follow the conversation and understand the roles in each dialogue. To that end, we built a Processing prototype that displays captions along with the recorded audio. The Processing code reads audio files paired with text files; the text files contain the scripts, the roles, and the timing of every line of dialogue. When an audio file plays, the matching caption is displayed at the same time.
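The caption lookup can be sketched as follows. This is an illustration in Python rather than the Processing code itself, and it assumes a text-file format of one `start_seconds|role|text` entry per line; the actual file format is not described here, and the sample dialogue is invented for the example.

```python
from bisect import bisect_right
from typing import List, NamedTuple, Optional

class Caption(NamedTuple):
    start: float  # seconds from the start of the audio file
    role: str     # who is speaking
    text: str     # the line shown on screen

def parse_captions(script: str) -> List[Caption]:
    """Parse lines of the assumed form 'start_seconds|role|text'."""
    captions = []
    for line in script.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        start, role, text = line.split("|", 2)
        captions.append(Caption(float(start), role.strip(), text.strip()))
    return sorted(captions)  # sorted by start time

def caption_at(captions: List[Caption], playhead: float) -> Optional[Caption]:
    """Return the caption whose start time most recently passed, or None."""
    starts = [c.start for c in captions]
    index = bisect_right(starts, playhead) - 1
    return captions[index] if index >= 0 else None

# Invented sample script, for illustration only.
SCRIPT = """\
0.0|Aura|Hi, I'm Aura. Read a sentence so I can learn your voice.
3.5|User|The quick brown fox jumps over the lazy dog.
7.0|Aura|Thanks. I can now speak on your behalf.
"""

captions = parse_captions(SCRIPT)
current = caption_at(captions, 4.0)  # the "User" line is active at 4 seconds
```

On every drawn frame, the display would call `caption_at` with the audio player's current position and render the returned role and text.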

To let audiences interact with the dialogue easily, we used a physical dial to trigger it. Daragh lent us a PowerMate dial, and we coded it to trigger the different dialogue contexts.
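One way to map dial input to the four scenarios is sketched below. It assumes the dial reports relative rotation ticks and a press event, and that rotating highlights a scenario while pressing triggers it; the class name, the `ticks_per_step` value, and this interaction model are all hypothetical, since the text does not say how the dial was mapped.

```python
SCENARIOS = [
    "voice mimicking onboarding",
    "personal data access",
    "restaurant reservation",
    "call from mom",
]

class DialSelector:
    """Turns accumulated dial rotation into a highlighted scenario."""

    def __init__(self, scenarios, ticks_per_step=8):
        self.scenarios = scenarios
        self.ticks_per_step = ticks_per_step  # assumed sensitivity
        self.ticks = 0

    def rotate(self, delta):
        """Add rotation ticks (positive is clockwise); return the highlighted scenario."""
        self.ticks += delta
        return self.current()

    def current(self):
        # Floor division plus modulo wraps around in both directions.
        index = (self.ticks // self.ticks_per_step) % len(self.scenarios)
        return self.scenarios[index]

    def press(self):
        """Pressing the dial triggers the highlighted scenario."""
        return self.current()

dial = DialSelector(SCENARIOS)
dial.rotate(8)           # one step clockwise
selected = dial.press()  # "personal data access"
```

Requiring several ticks per step keeps small accidental movements from switching scenarios.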

Finally, we used projection mapping to project images onto the table from above, so that the image aligns with the physical objects as if it were an extension of them. The Processing code also communicates with the Particle Photon in real time, so it can trigger the different lighting modes while a dialogue plays.
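The link between dialogue playback and lighting can be sketched as a small state mapper. The text does not say how the two devices communicated or which role maps to which lighting mode, so the `mode:<name>` message format, the role names, and the role-to-mode mapping here are assumptions; `send` stands in for whatever transport was actually used.

```python
# Assumed mapping: the ring "speaks" when Aura talks and "listens" when the user talks.
MODE_FOR_ROLE = {"Aura": "speaking", "User": "listening"}

def lighting_mode(active_role):
    """Pick a lighting mode for the current speaker; no speaker means rest."""
    return MODE_FOR_ROLE.get(active_role, "rest")

class LightingLink:
    """Sends a mode message only when the mode changes, to avoid flooding the link."""

    def __init__(self, send):
        self.send = send  # any callable that accepts bytes
        self.last = None

    def update(self, active_role):
        mode = lighting_mode(active_role)
        if mode != self.last:
            self.send(f"mode:{mode}\n".encode("ascii"))
            self.last = mode
        return mode

# Usage: collect messages in a list instead of writing to a real device.
sent = []
link = LightingLink(sent.append)
for role in ["Aura", "Aura", "User", None]:
    link.update(role)
```

Calling `update` once per drawn frame with the role of the active caption keeps the lights in step with the audio without resending an unchanged mode.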

Timeline and Supporting Articles

Product Video

We developed a product video that was incorporated into the timeline to support the released Aura Carbon prototype. The video mimicked the product videos that tech companies currently release to promote their products. Its purpose was to show the tension between the intended use of the product and its misuse.