StudSeer

How Machine Learning is changing our relationship with our tools

At the outset of this project, I interviewed a friend who I knew had an interesting relationship with modern technology. While talking about social media ad placement and personalization, she mentioned that she would sometimes try to trick or train the algorithm with her behavior. She called it her personal algorithm, and when asked to visualize what it looked like, she said:

“When I think of it, it’s an old-school computer with an old monitor and a separate keyboard with mitten glove hands. It’s the size of the computer I had when I was a kid, although I’m sure it’s not as big as I thought it was.”

From these two characterizations of her algorithm, I became curious about how we relate to machine learning differently than we do to other physical or digital entities.

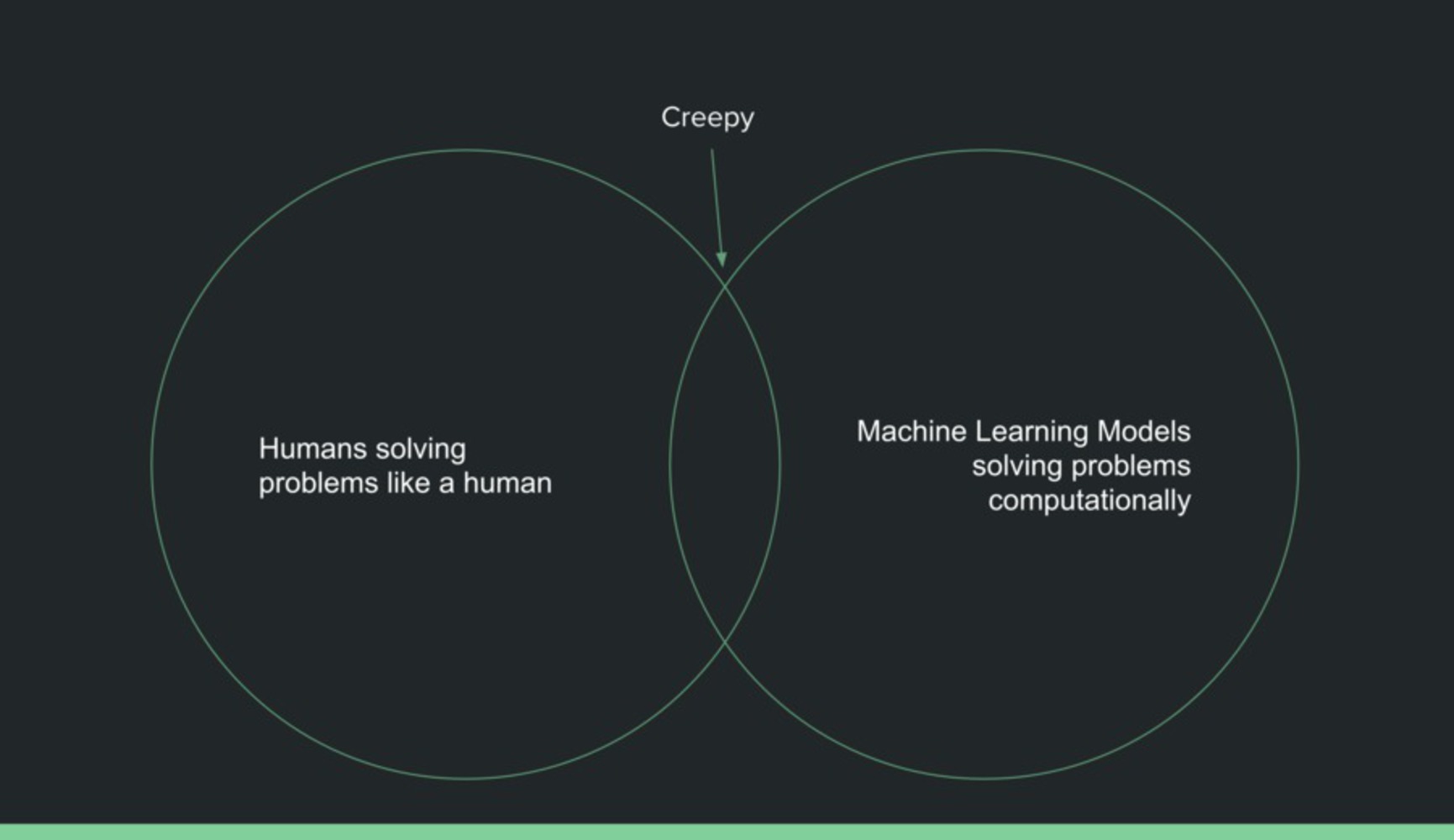

For this project, I wanted to explore what is spooky about our relationship with Machine Learning as it becomes a common facet of our daily lives.