Process

While developing our final design, we went through three main iterations:

// 1

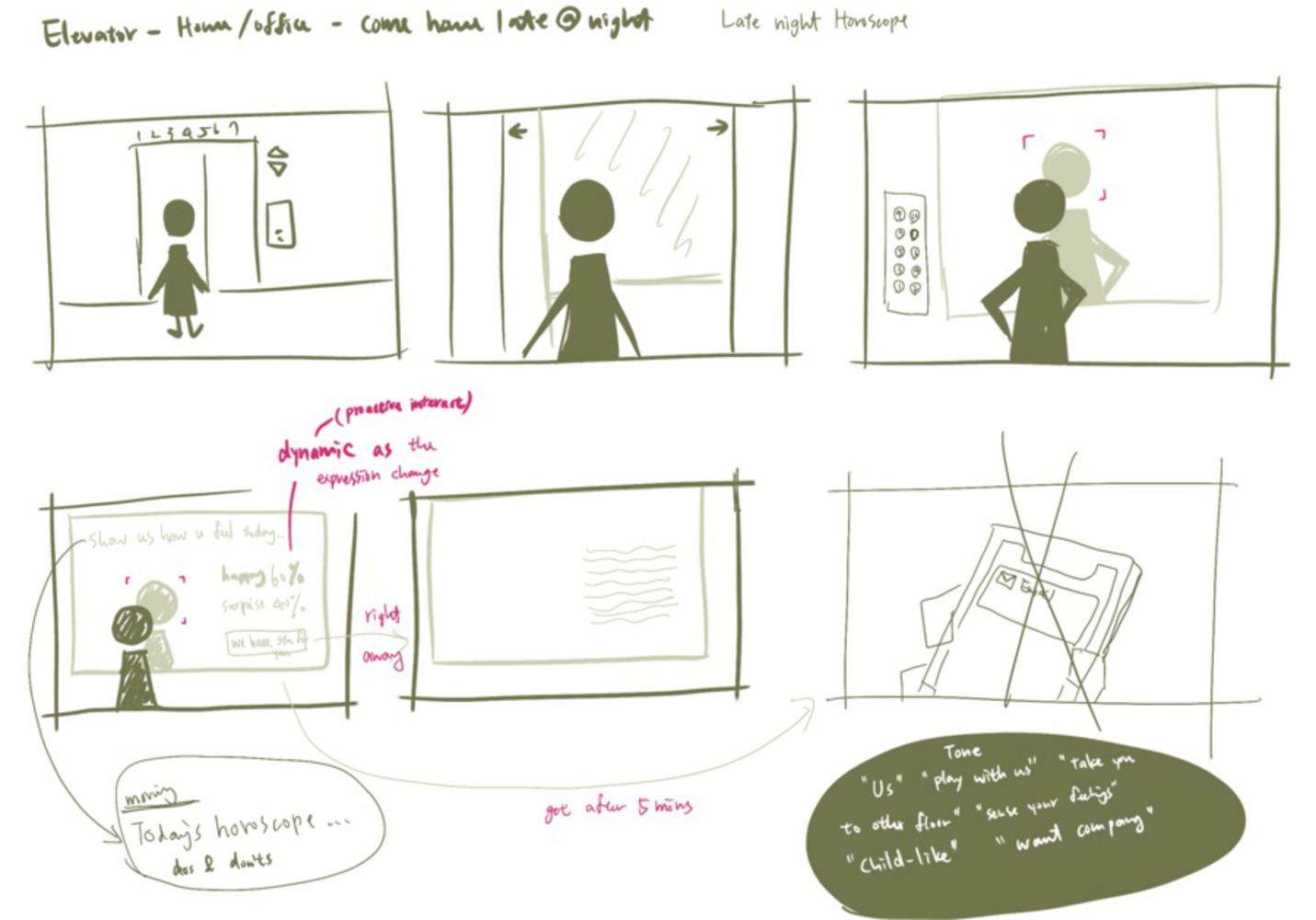

At the very beginning, we were inspired by how Ouija boards pick up people’s unconscious inputs and turn them into seemingly unexpected outputs. From this, we came up with two ideas centered on the concepts of “AI prediction and fortune-telling” and “generative diaries.”

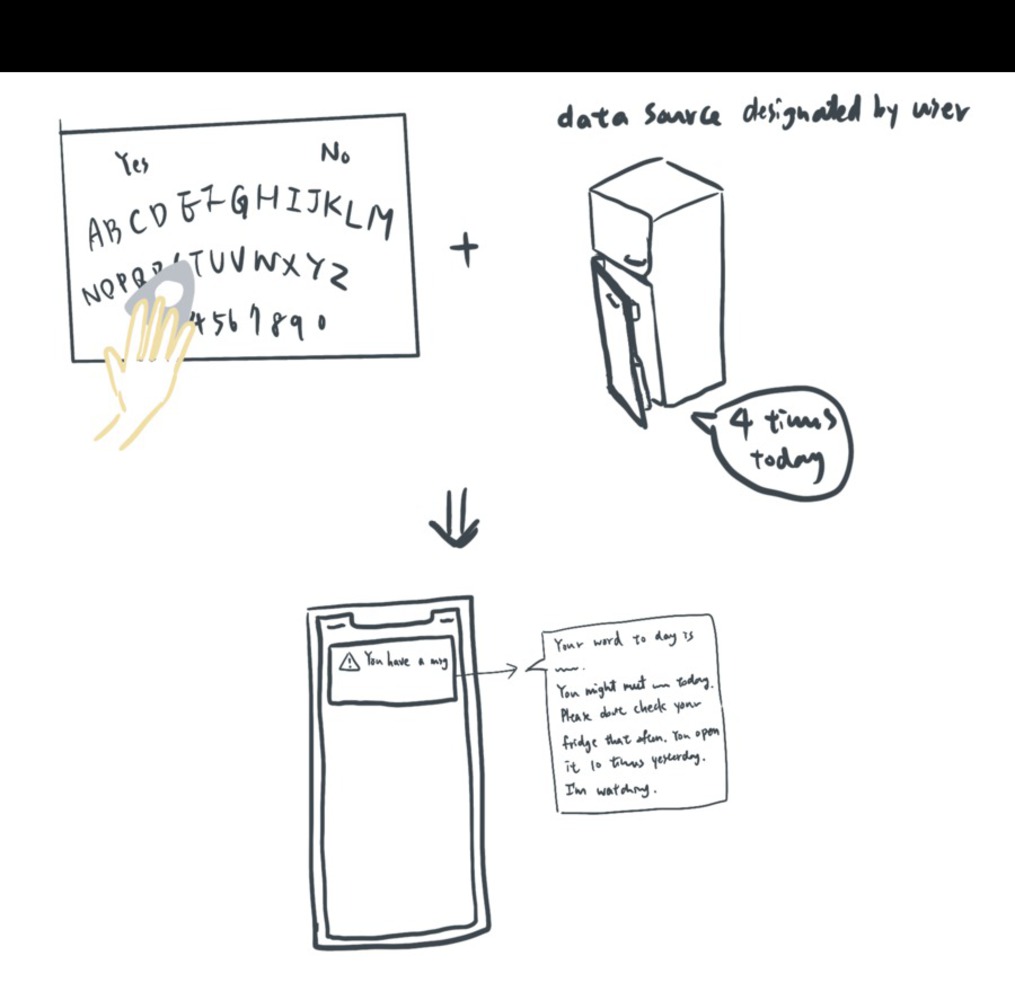

Idea 1: a Ouija board-like device that provides the user with daily notes or an agenda.

Using as its data source either information about the user’s surroundings collected through a webcam or a record of the user’s trivial interactions with other household products, the device would gradually form outputs that could become personal to an unsettling degree. The interaction would resemble using a Ouija board: placing one’s hands on the board and moving them around the sensing area would trigger the service to generate outputs based on the accumulated data.

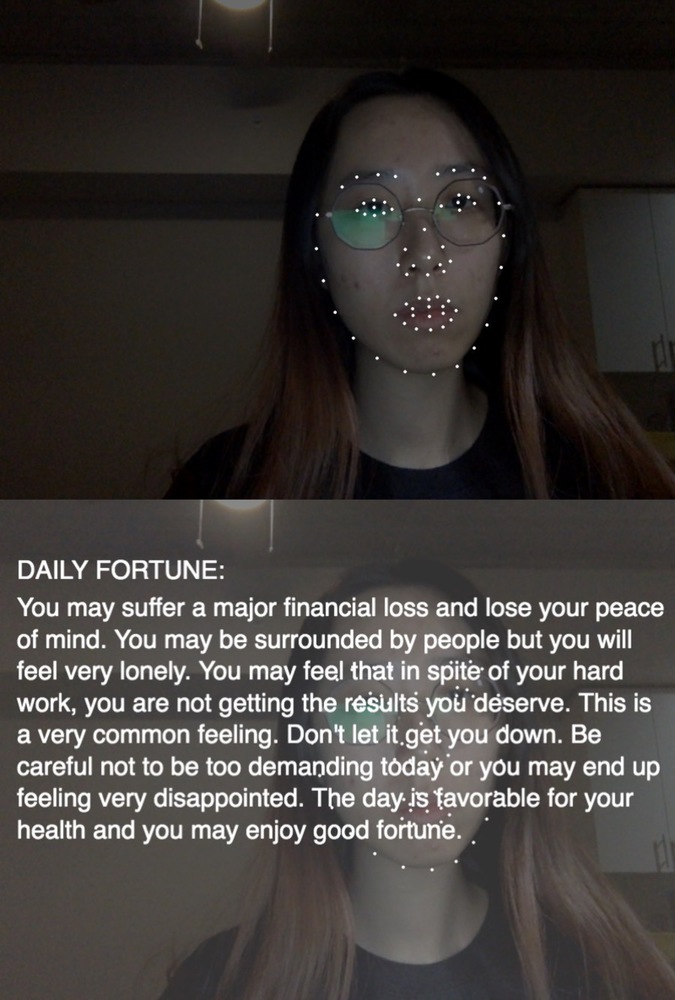

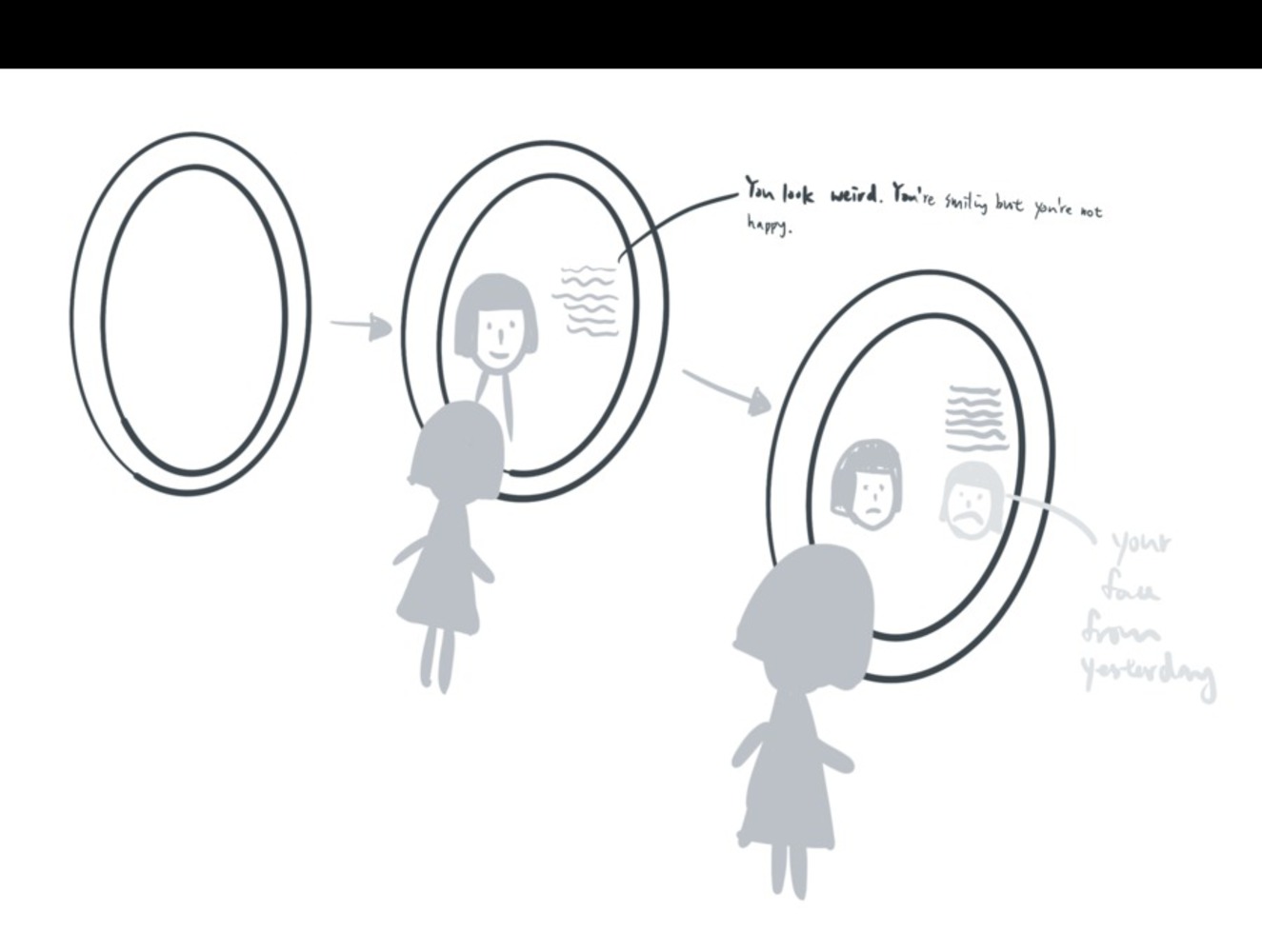

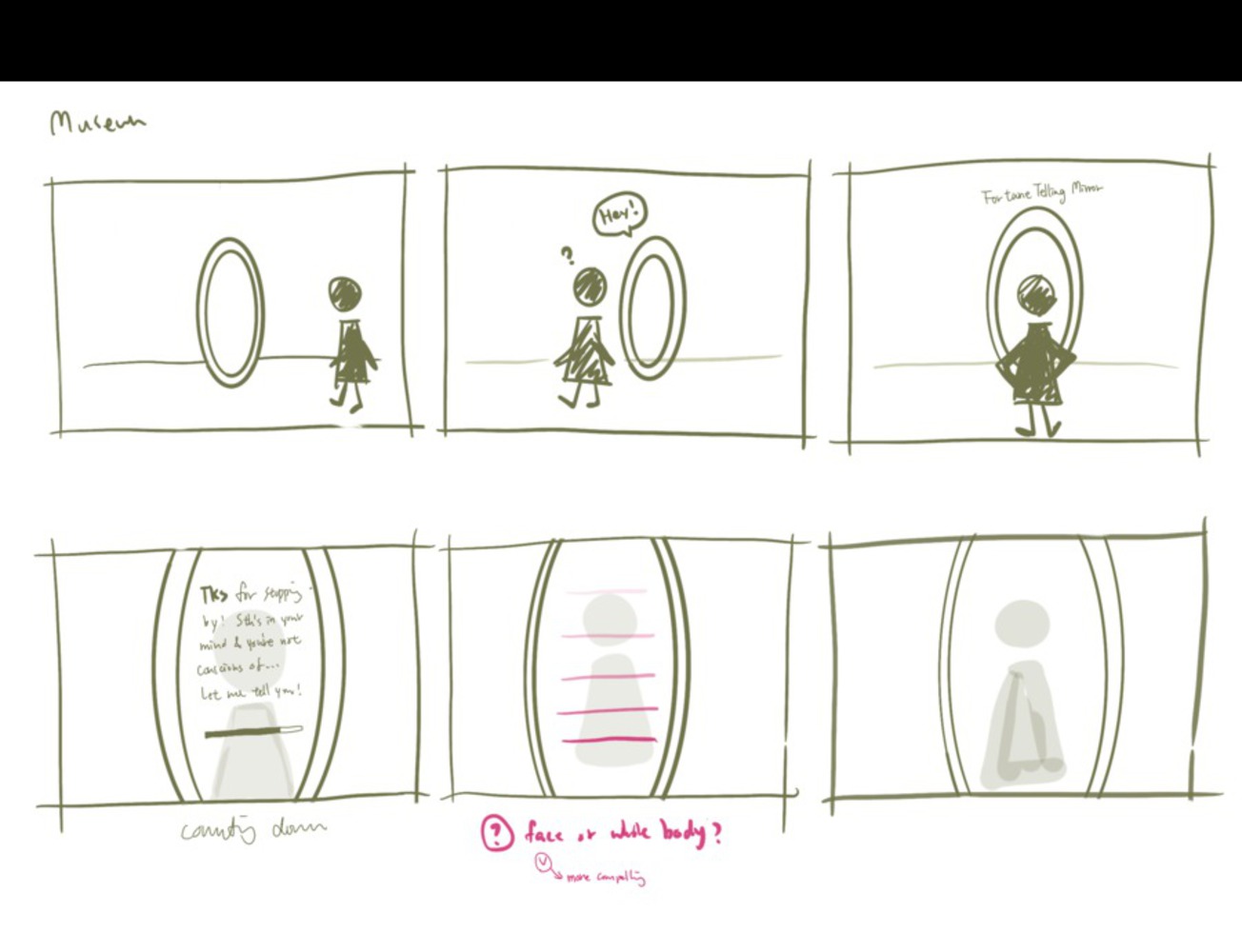

Idea 2: a mirror that uses machine learning to create diaries for the user.

The mirror would be activated when the user stands in front of the device, with their reflection serving as the input. Using technologies that can recognize and process this input (e.g., text recognition, image recognition), the mirror would generate a diary paragraph summarizing the user’s day. Besides recognizing the current reflection, the device would also store historical inputs (e.g., mirror “selfies” from yesterday or earlier days) to update the generated outputs.

Since both ideas involve generated text, we also want to explore ways of shaping the language of the outputs. Right now, we are thinking about using poem-like structures so that the output leaves room for users’ own interpretations.

Feedback & Iteration

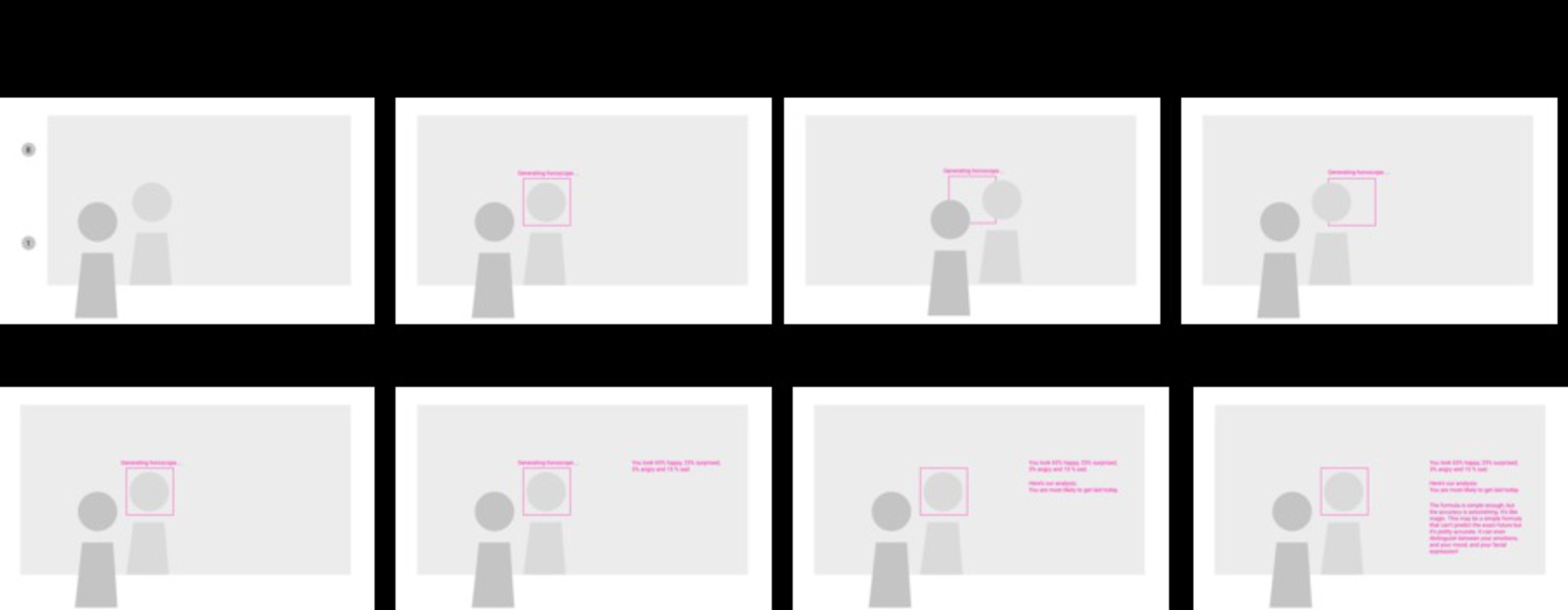

The main feedback we received from Daragh and Policarpo was that we needed to consider the time constraints and the technical complexity of building networked systems, and that we needed to strengthen the story and understand what kind of emotion we wanted to trigger. Based on this feedback, we decided that the input might not use data from other IoT devices, and that we would instead use an existing display, such as an iPad, to act as the Ouija board or the mirror.