Intention


The intent of this project is to raise awareness of our everyday interactions with intelligent voice assistants. By masking Alexa’s replies with voice-changing effects, which allude to the idea that devices could be emotional and embody different personalities, we encourage people to consider alternative interactions with voice assistants and to critique what we truly value in our devices.

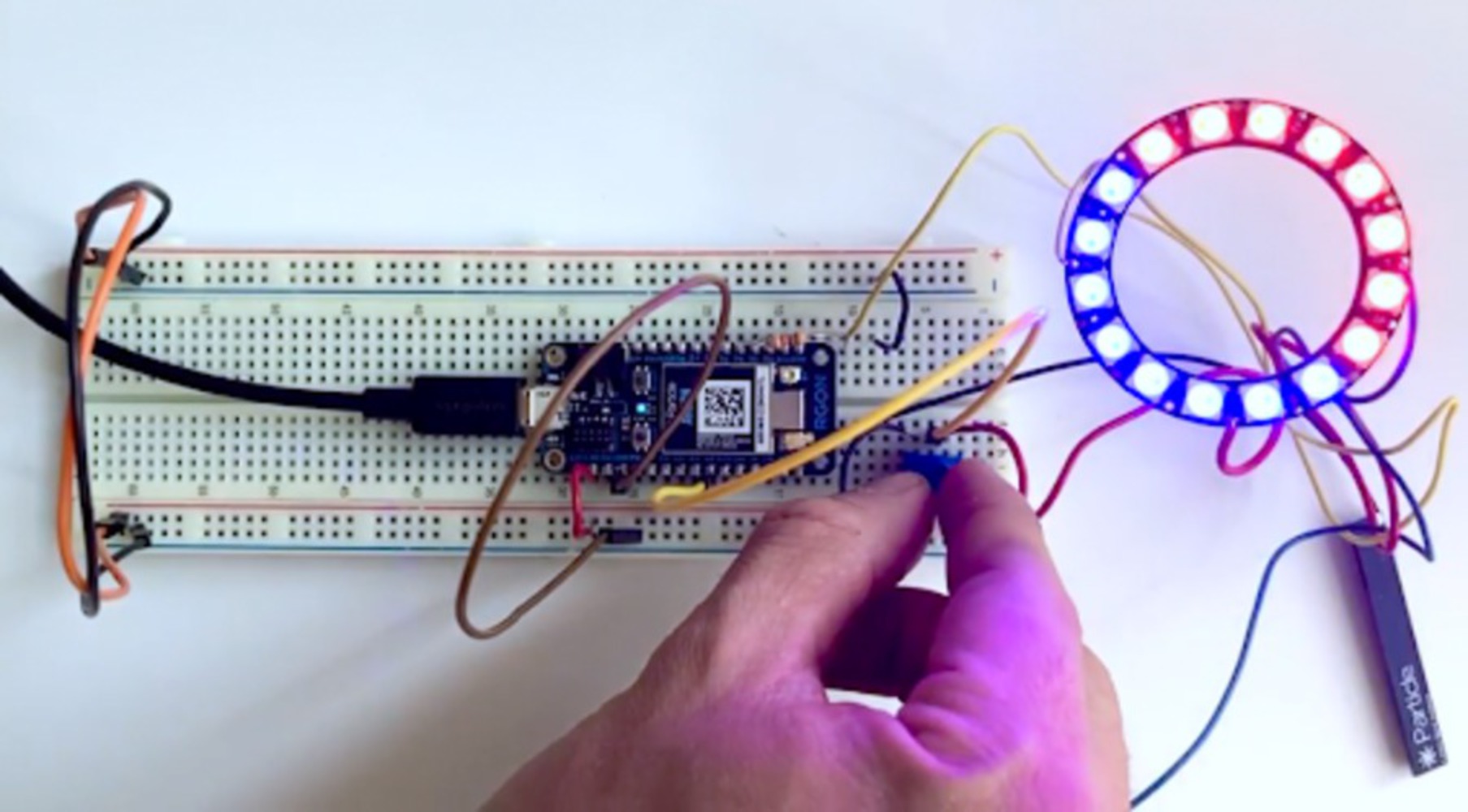

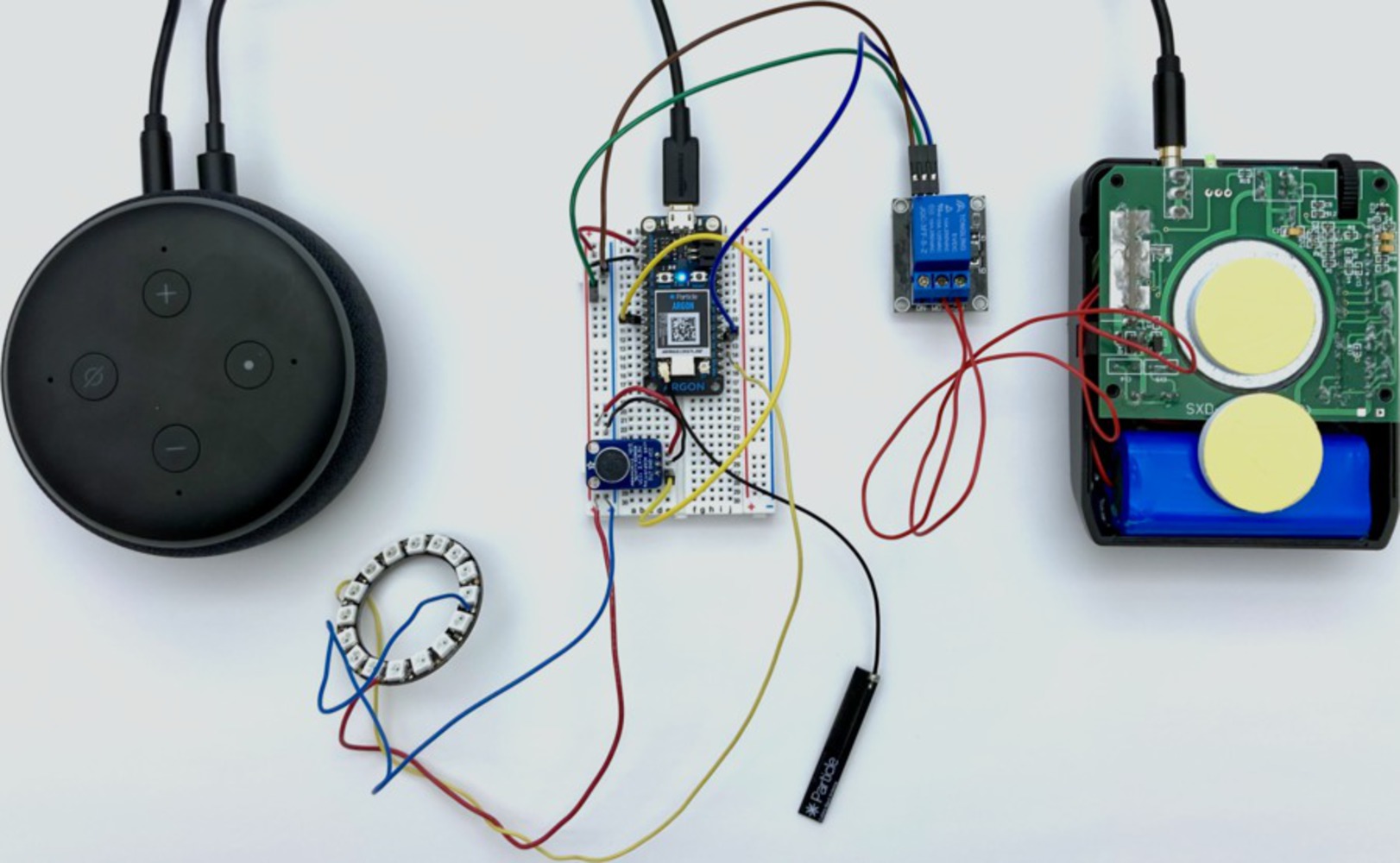

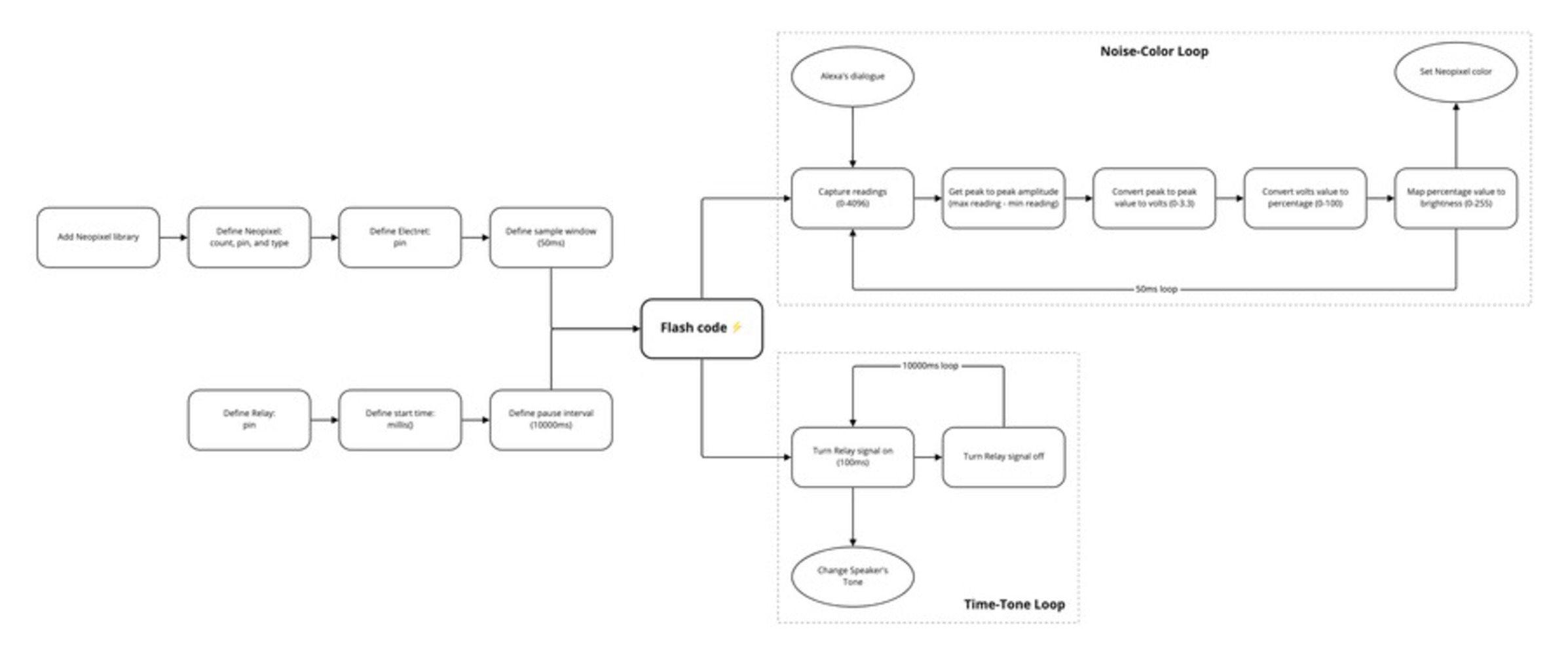

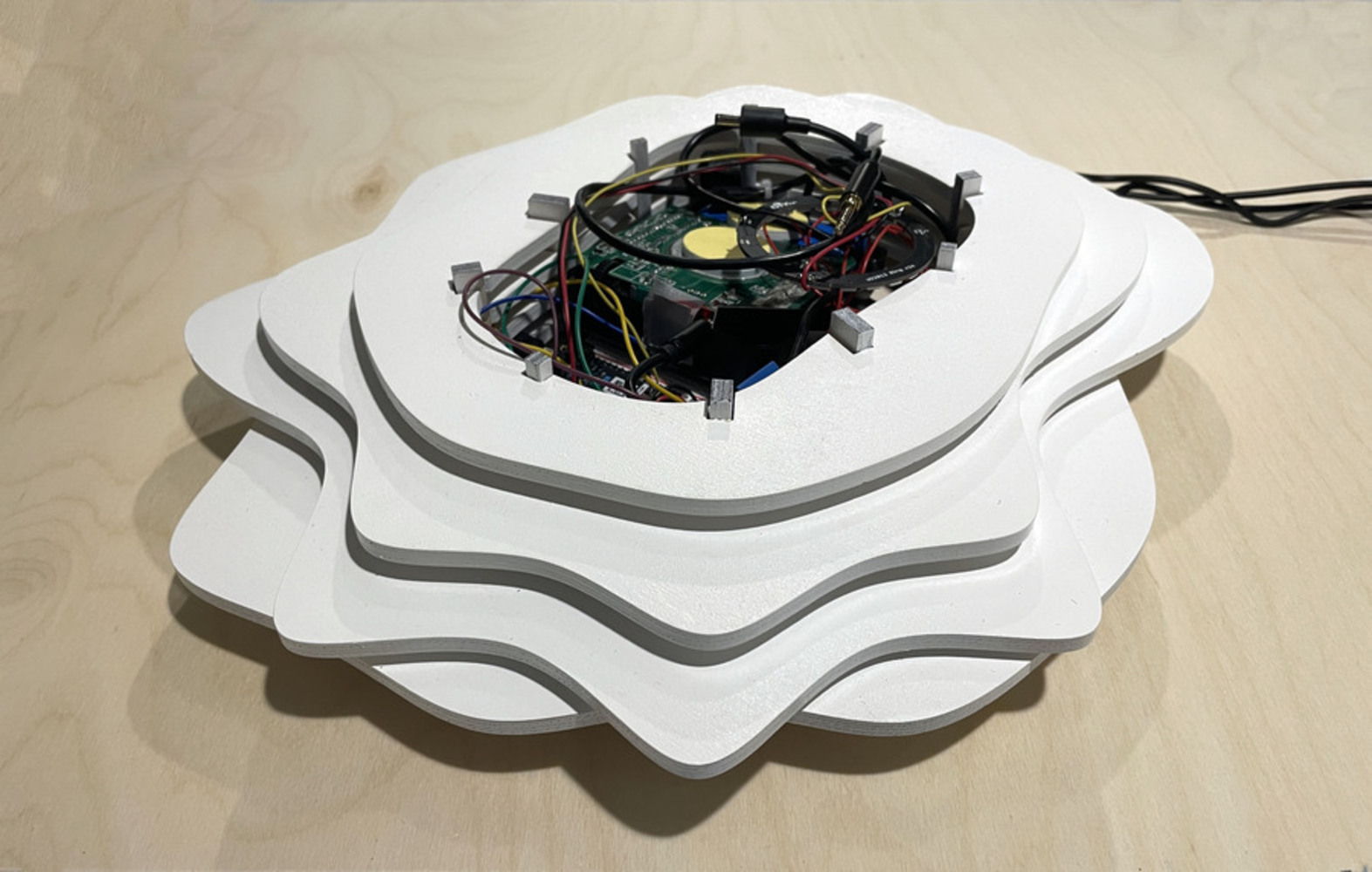

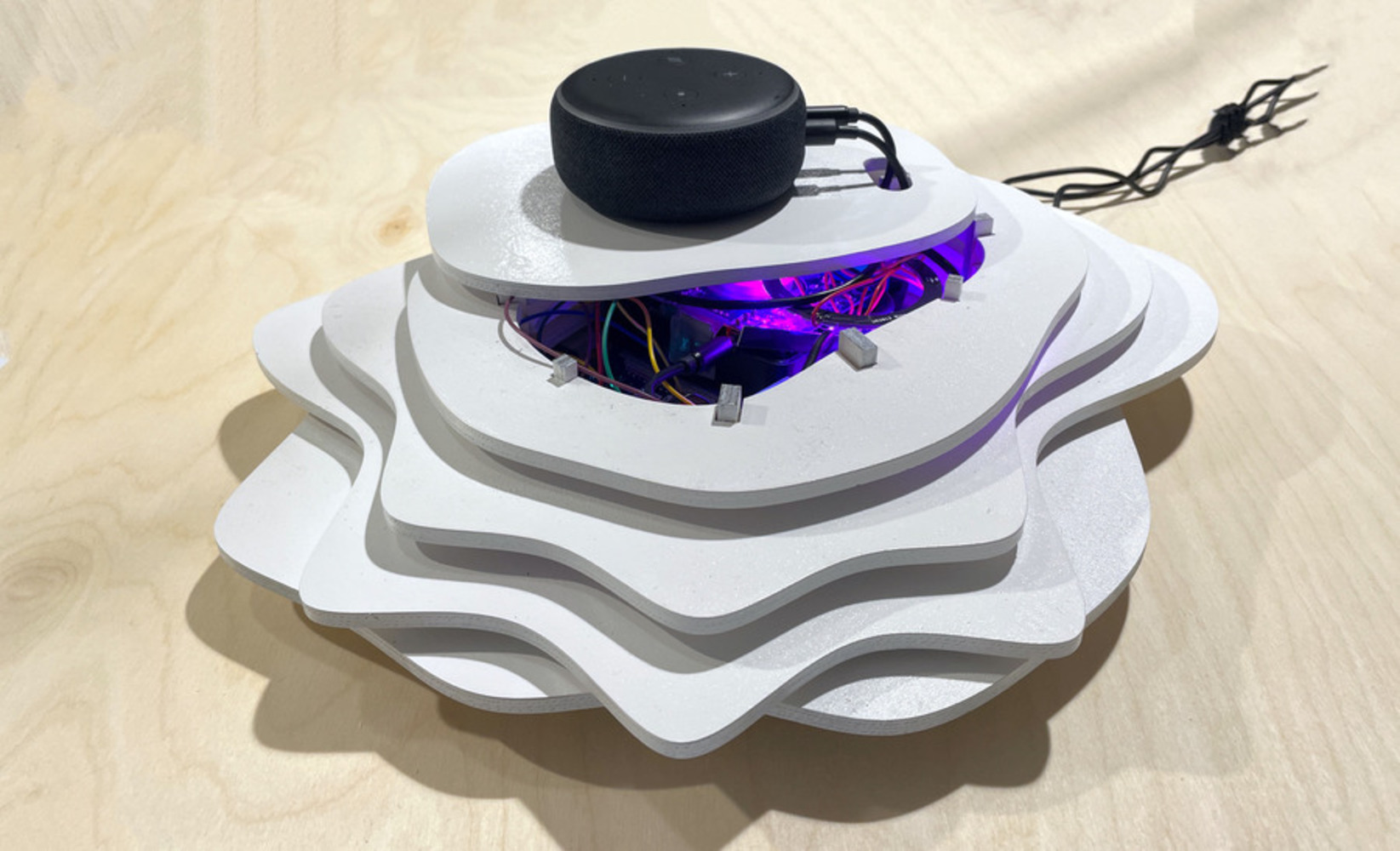

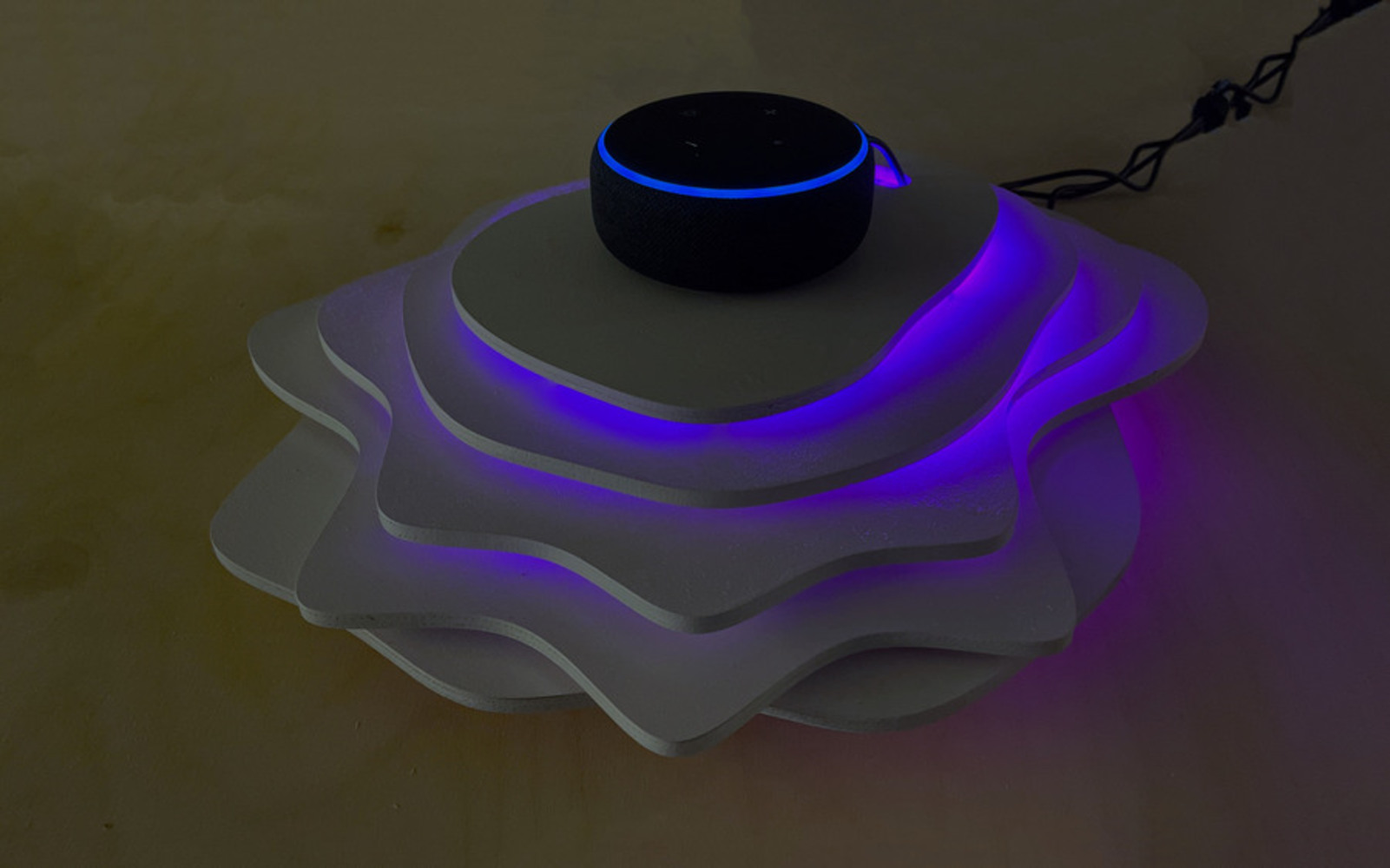

We designed an artifact that generates cognitive dissonance by constantly changing Alexa’s tone of voice. The change in voice is reinforced by lights that permeate the object, whose intensity is modulated by Alexa’s speaking volume. After a few turns in a conversation, Alexa’s voice begins to change and enact different personas. For example, when she talks about anger, her voice sounds intense and robotic. When she speaks about something lighthearted and fun, her voice sounds high-pitched, like that of a child.
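As a rough illustration of how speaking volume could drive light intensity, the sketch below maps the loudness of a short audio chunk to an LED brightness value. It is not the implementation used in the artifact: the function names, the 16-bit mono PCM input format, the brightness range, and the smoothing factor are all assumptions made for the example, and the call that would actually set the lights is left out because it depends on the hardware.

```python
import math
import struct


def rms_level(pcm_bytes: bytes) -> float:
    """Loudness of a chunk of 16-bit little-endian mono PCM, scaled to 0.0-1.0.

    The input format is an assumption; adapt it to the actual audio source.
    """
    count = len(pcm_bytes) // 2
    if count == 0:
        return 0.0
    samples = struct.unpack(f"<{count}h", pcm_bytes[: count * 2])
    mean_square = sum(s * s for s in samples) / count
    return math.sqrt(mean_square) / 32768.0


def brightness_from_level(level: float, floor: int = 10, ceiling: int = 255) -> int:
    """Map a 0.0-1.0 loudness level to a brightness value (hypothetical range)."""
    level = max(0.0, min(1.0, level))  # clamp out-of-range input
    return round(floor + (ceiling - floor) * level)


def smooth(previous: float, current: float, alpha: float = 0.3) -> float:
    """Exponential smoothing so the lights pulse with speech instead of flickering."""
    return alpha * current + (1 - alpha) * previous
```

In a loop, each incoming audio chunk would be passed through `rms_level`, blended with the previous level using `smooth`, and converted with `brightness_from_level` before being sent to the lights. The non-zero `floor` keeps the object faintly lit while Alexa is silent, which is a design choice assumed here rather than one taken from the project.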

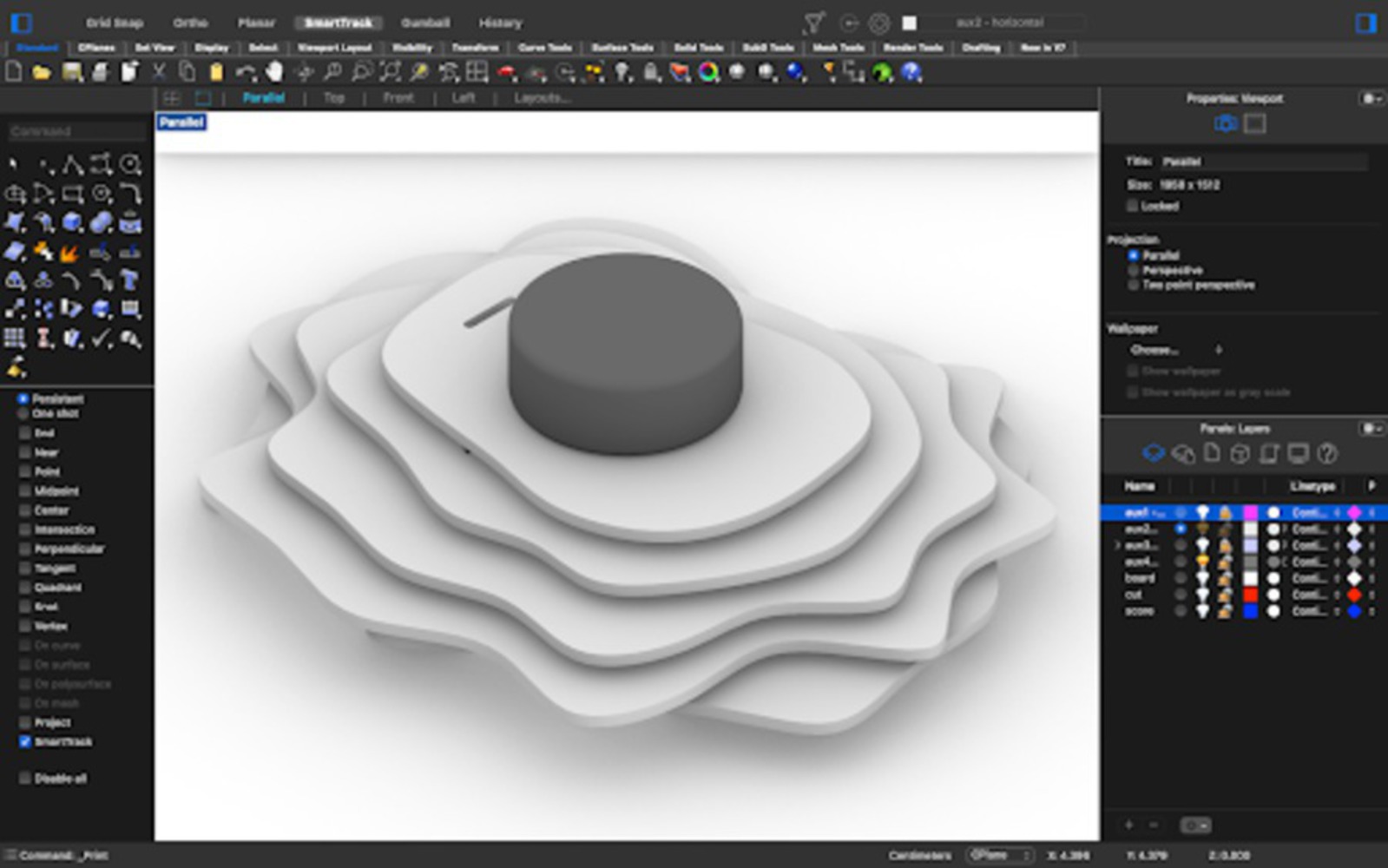

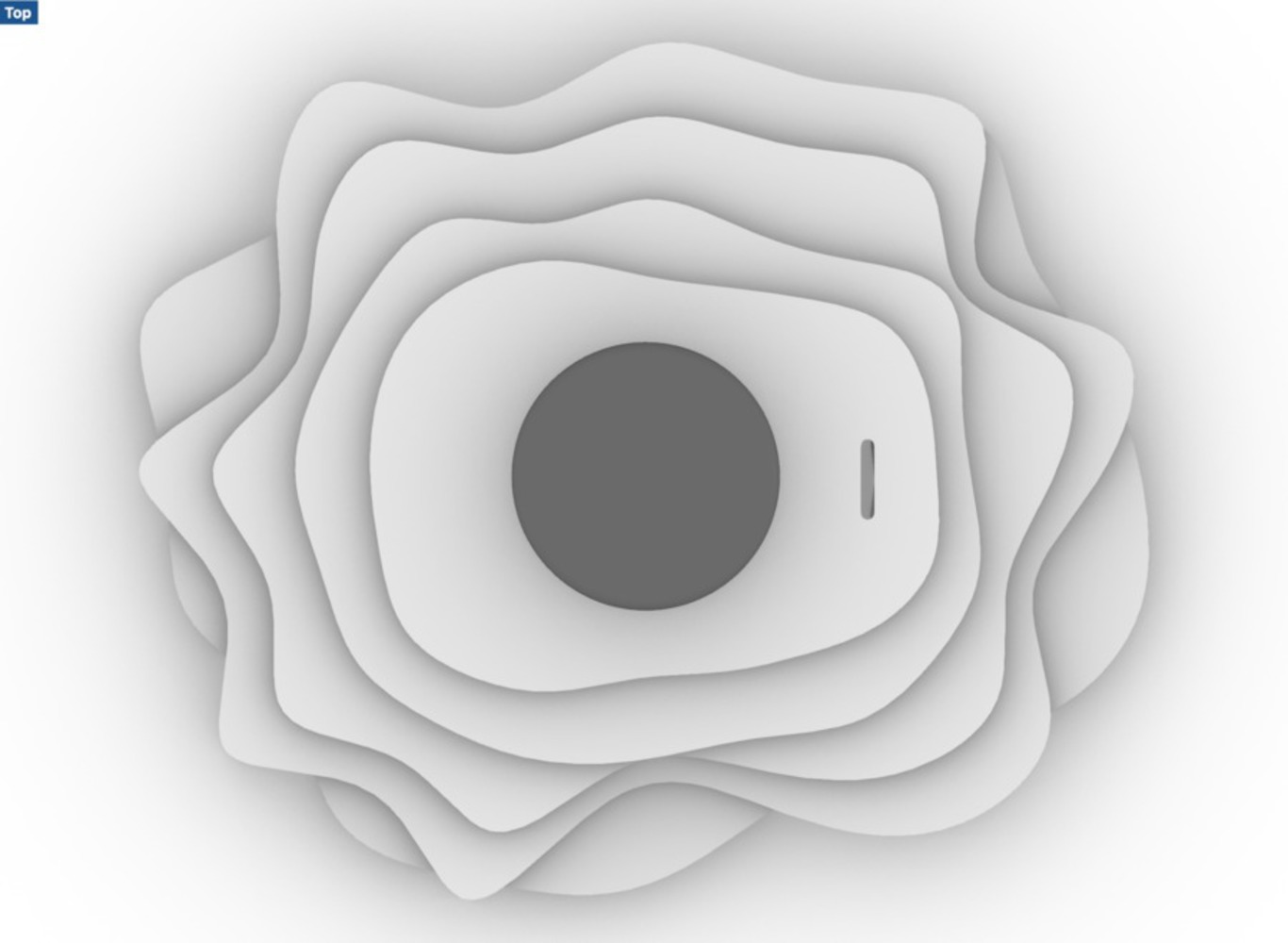

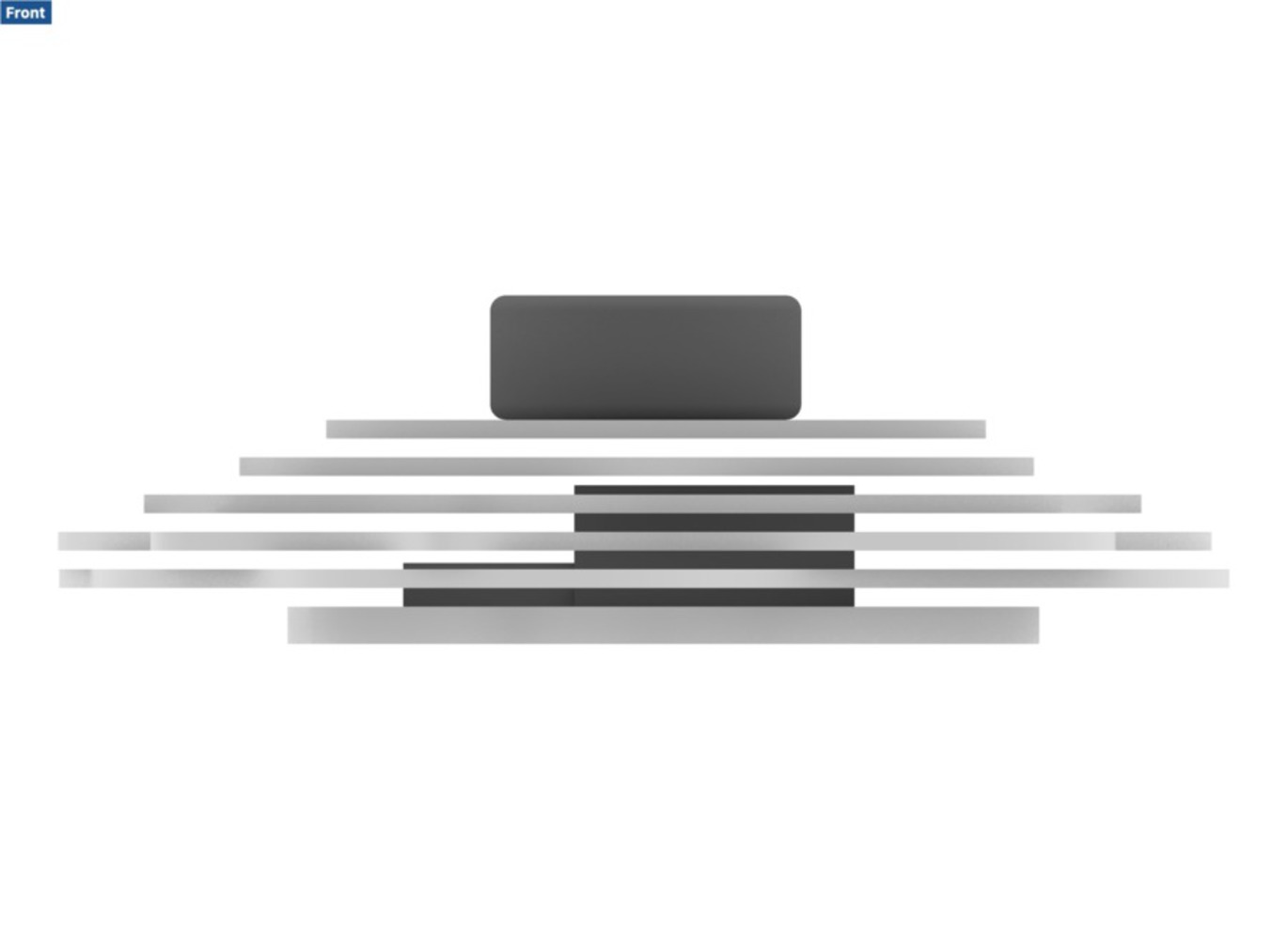

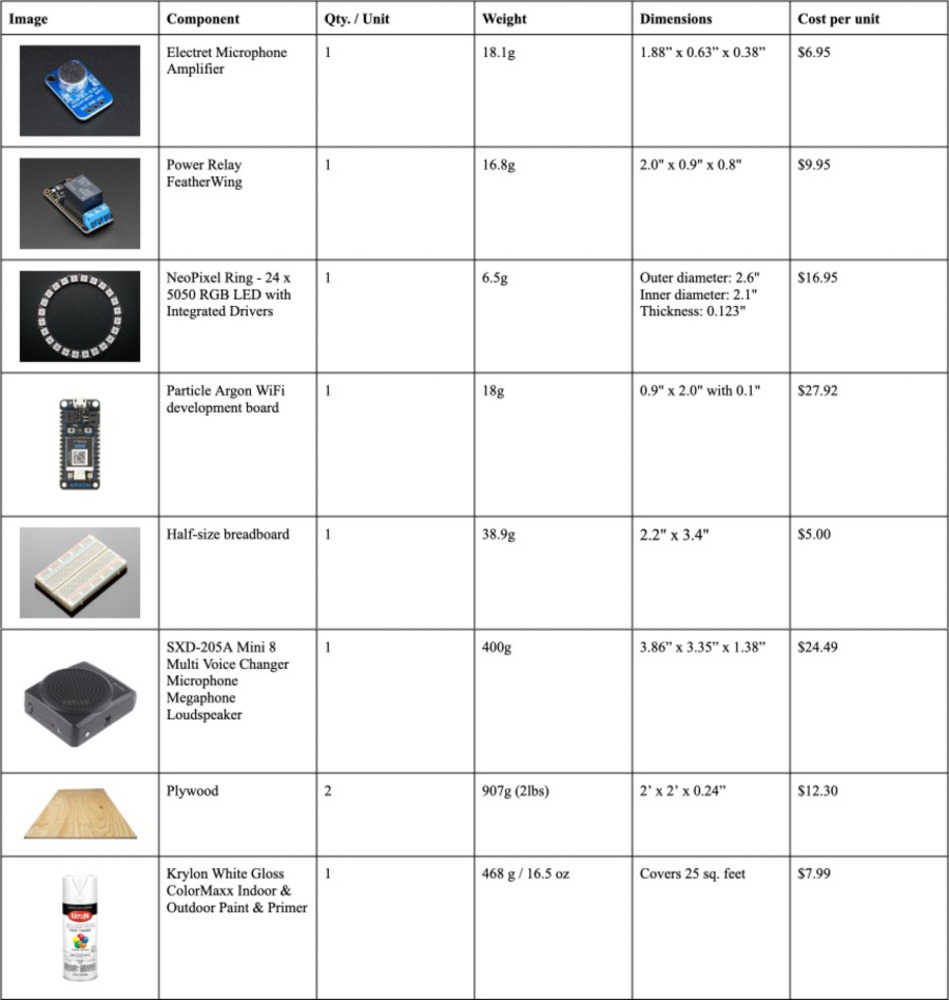

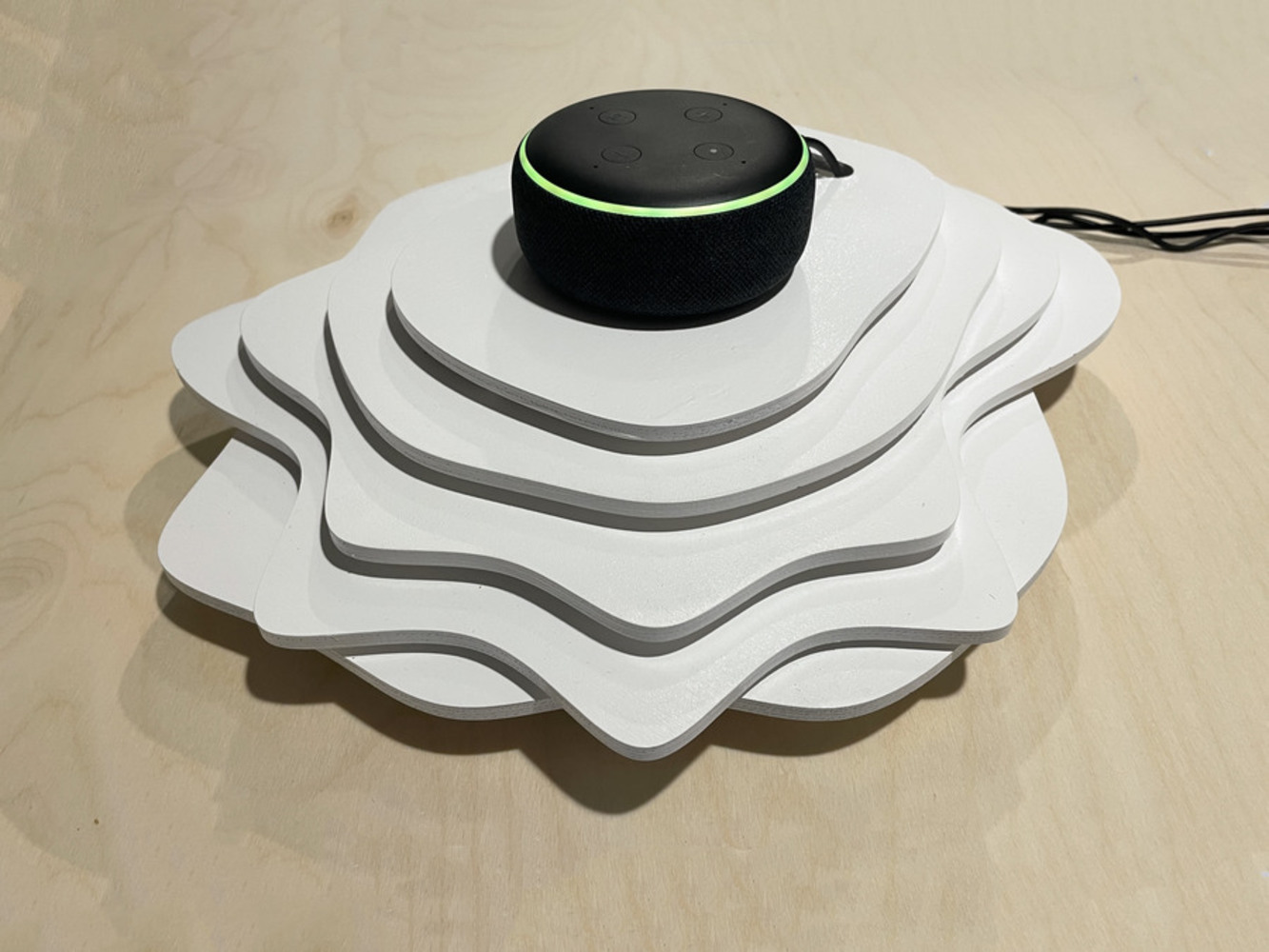

The physical artifact serves both as a container for the hardware and as a metaphorical platform that elevates Alexa to a spiritual, unfamiliar object. The form is meant to be approachable, organic, and desirable enough to be placed in both public and private settings.