Intention

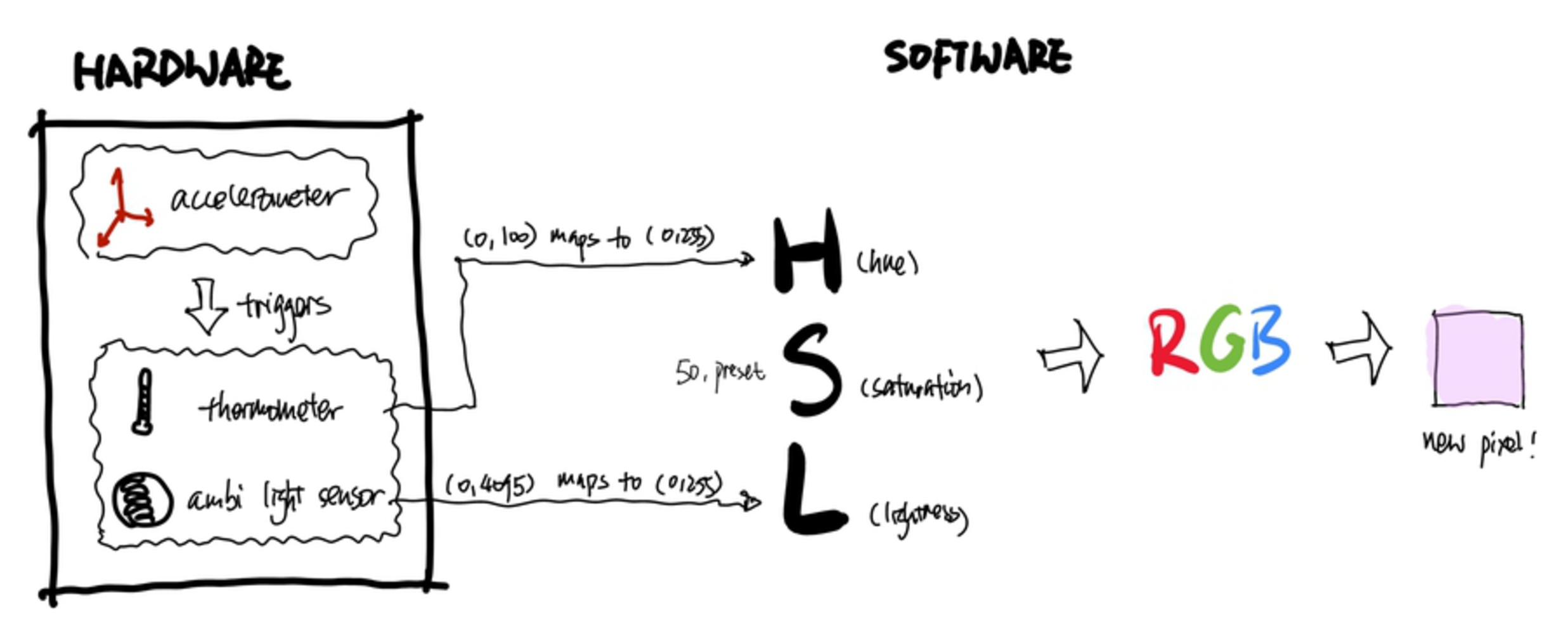

As we struggle through our daily chores, we can only focus on a few things at a time. Whether working, studying, making, or learning, we rarely pay enough attention to our immediate surroundings. We are therefore motivated to design and develop a solution that keeps track of what is happening around us as we go about our daily routine. It should give us a chance to self-reflect and encourage us to be mindful of what often gets neglected in life.