Process

To start the project, we focused on creating an ML model that could detect the colors assigned to our horoscopes. We first chose a specific color for each zodiac sign, based on our research into the signs. We then created a card for each color/zodiac pairing in Figma and used the cards as training data for the model. Finally, we used Edge Impulse to train an ML model that could pick the correct color out of the 12 available options. The trained model was fairly accurate for every color except one, which we kept in mind as we moved forward with the project.

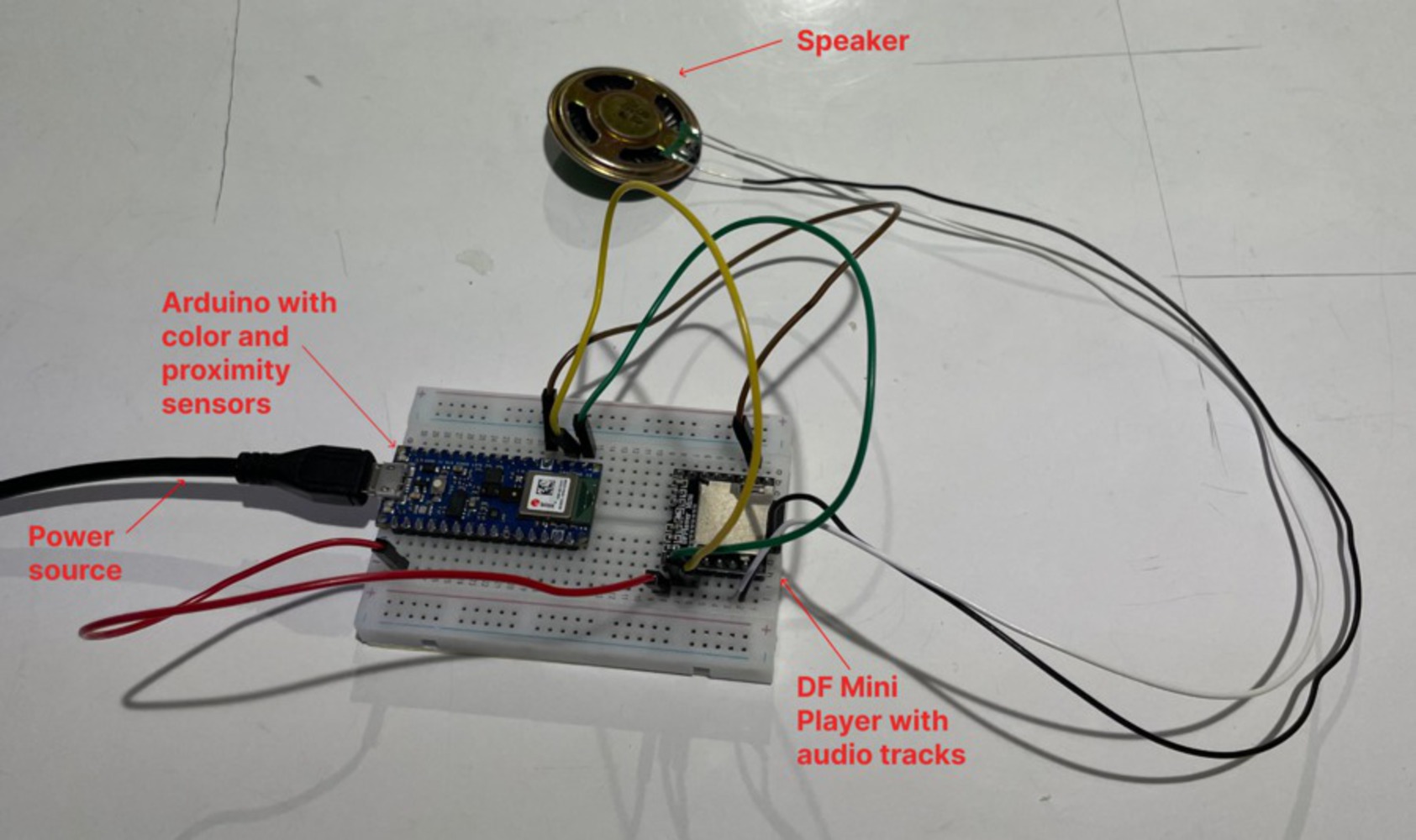

We then got acquainted with the MP3 player and its capabilities. We attached the MP3 player and speaker to our Arduino, learned how to upload files to the SD card, and wrote some basic code that played audio files in a loop.

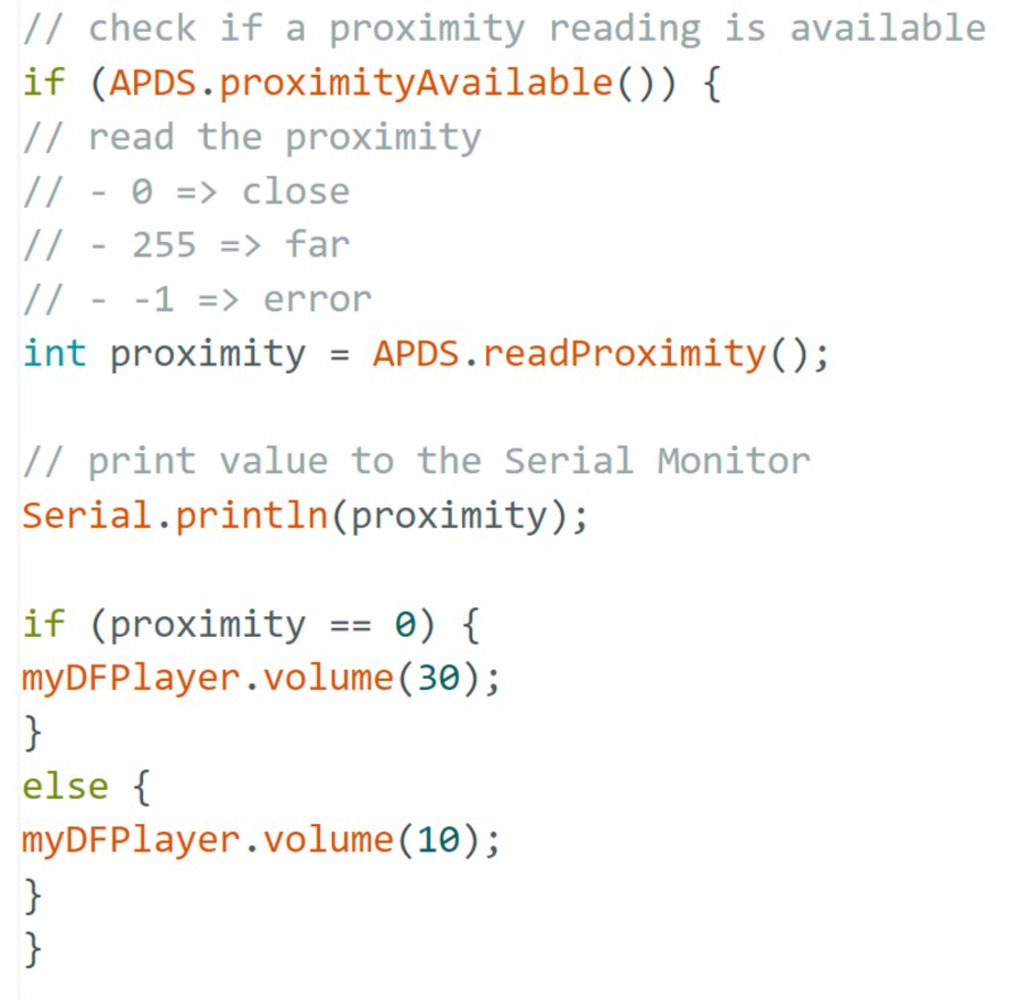

We then experimented with the proximity sensor. We first set up a simple loop that read proximity values from the sensor and printed them. Next, we combined this with what we had learned about audio to tie sound to proximity. We created an audio file for each horoscope reading and played the files in a time-based loop. We set the default volume to low and programmed the volume to increase whenever an object was detected close to the sensor. If no object was nearby, the volume stayed at its current level.
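The volume rule described above can be sketched as a small hardware-free function. This is a minimal illustration, not our actual sketch: the threshold, the two volume levels, and the name `nextVolume` are all hypothetical, and it assumes that a smaller sensor reading means a closer object (some sensors report the opposite, in which case the comparison should be flipped).

```cpp
// Hypothetical values: the real threshold and volume levels depend on the
// sensor and MP3 player used, and are not taken from the project code.
constexpr int kNearThreshold = 100;  // assumed: readings at or below this count as "close"
constexpr int kLowVolume = 10;       // assumed default (quiet) volume
constexpr int kHighVolume = 25;      // assumed raised volume

// Returns the volume to use after one proximity reading.
// Assumption: smaller readings mean a closer object.
// If the object is close, the volume is raised; otherwise it is left unchanged.
constexpr int nextVolume(int proximity, int currentVolume) {
    return proximity <= kNearThreshold ? kHighVolume : currentVolume;
}
```

In an Arduino `loop()`, the result would be computed once per sensor reading and passed to whatever volume call the MP3 player library provides.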

We then deployed the ML model to the Arduino and used it to detect the color, and therefore the zodiac sign, presented to it. We ran into issues at this step: the deployed model gave inaccurate readings on the Arduino even though it worked in Edge Impulse. After some debugging, we realized that the model only works when the colors come from the device it was trained on (Kelli’s laptop). Because saturation and brightness vary between devices, the model cannot give accurate readings for colors shown on other devices.

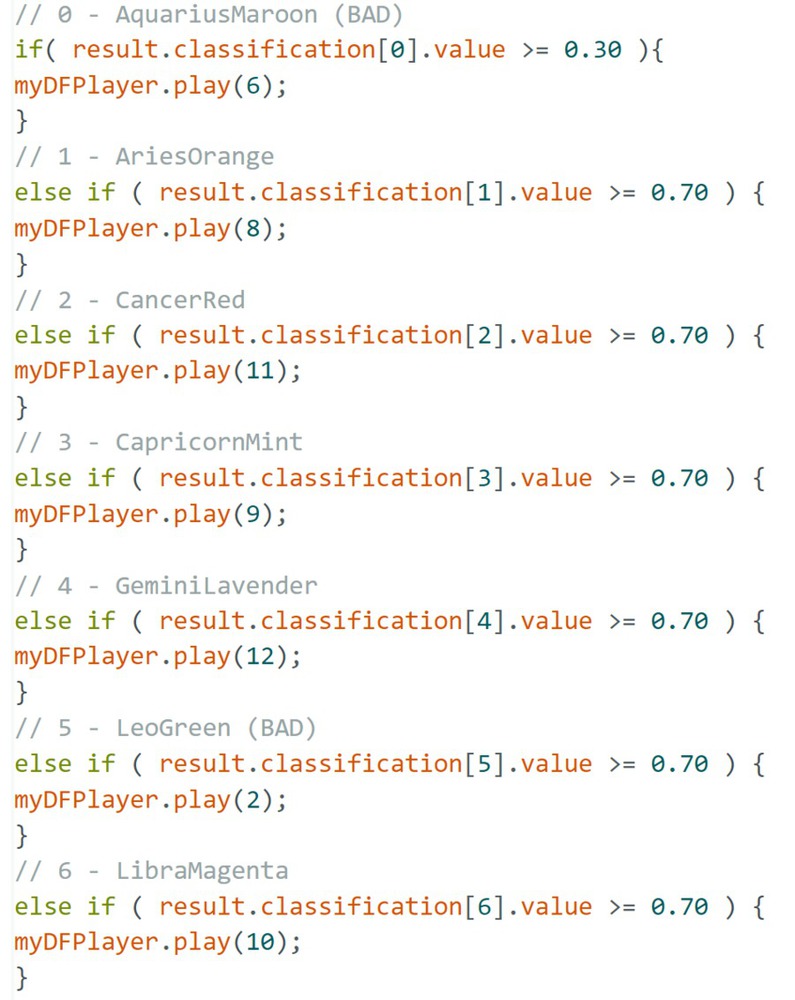

Finally, we used the detected color to select a track and the proximity of the color to set its volume. We wrote a series of if statements, one per possible color, each of which called the MP3 player to play the horoscope reading for that color. In addition, we wrote an if/else statement that checked whether the color was close to the sensor: if a nearby object was detected, it set the volume to high; otherwise, it set the volume to low.
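The selection logic can be sketched without any hardware calls. This is a hedged illustration rather than our actual code: instead of the chain of if statements described above, it relies on the ordering of an enum, and it assumes the tracks on the SD card are numbered 1 to 12 in the conventional zodiac order. The names `Sign`, `trackFor`, and `volumeFor`, and the volume values, are hypothetical.

```cpp
// Zodiac signs in their conventional order. Each sign stands in for the
// color the model detected; the color/sign pairing itself is not shown here.
enum class Sign {
    Aries, Taurus, Gemini, Cancer, Leo, Virgo,
    Libra, Scorpio, Sagittarius, Capricorn, Aquarius, Pisces,
    Unknown  // hypothetical: used when the model's prediction is not trusted
};

// Assumed file layout: track 1 = Aries ... track 12 = Pisces.
// Returns 0 for Unknown, meaning "play nothing".
constexpr int trackFor(Sign sign) {
    return sign == Sign::Unknown ? 0 : static_cast<int>(sign) + 1;
}

// Hypothetical volume levels for the if/else rule: high when near, low otherwise.
constexpr int kQuiet = 10;
constexpr int kLoud = 25;

constexpr int volumeFor(bool objectNear) {
    return objectNear ? kLoud : kQuiet;
}
```

A lookup like this keeps the mapping in one place, so changing which track belongs to which sign means reordering files rather than editing twelve branches. The if-statement version behaves the same way.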