Intent

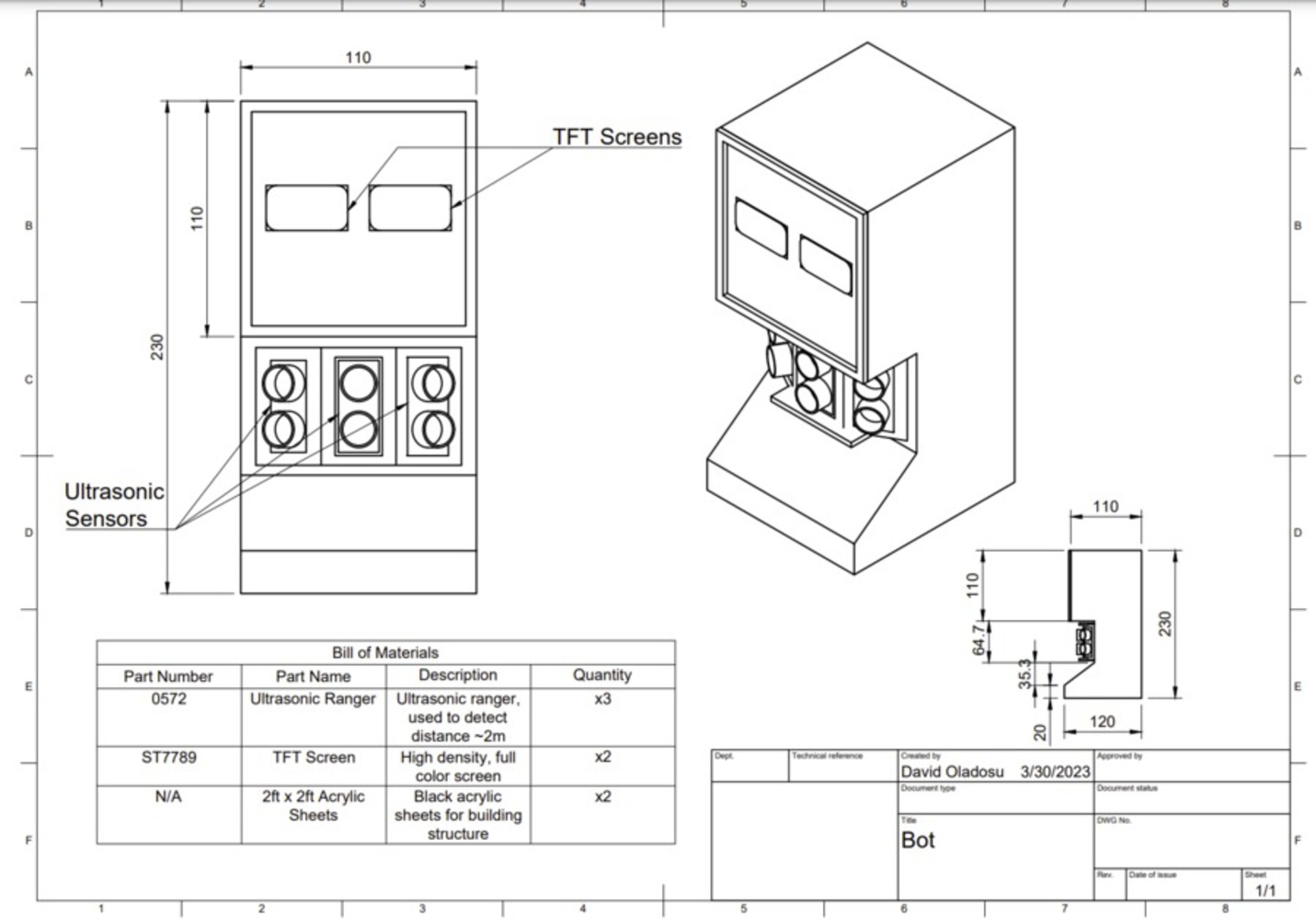

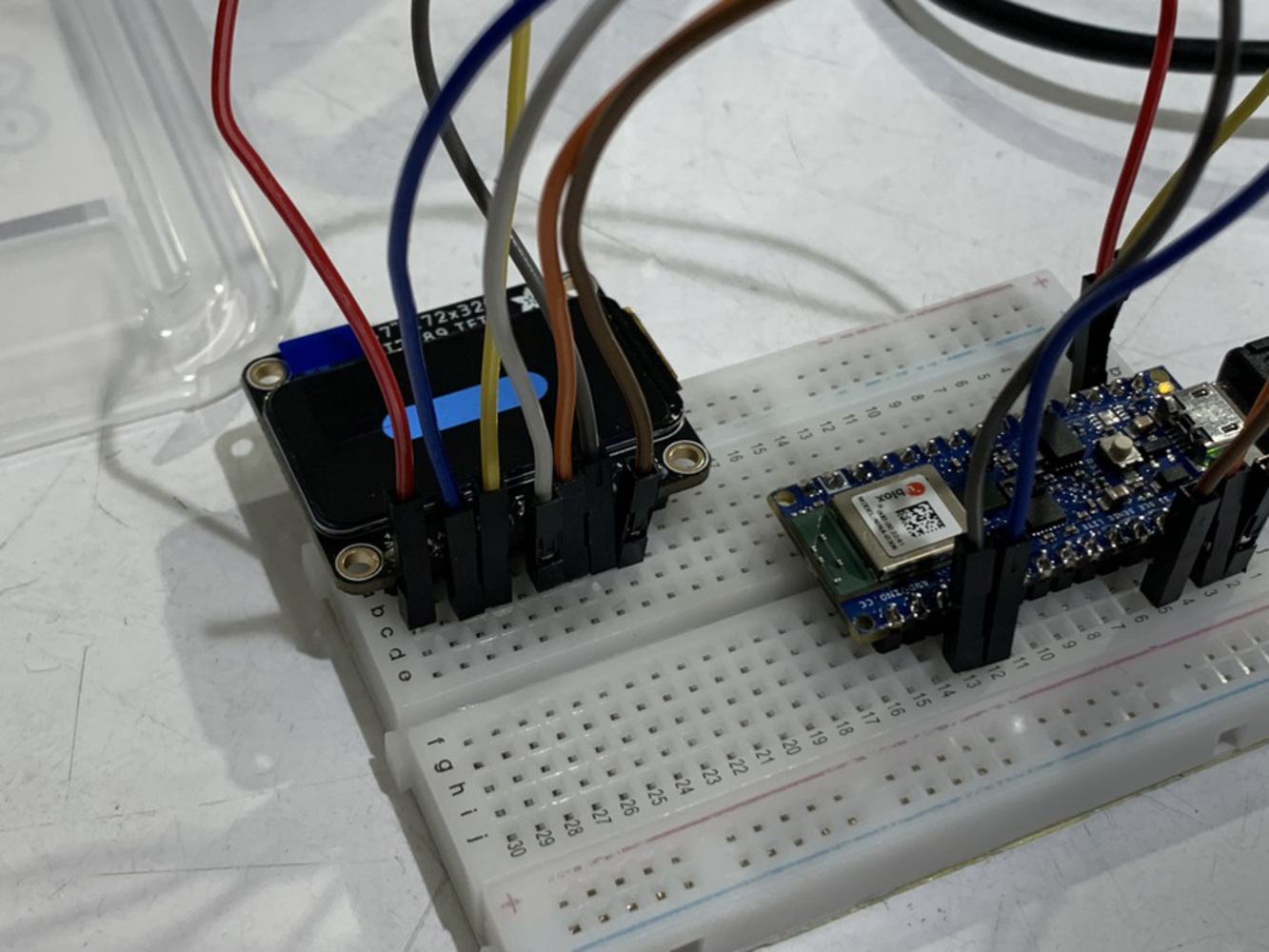

In this investigation, we were tasked with studying how A.I. and technology intersect with everyday interactions and domestic rituals. We approached this by exploring the ritualistic behavior of staring at a screen. In the past, before smart devices and televisions were part of daily life, people spent more time socializing, reading books, engaging in outdoor activities, and pursuing the arts, dividing their time among many different activities. Today, our devices captivate us and consume significant portions of our time and attention. We readily spend hours staring at screens with little to no understanding of the inner workings of the mechanisms and electronic systems that lie beneath them. Our goal is to create an unsettling interaction between people and screens that provokes more intentional thinking about habitual screen behaviors, such as subconsciously checking your phone for new notifications.