Process

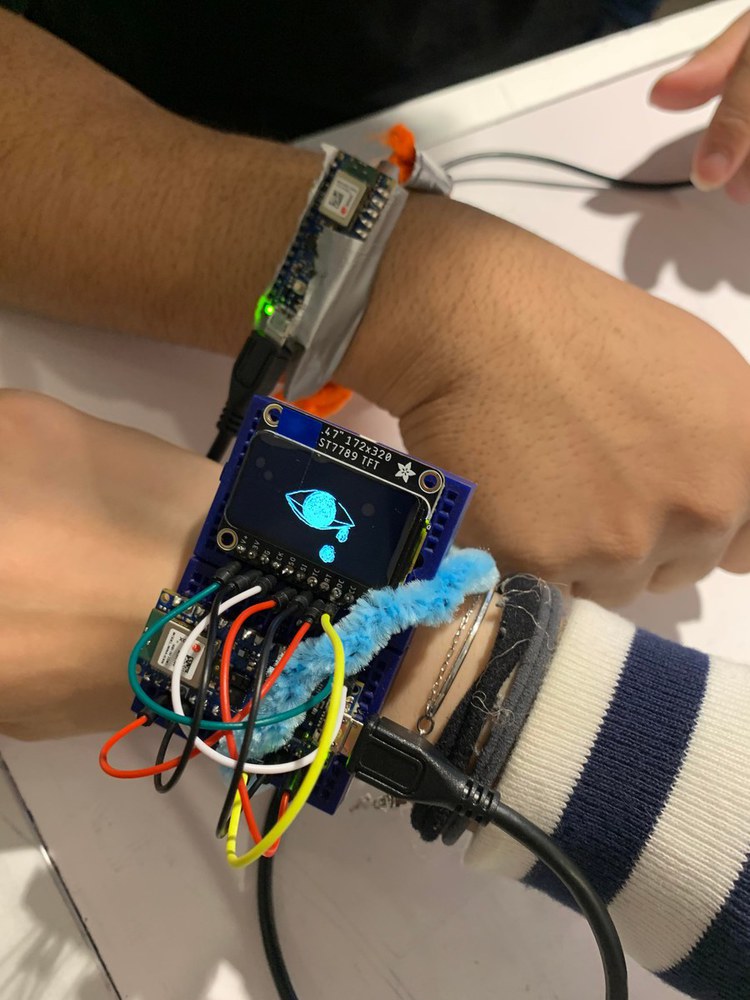

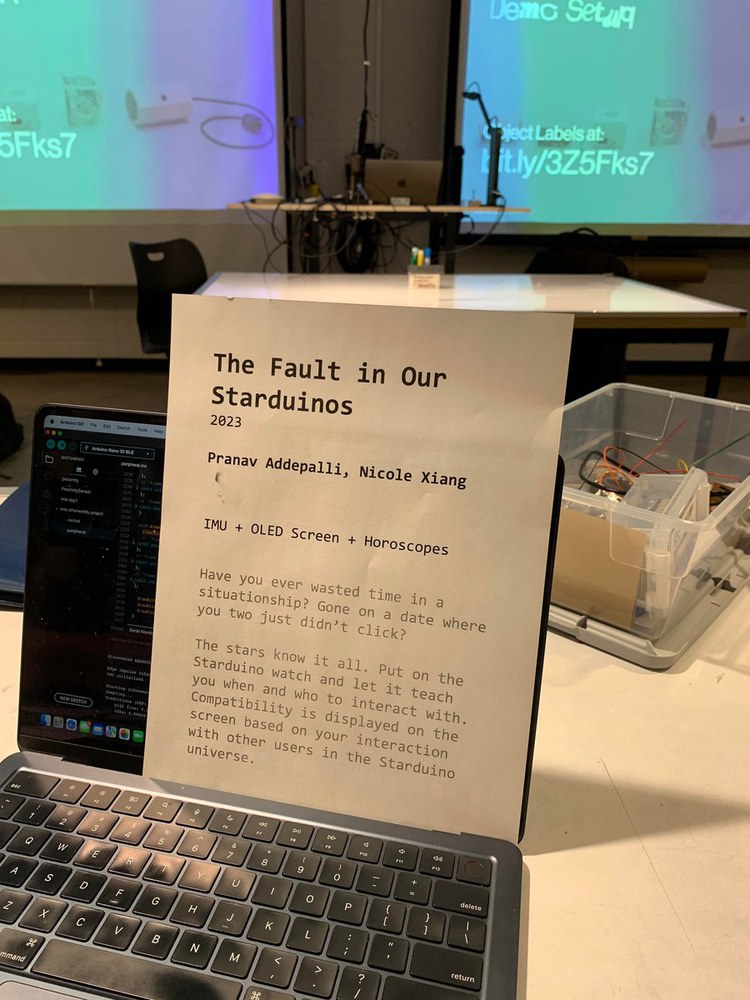

In our project, we began by exploring, building, and deploying models with Edge Impulse. We worked through a few examples that used the IMU to recognize and classify different gestures. These examples suggested several directions we could take, and we first planned a numerology-based wearable that promoted fitness. However, after making a band for the Arduino and turning it into a watch, we found it difficult to classify the different activities a person could do using only the motion data from a single wrist. As a result, we changed ideas and decided to have two Arduino watches communicate with each other. We quickly figured out the Bluetooth Low Energy (BLE) capabilities of the boards, so we built on that. Next, we experimented with different ways of drawing to the OLED screen. Because the screen redraws slowly, we found it works best to use small images that cover only a small part of the screen.

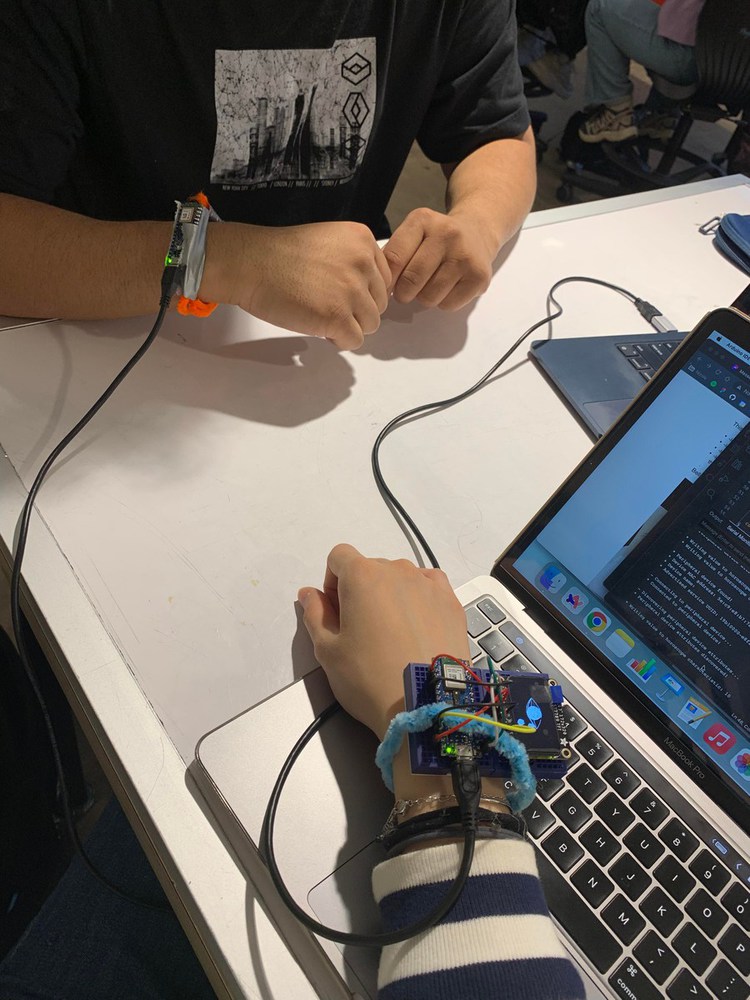

To integrate all of this, we built a system in which two watches, each hardcoded with an astrological sign, connect over BLE and send their sign data to each other. We then wanted to use Edge Impulse to track the interaction between the two users and classify it as a hug, holding hands, or nothing. Although the model seemed to train correctly, its results were inaccurate once deployed. We therefore narrowed the classes to a high-five or no interaction and used the result to show on the OLED screen whether the interaction was good or bad. For both of us, writing the code and putting together the basic setup went quickly; learning the new technologies in this project took up most of our time. We spent that time understanding each part of the project separately before combining everything at the end.
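The write-up does not say how the sign data was packaged for BLE. As a minimal sketch, assuming each watch sends its hardcoded sign as a single byte, the encoding and decoding could look like the code below. The `Sign` enum, its 0 to 11 calendar-order numbering, and the helpers `encodeSign`, `decodeSign`, and `signName` are all hypothetical. The BLE transport itself (for example, a one-byte characteristic, if the boards use the ArduinoBLE library) is left out so the sketch compiles as plain C++.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>
#include <string_view>

// Hypothetical wire format: each sign is one byte, 0..11 in calendar order
// starting from Aries. The original project does not specify its encoding.
enum class Sign : std::uint8_t {
  Aries, Taurus, Gemini, Cancer, Leo, Virgo,
  Libra, Scorpio, Sagittarius, Capricorn, Aquarius, Pisces
};

constexpr std::uint8_t kSignCount = 12;

// Encode this watch's hardcoded sign as the byte it would publish over BLE.
constexpr std::uint8_t encodeSign(Sign s) {
  return static_cast<std::uint8_t>(s);
}

// Decode a byte received from the other watch. Values outside 0..11
// (e.g. a corrupted or unexpected value) are rejected.
constexpr std::optional<Sign> decodeSign(std::uint8_t b) {
  if (b >= kSignCount) {
    return std::nullopt;
  }
  return static_cast<Sign>(b);
}

// Human-readable name, e.g. for drawing the other watch's sign on the OLED screen.
std::string_view signName(Sign s) {
  static constexpr std::string_view kNames[kSignCount] = {
      "Aries", "Taurus", "Gemini", "Cancer", "Leo", "Virgo",
      "Libra", "Scorpio", "Sagittarius", "Capricorn", "Aquarius", "Pisces"};
  const auto index = static_cast<std::uint8_t>(s);
  assert(index < kSignCount);  // only valid Sign values should reach here
  return kNames[index];
}
```

In this sketch, the sending watch would write the byte from `encodeSign` to whatever BLE characteristic carries the sign. The receiving watch would pass the byte it reads to `decodeSign` and draw the sign only when decoding succeeds. A single byte keeps the payload small, and the range check keeps an unexpected value from being treated as a sign.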