Process

Phase 1 - Collecting Data / Deciding Sensors for Input

Because the built-in sensor on the Arduino Nano 33 BLE Sense is small and covers only a limited area, we decided to use external sensors to widen the range of data we could collect.

We started off trying to use TCS34725 color sensors. We were able to follow the pinout and successfully interface with one sensor, but we ran into difficulties when trying to connect more. Not only was each sensor quite large, but each also had a fixed I2C address, so all of the data arrived as if from ‘one sensor’. To communicate with each sensor individually, we would have needed a multiplexer.

After meeting with Robert Zacharias, we took his advice and switched from the larger color sensors to smaller individual IR sensors, with the intention of building a 'primitive camera' out of an array of them. As we had little experience with physical computing, we used this work session to figure out:

- how to read the circuit diagram, in particular how to use the ground and power lines and how to choose the right resistors

- how to interface with the IR sensors through the Arduino, and how different colors and materials produced different values in the Serial Monitor

We started out by wiring a single IR sensor to the Arduino to see the difference in values read between dark and light colors, following the iDeaTe circuit diagram and tutorial.

Source: https://courses.ideate.cmu.edu/60-223/s2023/tutorials/IR-proximity-sensor

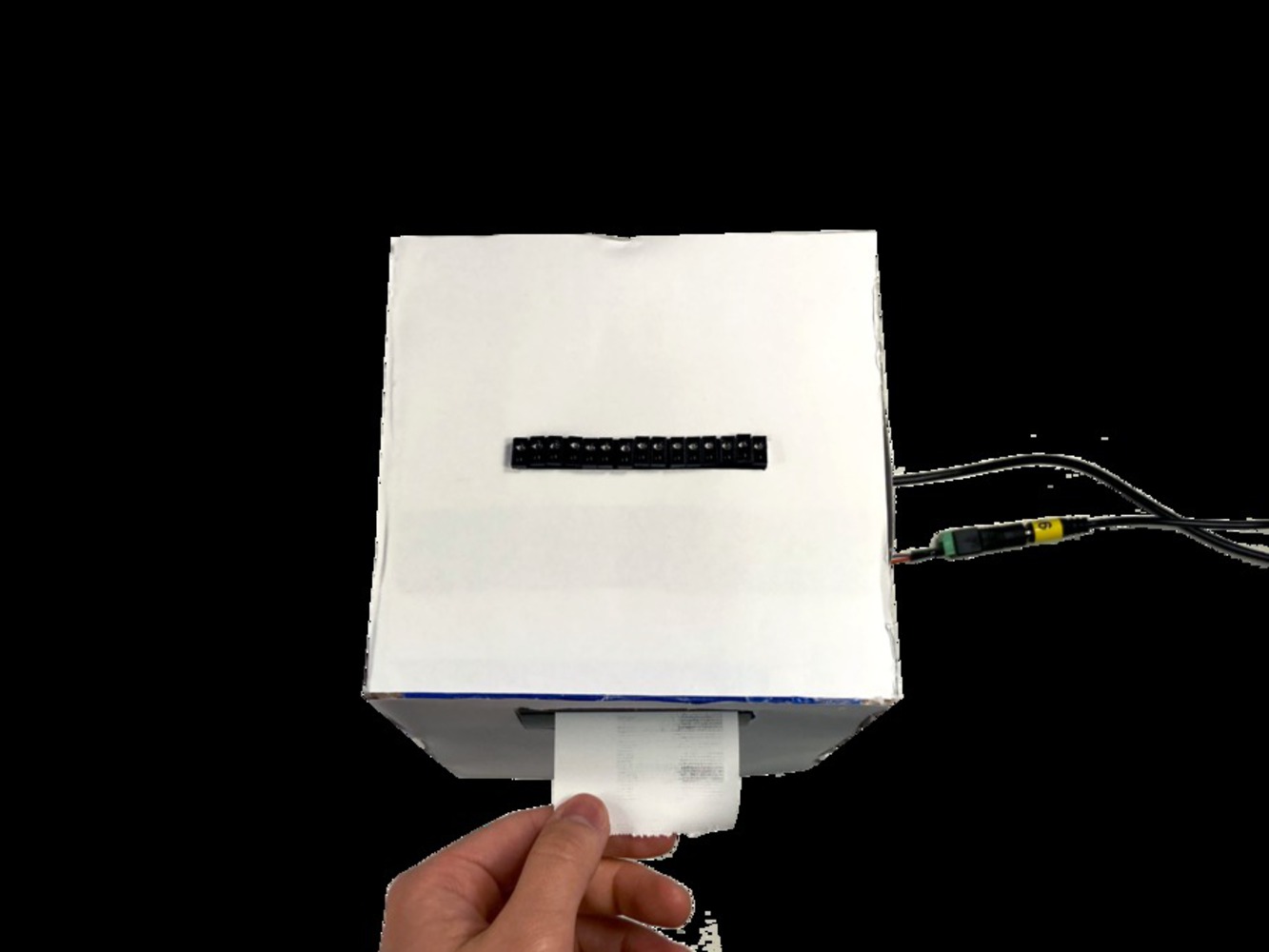
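The values printed in the Serial Monitor are raw `analogRead` readings, not voltages. The following minimal C++ sketch shows the conversion. It assumes the board's default 10-bit resolution, so readings run from 0 to 1023, and a 3.3 V analog reference. The names `readingToVolts`, `kReferenceVolts` and `kMaxReading` are hypothetical and do not come from the project code.

```cpp
// Convert a raw analogRead() value to volts.
// Assumptions (not from the project): default 10-bit resolution, so readings
// run 0-1023, and a 3.3 V analog reference.
constexpr double kReferenceVolts = 3.3;
constexpr int kMaxReading = 1023;  // 2^10 - 1, the largest 10-bit reading

constexpr double readingToVolts(int reading) {
  // Scale linearly: 0 -> 0 V, kMaxReading -> kReferenceVolts.
  return reading * kReferenceVolts / kMaxReading;
}
```

For example, `readingToVolts(512)` comes out to about 1.65 V. If `analogReadResolution` has been used to raise the resolution (for example to 12 bits), `kMaxReading` would need to change to match.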

After testing each sensor to make sure it worked, we arranged 15 IR sensors in a line and soldered them to a protoboard, so that we could collect more data and determine the ‘length’ of the palm line. This part required a lot of discipline and diligence, not only because it was Clover’s first time soldering, but also because of the large number and density of components. Each IR sensor had to be connected to 2 separate resistors, 1 Arduino/multiplexer analog pin, a power source, and a ground line, totalling 5 × 15 = 75 unique connections overall. To keep the board legible, Clover placed the two resistor types on separate sides (220Ω on the left, 5.6kΩ on the right) and likewise kept the power and ground connections on separate sides. The wires were color coded accordingly:

- Red (ground)

- Black (power)

- Blue (multiplexer/Arduino analog pin)

Additionally, we kept the wires short to prevent tangles. The entire process took around 4 hours in the Digital Fabrication lab, because we took our time double-checking each step and testing each sensor after it was soldered. We wanted to avoid major mistakes, since we knew they would be difficult and even more time-consuming to fix.

We also trimmed the wire ends on the other side of the board to prevent accidental connections or shorts.
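With 15 sensors in a row, each set of readings behaves like a single row of pixels from the 'primitive camera'. The sketch below shows one possible way to turn such a row into a ‘length’, though not necessarily the method this project used. It counts the longest run of adjacent sensors whose readings fall below a threshold. The sketch assumes that darker surfaces, such as a palm line, give lower readings, and that the threshold is picked by hand from Serial Monitor values. `longestDarkRun` and `kNumSensors` are hypothetical names.

```cpp
// Hypothetical post-processing: treat the 15 readings as a one-row "image"
// and return the longest run of adjacent sensors that see a dark line.
// Assumes darker surfaces give LOWER readings and a hand-picked threshold.
constexpr int kNumSensors = 15;

constexpr int longestDarkRun(const int readings[], int threshold) {
  int longest = 0;  // longest run of dark sensors seen so far
  int current = 0;  // length of the dark run ending at sensor i
  for (int i = 0; i < kNumSensors; ++i) {
    if (readings[i] < threshold) {
      ++current;  // this sensor is over the line, so extend the run
      if (current > longest) {
        longest = current;
      }
    } else {
      current = 0;  // a light reading breaks the run
    }
  }
  return longest;
}
```

The result is a count of sensors. Turning it into a physical length would mean multiplying by the spacing between sensors on the protoboard. If the wiring makes darker surfaces read higher instead, the comparison should be flipped.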

One difficulty we discovered only after soldering all of these sensors was that each of the 15 sensors needed its own analog pin, but the Arduino Nano only has 8 analog pins. This meant we needed multiplexers to read more analog inputs than the board provides. In another meeting, Robert Zacharias pointed us toward the CD4051, an 8-channel analog multiplexer that connects one of its 8 inputs at a time to a single output pin. Since we had 15 sensors, we needed two of them (2 × 8 = 16 channels). Here, the main difficulty was understanding how to select each of the 8 channels with an array of 3 ints (one per select line, since 3 bits can encode 2³ = 8 channels) in the code:
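What follows is a minimal sketch of the channel-selection logic. It assumes the standard CD4051 pin naming, in which select inputs A, B and C form a 3-bit binary number with A as the least significant bit, and the enabled channel is the one whose number matches. It also assumes that sensors 0 to 7 are wired in order to the first CD4051 and sensors 8 to 14 to the second. All function and constant names are hypothetical and are not taken from the project code.

```cpp
constexpr int kChannelsPerMux = 8;  // a CD4051 has 8 channels
constexpr int kSelectLines = 3;     // A, B, C: 2^3 = 8 channels

// Level (0 or 1) of select line `line` (0 = A, 1 = B, 2 = C) for `channel`.
constexpr int selectBit(int channel, int line) {
  return (channel >> line) & 1;
}

// Fill an array of 3 ints with the A, B, C levels for `channel` (0-7).
// Example: channel 6 is binary 110, so bits = {0, 1, 1} (A = 0, B = 1, C = 1).
void channelToSelectBits(int channel, int bits[kSelectLines]) {
  for (int line = 0; line < kSelectLines; ++line) {
    bits[line] = selectBit(channel, line);
  }
}

// Which CD4051 (0 or 1) a sensor (0-14) is wired to, under the assumed wiring.
constexpr int muxForSensor(int sensor) {
  return sensor / kChannelsPerMux;
}

// Which channel (0-7) on that CD4051 the sensor uses, under the assumed wiring.
constexpr int channelForSensor(int sensor) {
  return sensor % kChannelsPerMux;
}
```

On the Arduino, each entry of `bits` would be written to the pin wired to A, B or C using `digitalWrite(pin, bits[i] ? HIGH : LOW)`. After that, calling `analogRead` on the analog pin connected to that multiplexer's common output returns the selected sensor's value. The actual pin numbers depend on the wiring and are not given here.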