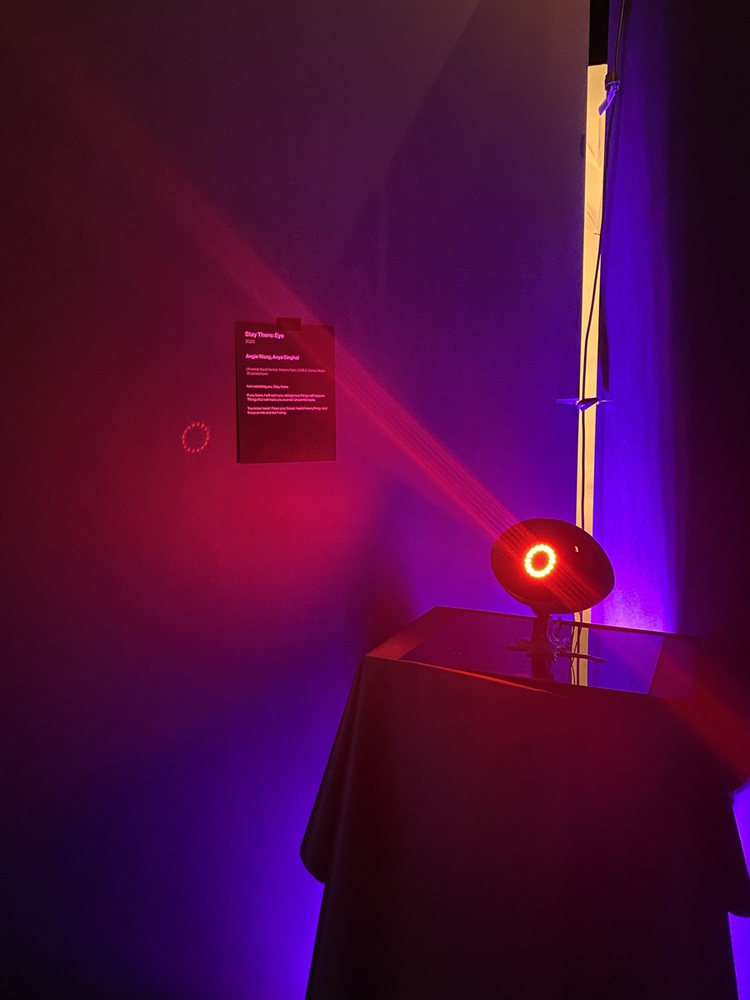

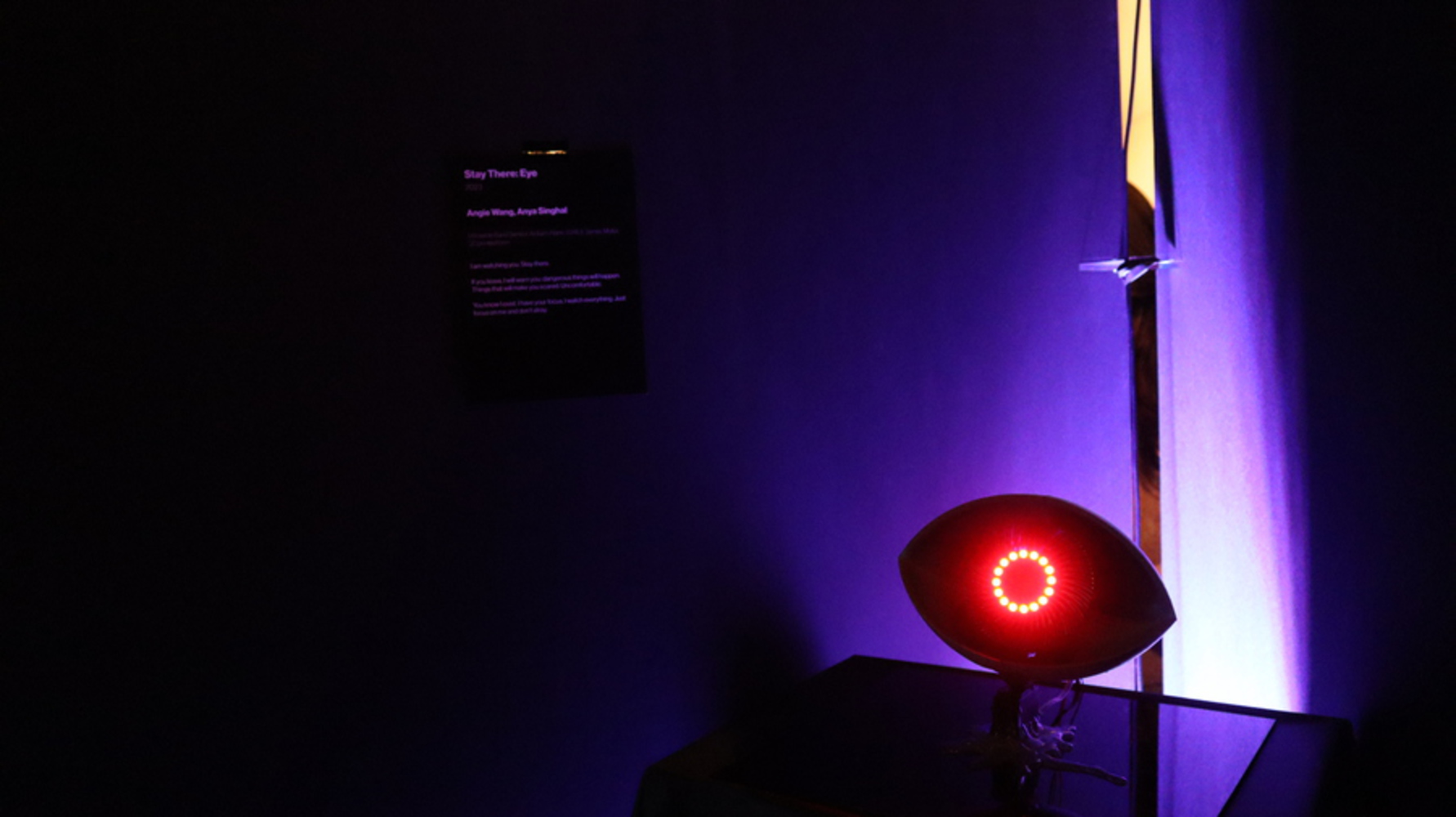

II. Stay There: Eye

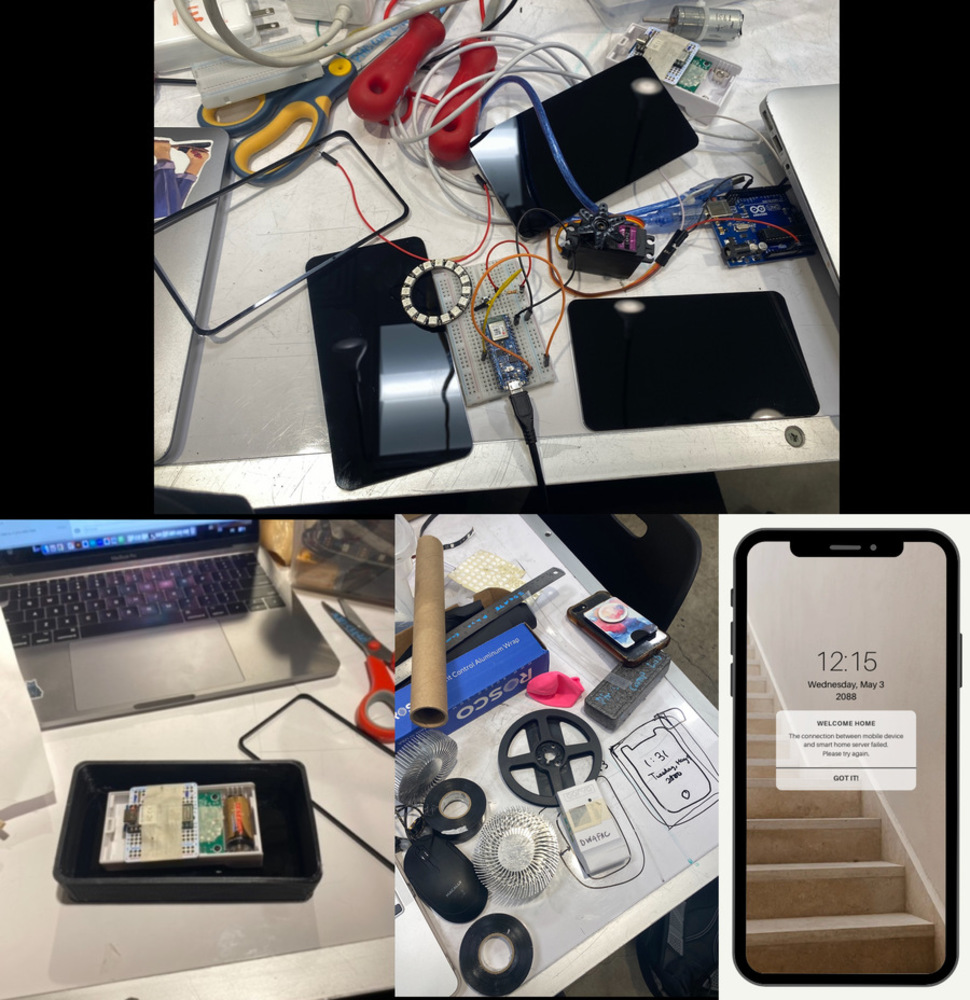

Building the mechanical eye for our project took several rounds of iteration.

Our first step was to 3D print the shell of the eye, but we quickly realized it wasn't visible enough in the room. To fix this, we scaled the model up and printed it in two parts. Getting the result we wanted took three days in total: 34 hours of printing, plus two failed prints that we had to troubleshoot.

Next, we experimented with different materials and spheres for the eye until we found a heat sink in the iDeate lab, which we decided to use as the pupil. However, the heat sink was heavy, while the servo motor we used to control the eye's rotation was relatively small and light. We asked the lab assistant at Techspark for help, and after some punching, sanding, and gluing, we finally managed to assemble the eye.

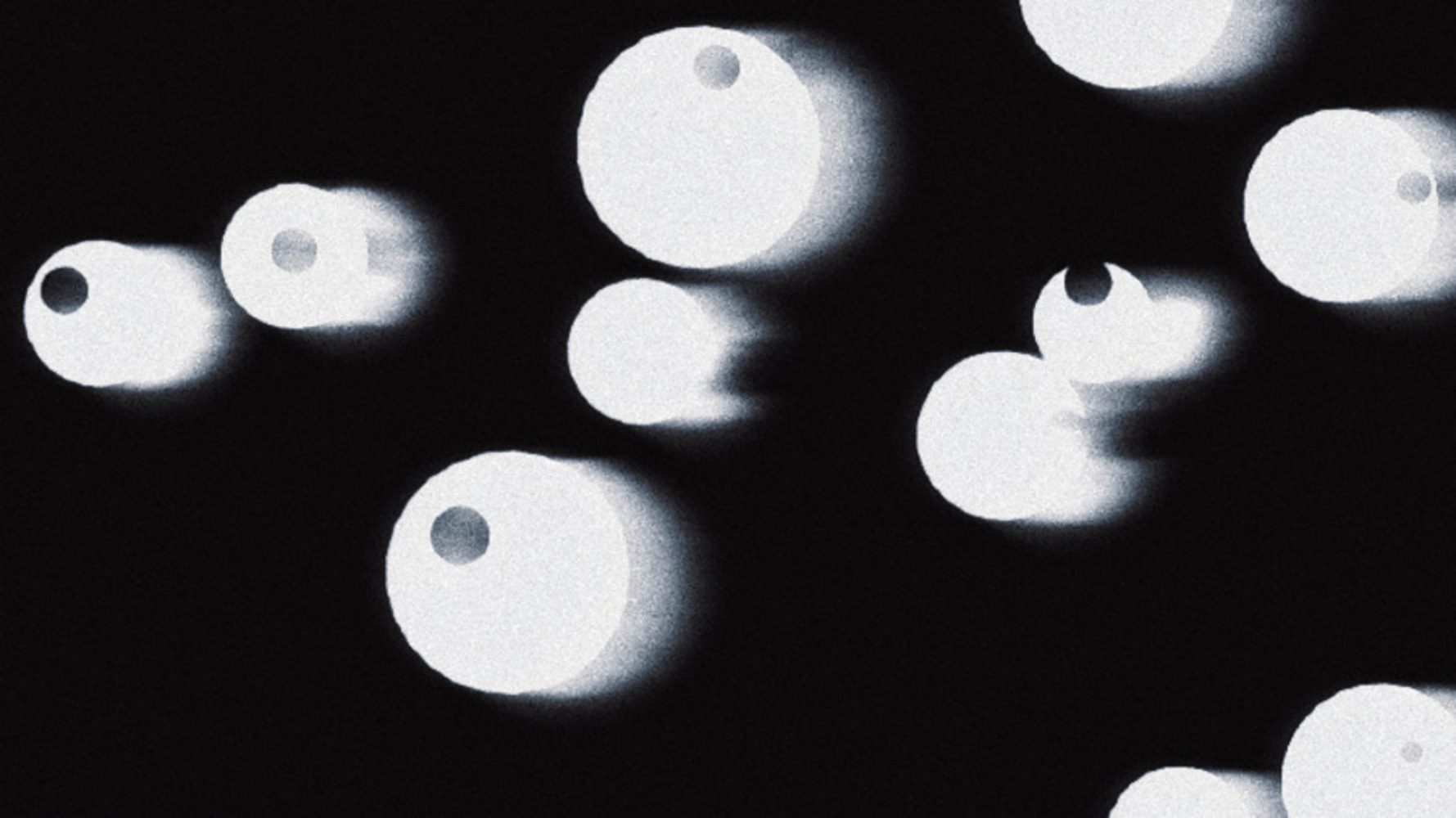

The final step was installing the eyes in the exhibition. Because of their weight, the eyes were difficult to attach to the motors on the exhibition table, so we glued them to a black acrylic plate to keep them upright. During this process, we came up with the idea of using hot glue to make it look as if the eyes had legs and were trying to climb out of the table. We implemented this idea to give the eyes a more "animistic" appearance.