Project Development

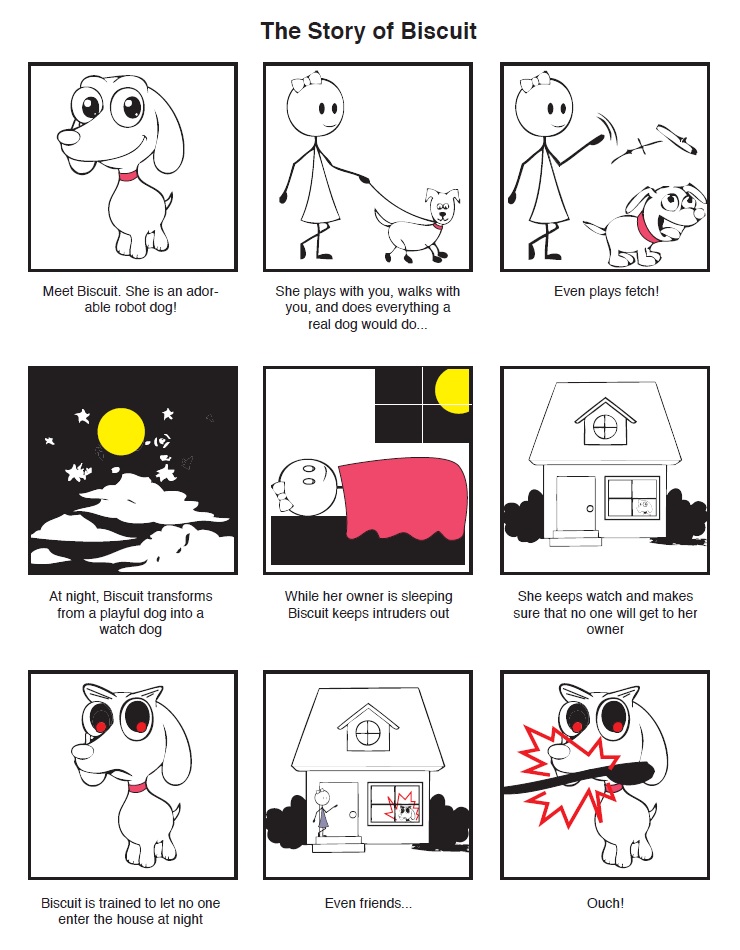

Robotic dogs already exist and make popular presents. Our team imagined what would happen if these dogs were integrated into the Internet of Things and became not only companions but also part of home security systems. This is a logical extension of their current functionality, given that many people get dogs to help keep them safe. However, a robotic dog might face the same struggle as a real one, namely telling friend from foe, and it might be much harder to subdue or deactivate if the owner is unable to do so for any reason. With this in mind, we developed a scenario in which a good technology might make the wrong decision: