Created: December 8th, 2017

One of our goals for this project was to create a space that shows empathy towards those who interact with it. We decided that one way to achieve this was to use text and sensor inputs to get a better idea of how the user was feeling. We thought it was important to collect both what the user reported about their own feelings and physiological indicators that the user cannot control, since people do not always say how they feel. With this input, we created an algorithm to interpret the user’s feelings, and then we played music in response to that mood.

One question we had was whether to play music that matched the mood or music meant to improve it (for example, playing calming music to soothe someone who is stressed). Our answer came from the video “Brené Brown on Empathy,” which stresses that in order to show empathy, one must “connect with something in [oneself] that knows that feeling.” Empathy means connecting through the feeling itself. Thus, we decided to match the music to the feeling.

This project drew ideas from The Eyes of the Skin by Juhani Pallasmaa, who discusses how many types of media today appeal to our sense of sight rather than our other senses. We incorporated the idea of acoustic intimacy by creating a project that relies more on sound than on sight and by trying to make the space welcoming through sound. We also built on the idea of bodily identification by using heart rate to shape the user’s experience in the space.

We also pulled ideas from Of Other Spaces by Michel Foucault, which introduces the idea of heterotopias. Our space aims to create a meditative environment in which the user can get more in touch with their feelings. Meditation and reflection can be seen as types of heterotopias: the space is an “other” place that allows people to step away from the rest of their lives and spend some time reflecting on their mood.

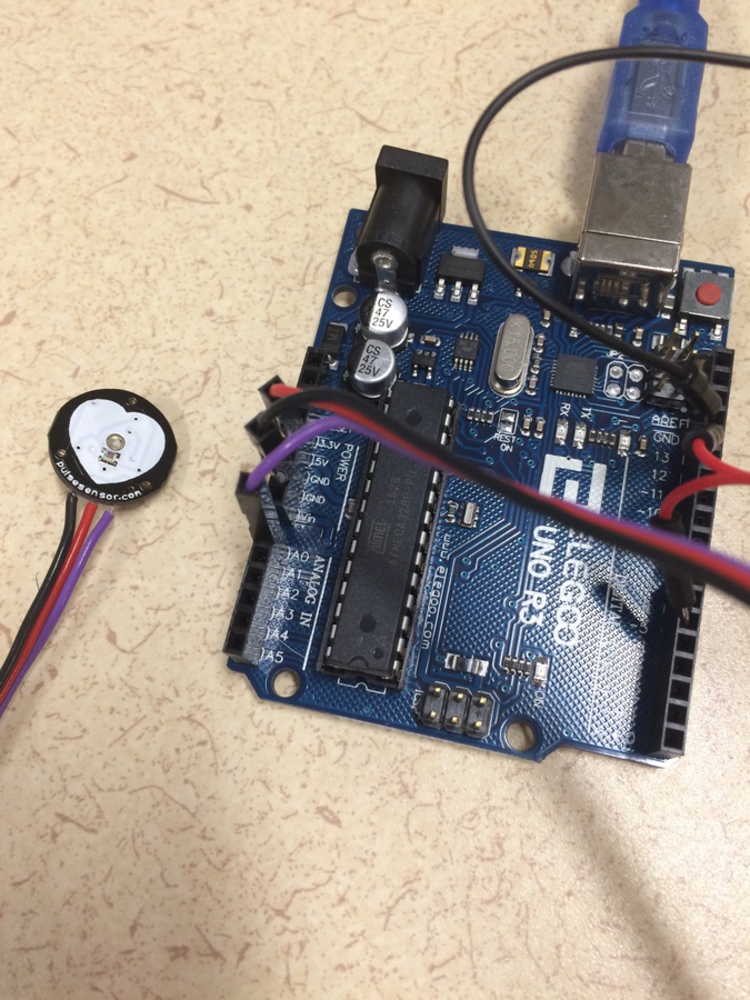

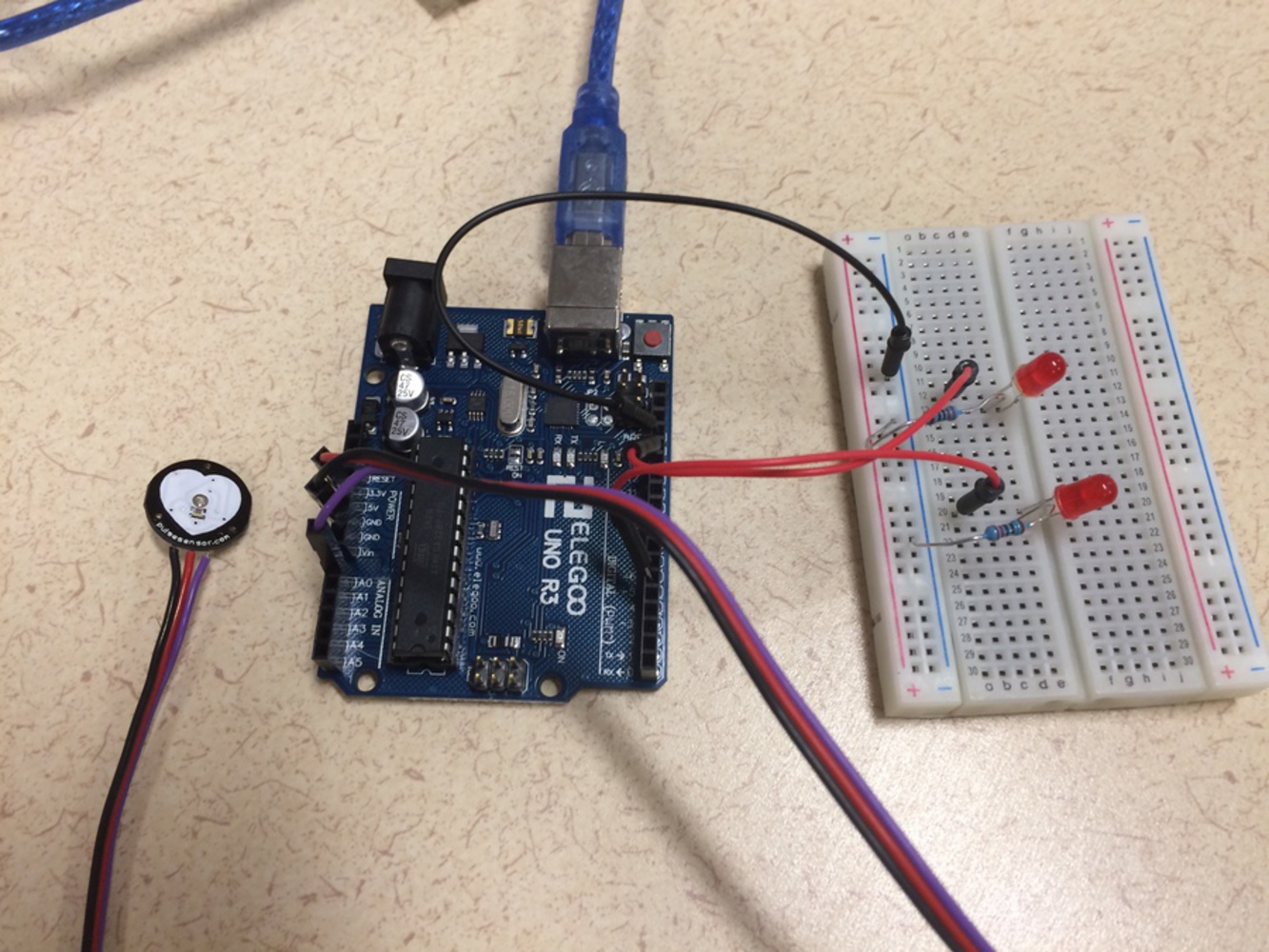

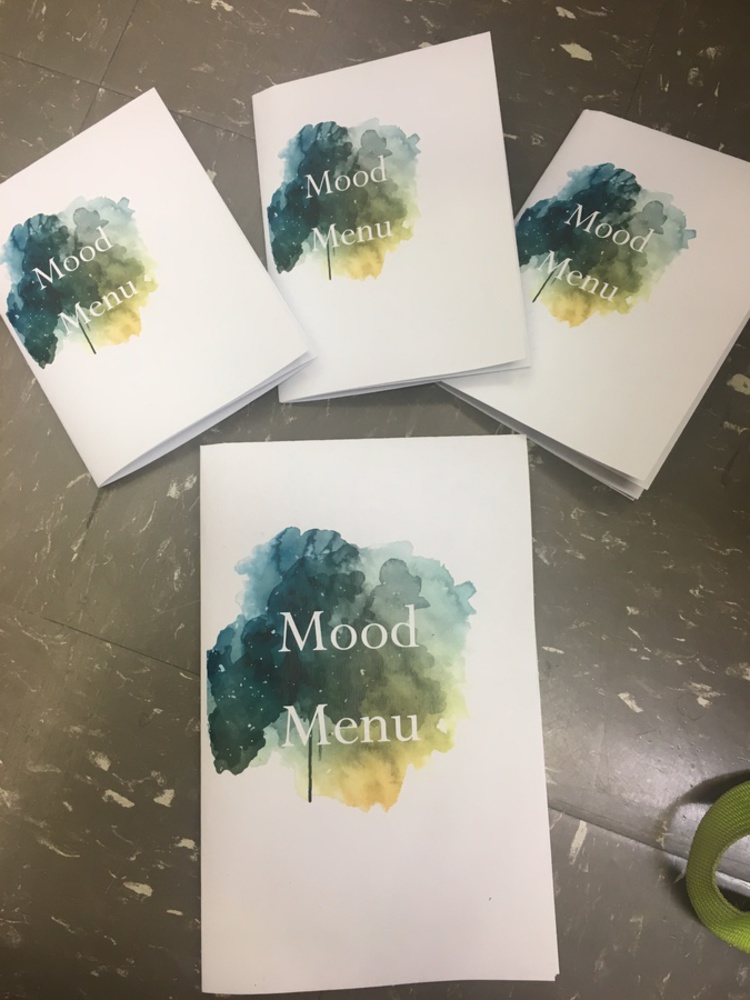

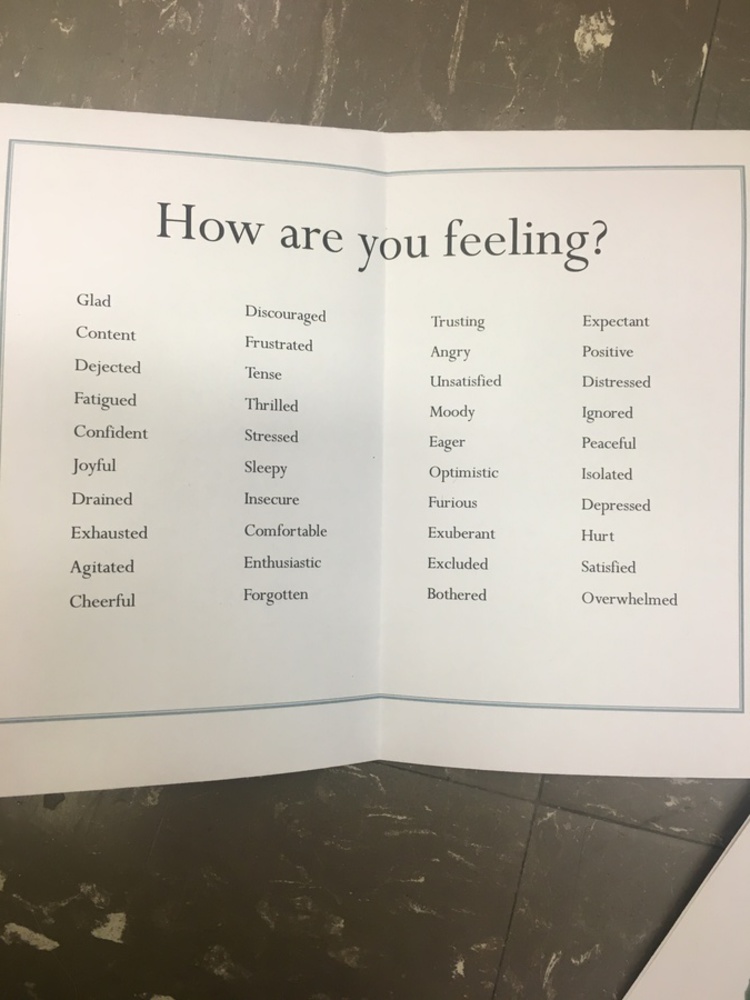

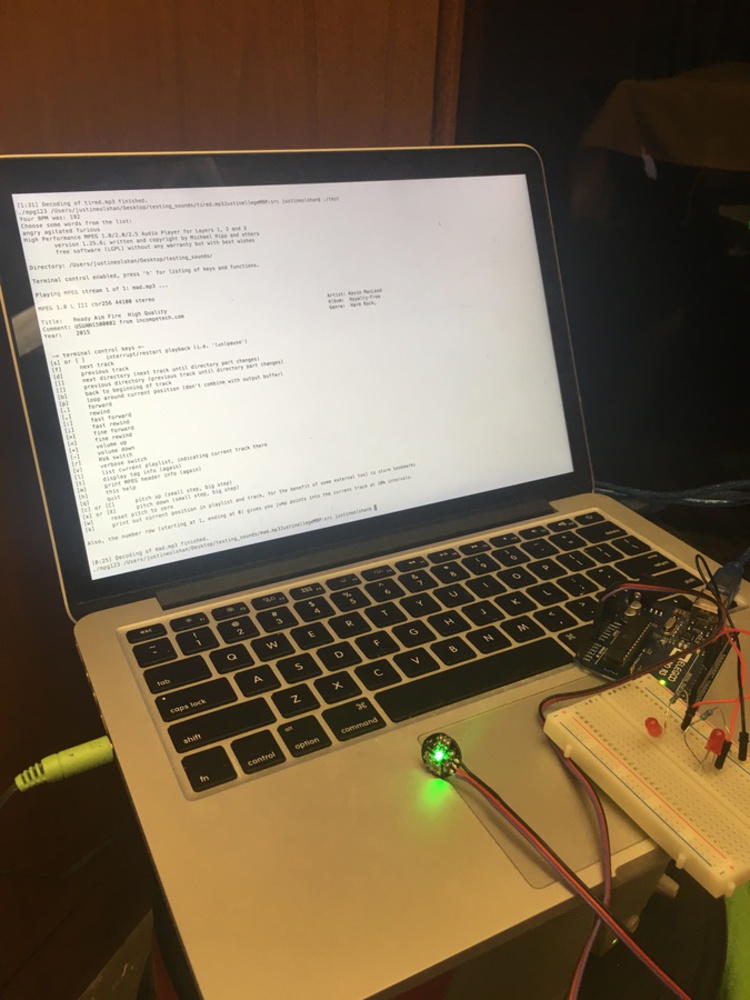

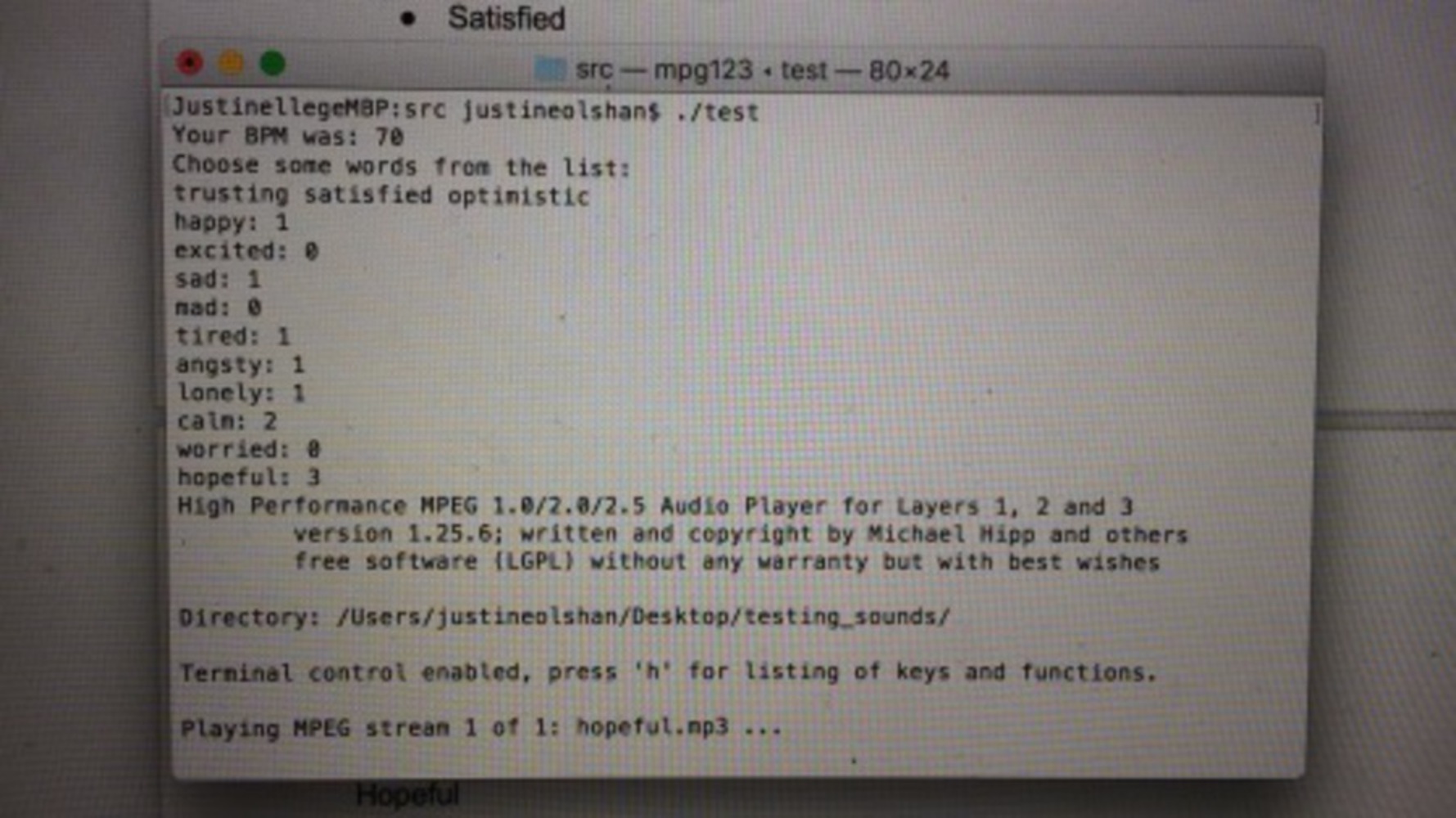

To determine the user’s feeling, we first measured the user’s heart rate with the pulse sensor and the Arduino. Most of this computation was already done by the PulseSensor_Amped_Arduino code. We then let the user enter words from the mood menu that they felt best represented their mood. From these inputs, we assigned points to 10 main mood categories. For heart rate, if the BPM was over 100, we added a point to each mood associated with an elevated heart rate; if it was under 100, we added a point to each of the other moods. Then, for each word the user entered that was on the mood menu, we added a point to the mood category associated with that word. Finally, we found the mood category with the most points and played the song in our files associated with that mood.
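The scoring steps described above can be sketched roughly as follows. This is a minimal Python illustration, not the code we ran. The mood names, menu words, and song file names are hypothetical placeholders, and only six categories are shown instead of the 10 the project used. How a BPM of exactly 100 and ties between categories are handled are also assumptions, since neither is specified above.

```python
import subprocess

# Hypothetical categories; the project used 10, whose names are not listed here.
MOODS = ["happy", "excited", "stressed", "angry", "calm", "sad"]

# Assumption: which moods count as "associated with elevated heart rate".
ELEVATED = {"excited", "stressed", "angry"}

# Hypothetical mood-menu words and the category each one belongs to.
WORD_TO_MOOD = {
    "joyful": "happy",
    "thrilled": "excited",
    "anxious": "stressed",
    "furious": "angry",
    "peaceful": "calm",
    "lonely": "sad",
}

# Hypothetical file names, one song per category.
SONG_FOR_MOOD = {mood: f"{mood}.mp3" for mood in MOODS}

BPM_THRESHOLD = 100


def score_moods(bpm, words):
    """Return a dict of points per mood from heart rate and menu words."""
    scores = {mood: 0 for mood in MOODS}

    # Heart rate: one point to every mood on the matching side of the threshold.
    # Assumption: a BPM of exactly 100 is treated as not elevated.
    elevated = bpm > BPM_THRESHOLD
    for mood in MOODS:
        if (mood in ELEVATED) == elevated:
            scores[mood] += 1

    # Words: one point per entered word that appears on the mood menu.
    for word in words:
        mood = WORD_TO_MOOD.get(word.strip().lower())
        if mood is not None:
            scores[mood] += 1
    return scores


def pick_mood(scores):
    """Return the highest-scoring mood; ties go to the earlier entry in MOODS (assumption)."""
    return max(MOODS, key=lambda mood: scores[mood])


def play_song(mood):
    """Play the song for a mood, assuming mpg123 is installed and on the PATH."""
    subprocess.run(["mpg123", SONG_FOR_MOOD[mood]], check=True)
```

For example, `pick_mood(score_moods(110, ["anxious"]))` selects `"stressed"` under these placeholder tables, and passing that result to `play_song` would hand the matching file to mpg123.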

Sources for code:

mpg123-1.25.6 : https://www.mpg123.de

WorldFamousElectronics/PulseSensor_Amped_Arduino : https://github.com/WorldFamousElectronics/PulseSensor_Amped_Arduino

Other coding help : http://forum.arduino.cc/index.php?topic=306198.0

If we had more time, we would develop the project further in a few ways. We would do a more in-depth study of how heart rate correlates with mood. We would also try using word associations rather than explicit feeling words for the user input (for example, “umbrella” might correspond to sad), which would require more research into words that have actually been shown to be associated with various moods. Another idea was to let heart rate control the beats per minute of the music, or to add lights to the space that flash in a pattern determined by the beat of the song.