Presence

2023

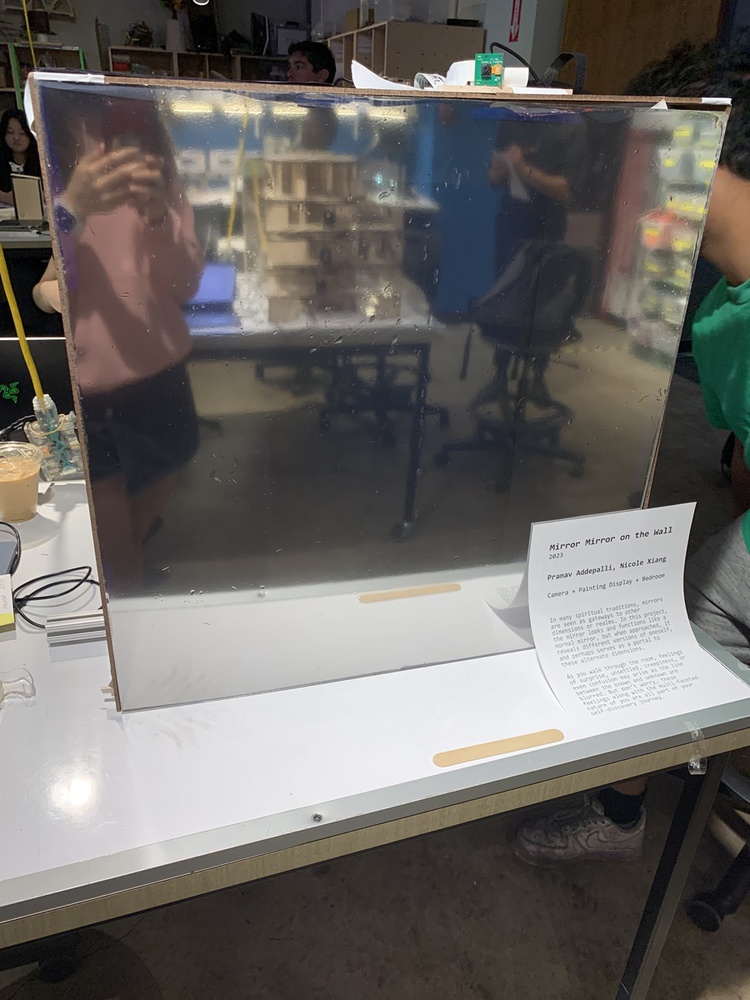

Pranav Addepalli, Nicole Xiang

Credits

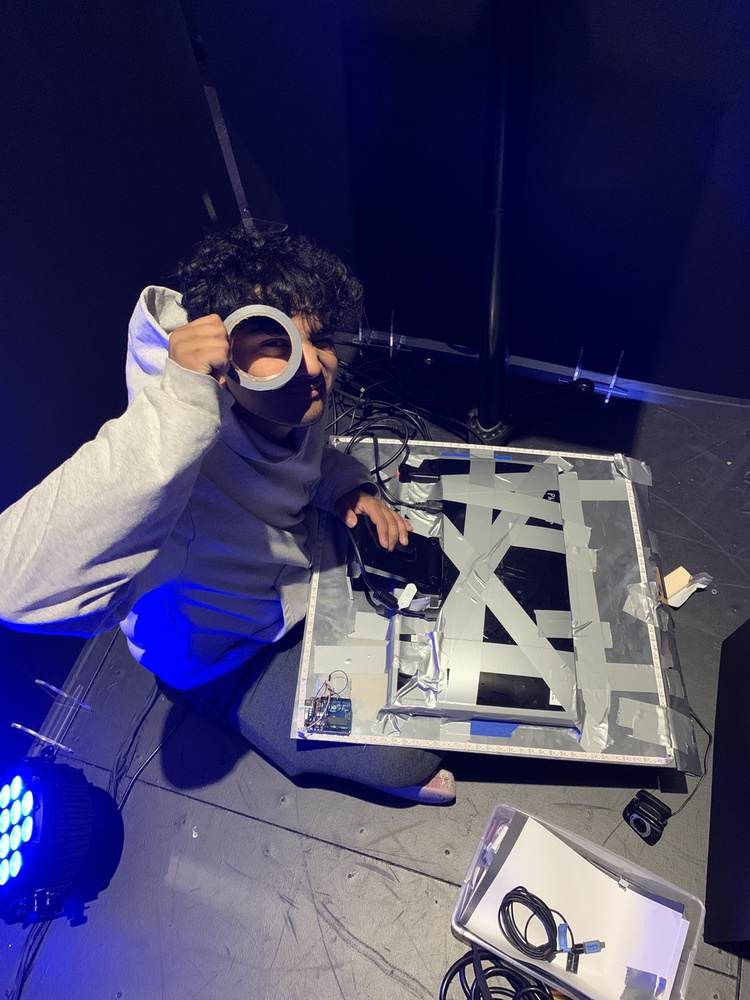

This project was built by Pranav Addepalli and Nicole Xiang in the 2023 edition of 48-528: Responsive Mobile Environments. Pranav and Nicole are both rising seniors studying Information Systems and Human-Computer Interaction. Special thanks go to the professor, Daragh Byrne, and the teaching assistant, Zhenfang Chen, for their support throughout this project.

Description

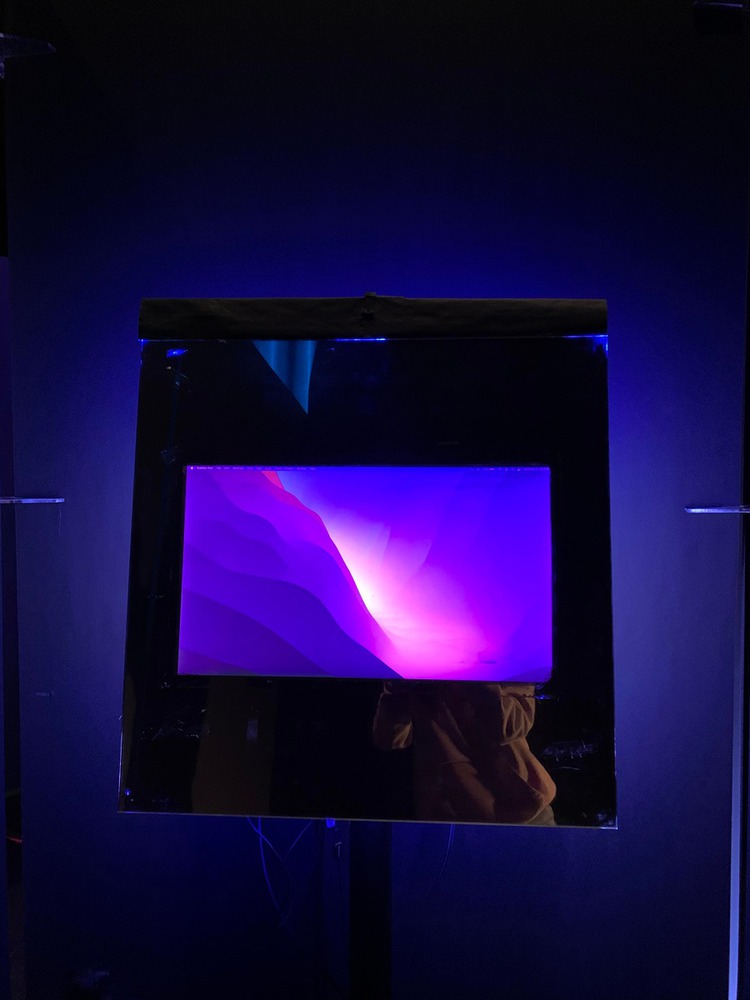

Presence aims to question the way we interact with everyday objects. Mirrors are rooted in reality: we take for granted that they reflect the present moment. Especially in today's fast-paced world, our presence, or state of existing, is often overlooked as we focus on everything that is happening or about to happen. But have you thought about what shapes this current state?

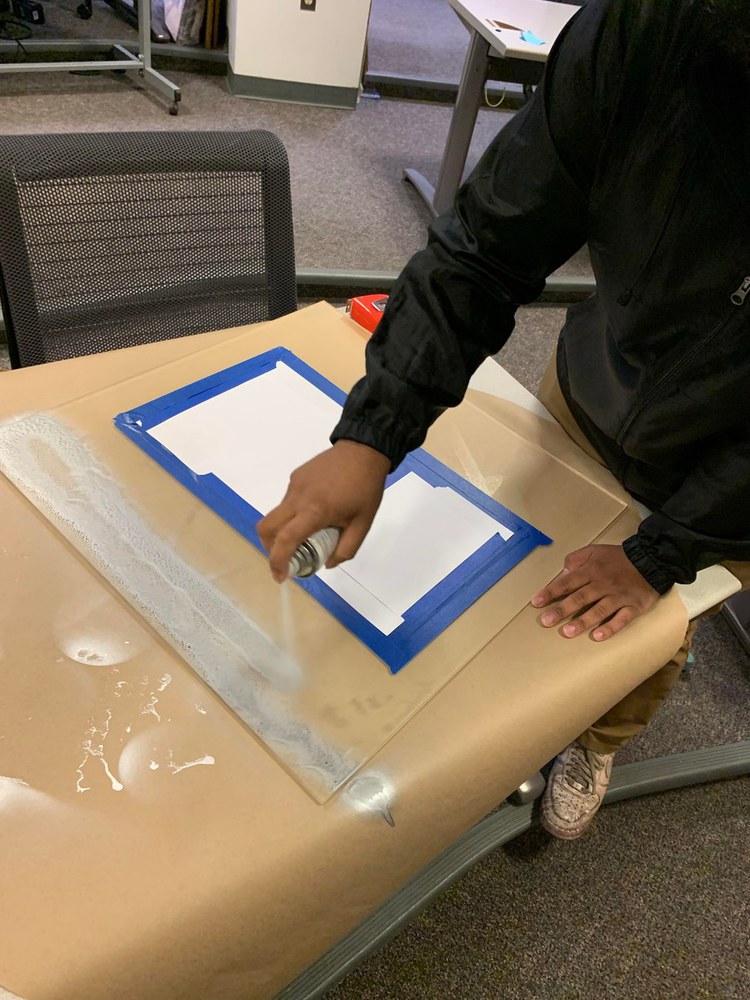

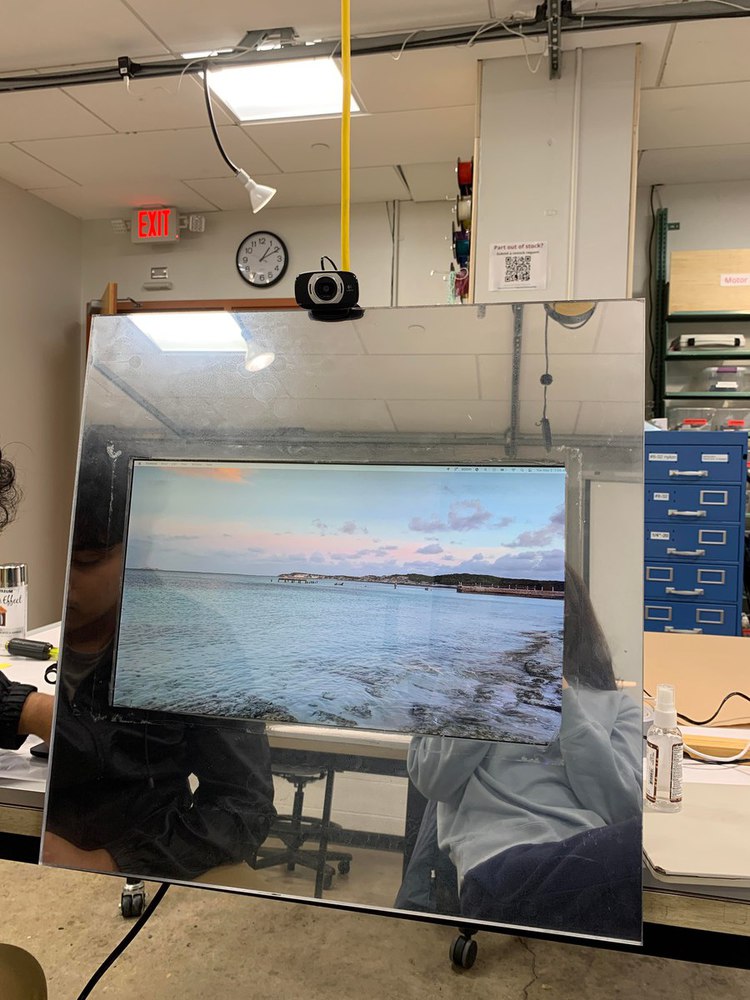

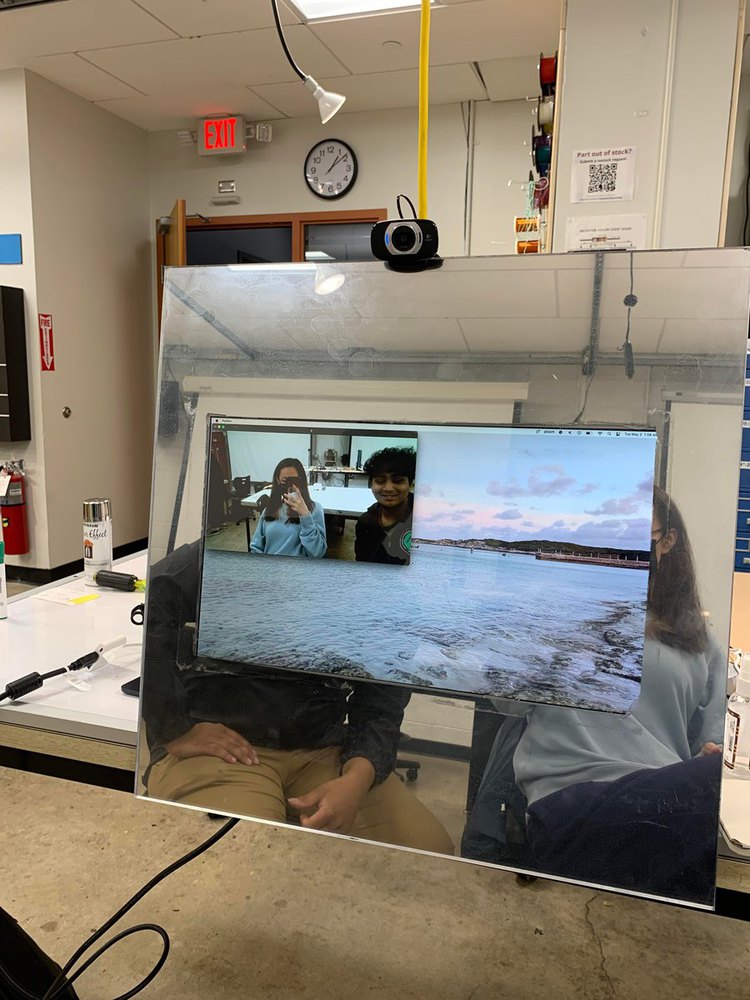

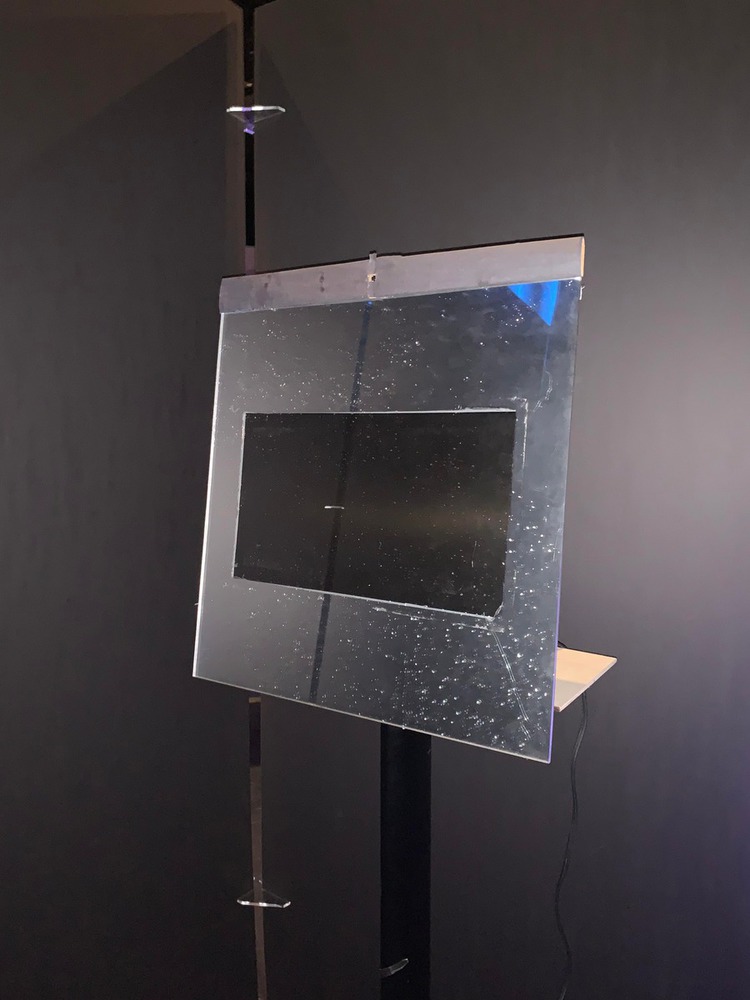

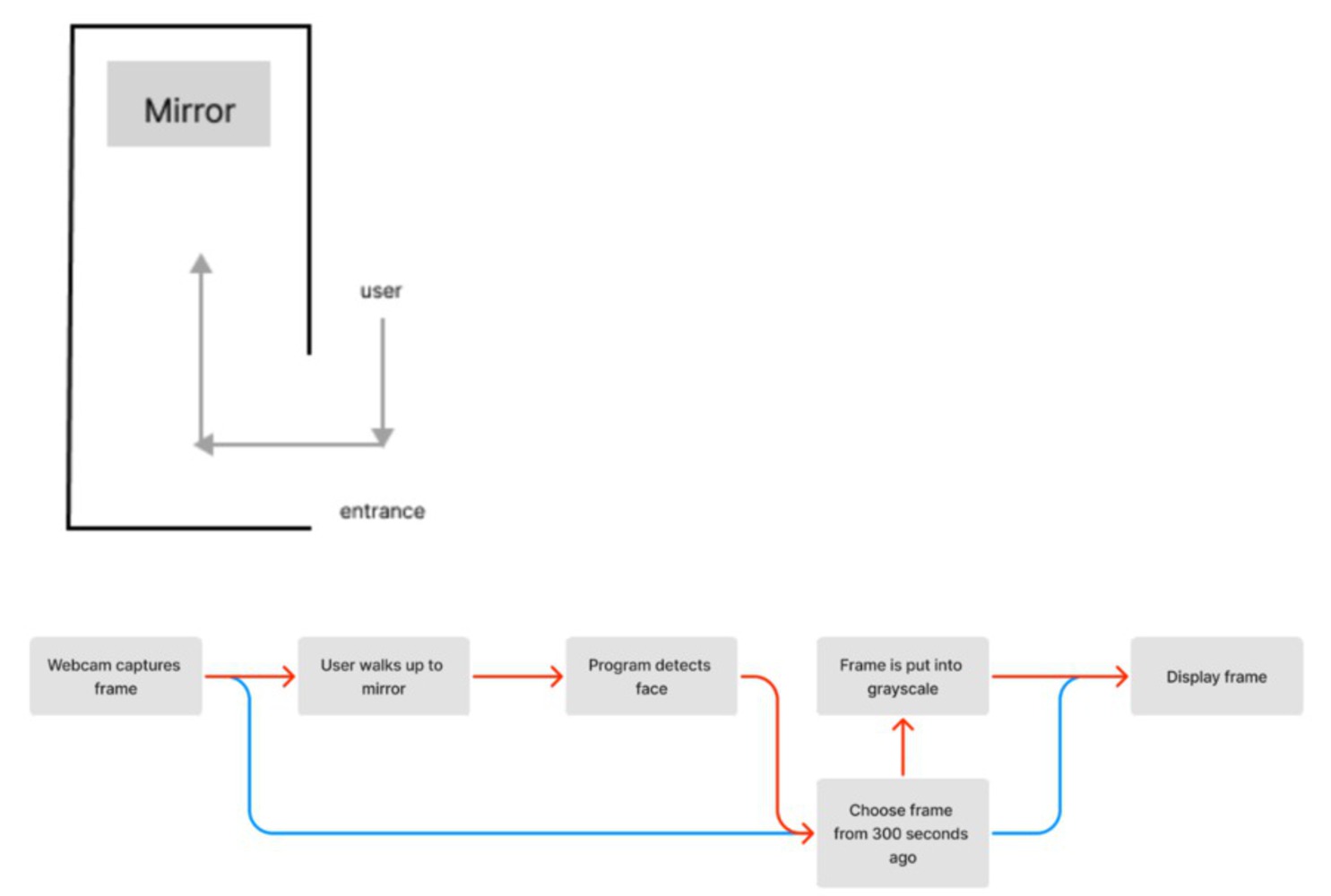

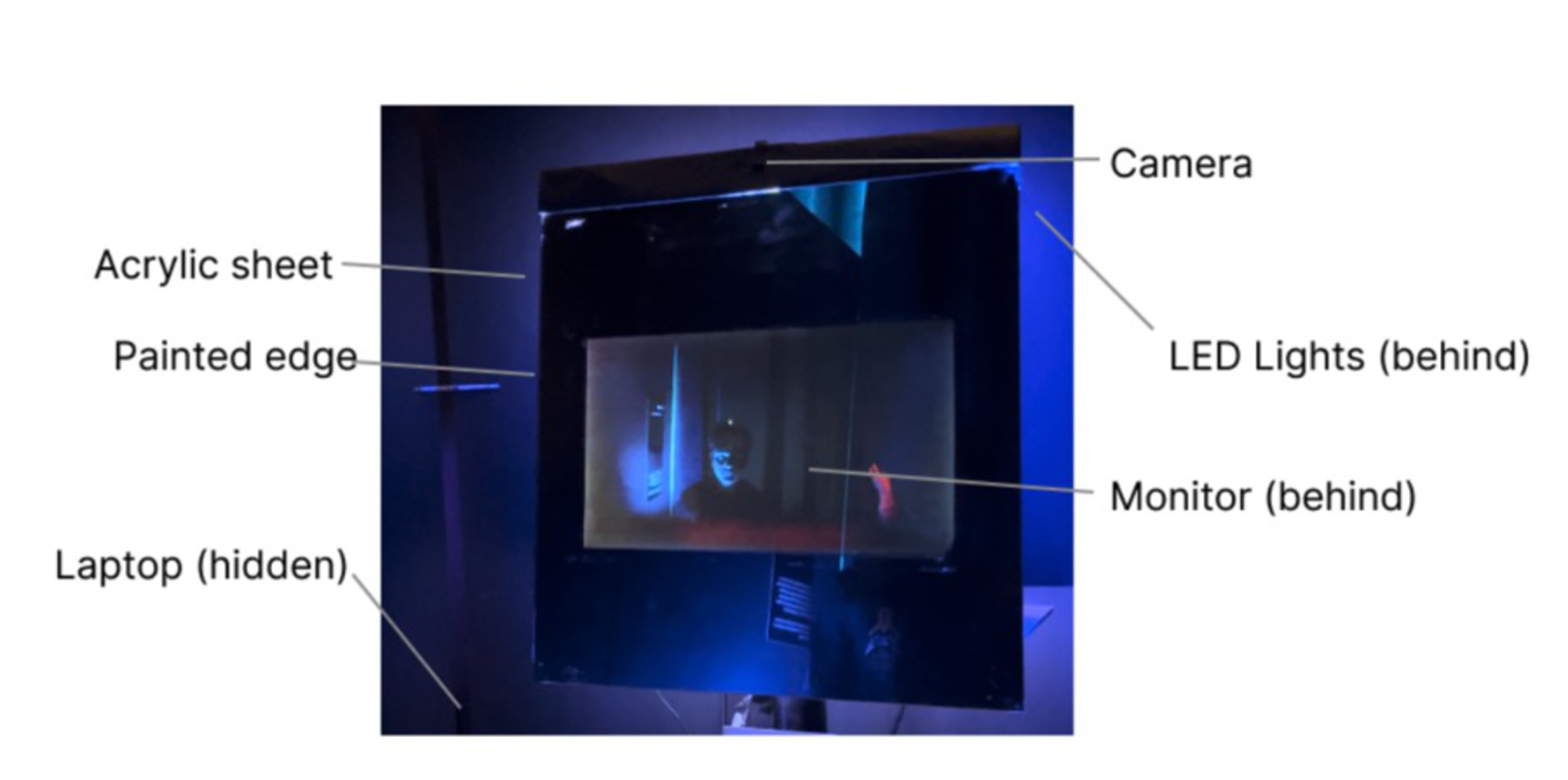

Our project explores the concept of relativity and aims to show that the past and the present are interdependent. It consists of a mirror with a built-in display. When someone walks up to it and is detected by a hidden camera, the display shows the person reflected as in a mirror, but delayed in time and in grayscale. This intentional delay changes the way we interact with ordinary mirrors: rather than seeing yourself as you are now, you see the earlier version of you that led to this new perception of yourself.
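The delayed, grayscale reflection can be thought of as a frame delay line. The following is a minimal sketch, not the authors' implementation. It assumes that camera frames arrive as rows of (R, G, B) tuples, that a separate detector reports whether a person is present, and that ITU-R BT.601 luma weights are an acceptable grayscale conversion. `DelayedMirror`, its `delay` parameter, and the helper functions are hypothetical names used only for illustration.

```python
from collections import deque
from typing import Deque, List, Optional, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255 (assumed frame format)
Frame = List[List[Pixel]]     # rows of pixels
GrayFrame = List[List[int]]   # rows of luminance values, 0-255


def to_grayscale(frame: Frame) -> GrayFrame:
    """Convert an RGB frame to grayscale using ITU-R BT.601 luma weights."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in frame
    ]


def mirror(frame: GrayFrame) -> GrayFrame:
    """Flip each row horizontally so a camera image reads like a reflection."""
    return [list(reversed(row)) for row in frame]


class DelayedMirror:
    """Hypothetical delay line: outputs the frame captured `delay` frames ago."""

    def __init__(self, delay: int) -> None:
        if delay < 1:
            raise ValueError("delay must be at least 1 frame")
        # Holds the newest frame plus `delay` older ones; the oldest is shown.
        self.buffer: Deque[GrayFrame] = deque(maxlen=delay + 1)

    def reset(self) -> None:
        """Clear the history, e.g. when nobody is in front of the camera."""
        self.buffer.clear()

    def push(self, frame: Frame, person_present: bool) -> Optional[GrayFrame]:
        """Add a camera frame and return the delayed, mirrored grayscale frame.

        Returns None when nobody is detected or while the buffer is still
        filling; the display could stay dark (or act as a plain mirror) then.
        """
        if not person_present:
            self.reset()
            return None
        self.buffer.append(mirror(to_grayscale(frame)))
        if len(self.buffer) < self.buffer.maxlen:
            return None
        return self.buffer[0]
```

At an assumed 30 frames per second, `DelayedMirror(delay=90)` would show each visitor roughly three seconds in the past. Clearing the buffer whenever nobody is detected keeps a new visitor from briefly seeing the previous one.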

Video demo: https://vimeo.com/824940337?share=copy