Ever jealous of those babies who get to know their prosperous career path through picking out of a pile of toys placed conveniently in front of them and then celebrated? Participate in the mid-life doljabi… and this time “hack” the system because as an adult, you know the meaning behind different symbols… or so you think. Technology and innovation bringing unprecedented change and uncertainty to the foreseeable future? Tell me something I don’t know.

Created: March 31st, 2023

Intent

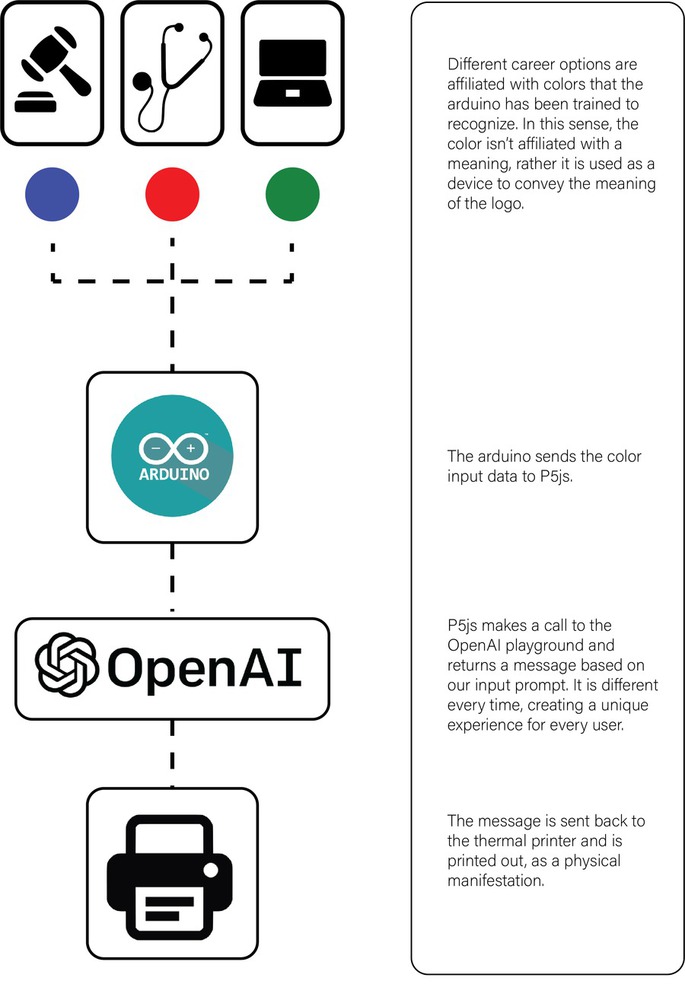

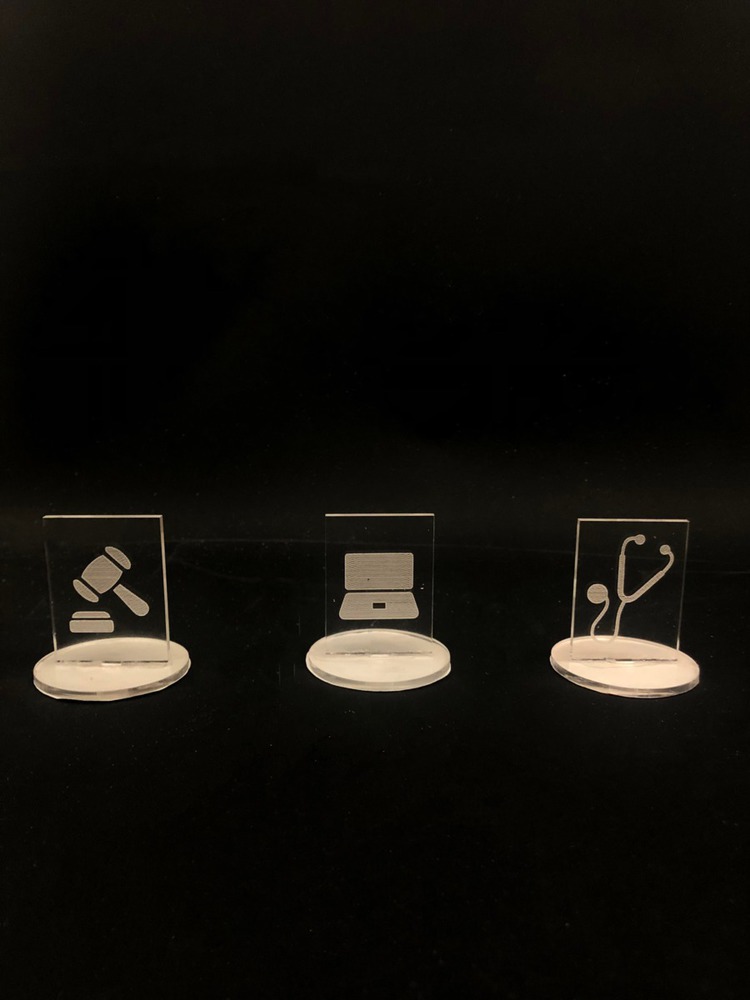

The intent of this project is to confront the changing relationship people have with their career expectations in light of the increasing role of technology and artificial intelligence in their lives. Taking familial expectations of career choices into account as well, we wanted to create a device that would have people reflect on their work lives: what they chose to pursue, why they chose to pursue it, and what the future might bring for that work. Doljabi is a tradition in Korean culture where a baby chooses from a series of objects, and the object they choose predicts what their future career will be. Rather than having ambiguous items with symbolic meanings, we chose to carve out clear icons that people choose for their career: a gavel for law, a stethoscope for healthcare, and a computer for computer science. This is because we wanted to subvert people’s preconceived notions of what those career paths would be. Our project offers a humorous, surprising, and unexpected perspective on what those traditional careers could evolve into in the future, in the face of a workforce that is changing technologically, but also changing in the way that we perceive and value work.

Context

A lot of our project inspiration came from the class exploration with ChatGPT and the Playground. Our project uses ChatGPT in a “madlibs”/choose-your-own-adventure fashion to fill in the blanks of a prompt, and the response is printed out, with each output being unique. After experimenting with the Playground and iterating on prompt wording, we came to understand the limitations and affordances of using ChatGPT.
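The madlibs idea can be sketched as simple placeholder substitution. This is a minimal illustration in standard C++, not the project's actual prompt code: the function name `fillPrompt`, the `{career}`-style placeholder syntax, and the example template wording are all assumptions made for this sketch.

```cpp
#include <cstddef>
#include <map>
#include <string>

// Hypothetical helper: replaces every "{name}" placeholder in tmpl with the
// matching value from blanks. Placeholders without a matching key are left as-is.
std::string fillPrompt(const std::string& tmpl,
                       const std::map<std::string, std::string>& blanks) {
    std::string out = tmpl;
    for (const auto& kv : blanks) {
        const std::string key = "{" + kv.first + "}";
        std::size_t pos = 0;
        while ((pos = out.find(key, pos)) != std::string::npos) {
            out.replace(pos, key.size(), kv.second);
            // Skip past the inserted text so a value containing the key
            // cannot cause an endless loop.
            pos += kv.second.size();
        }
    }
    return out;
}
```

For example, calling `fillPrompt("Describe the future of {career} in {year}.", {{"career", "law"}, {"year", "2050"}})` would yield a prompt with both blanks filled, which could then be sent to the language model.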

We were inspired by the e-waste project to include commentary on the future of technology in our concept.

Our concept was also driven by exercises in finding cultural rituals, which is where we eventually found the doljabi.

```cpp
/* Edge Impulse ingestion SDK
 * Copyright (c) 2022 EdgeImpulse Inc.
 *
 * Licensed under the Apache License, Version 2.0 (the "License");
 * you may not use this file except in compliance with the License.
 * You may obtain a copy of the License at
 * http://www.apache.org/licenses/LICENSE-2.0
 *
 * Unless required by applicable law or agreed to in writing, software
 * distributed under the License is distributed on an "AS IS" BASIS,
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and
 * limitations under the License.
 *
 */

/* Includes ---------------------------------------------------------------- */
#include <string.h> // strlen, strstr, strtok, memset, strncpy
#include <investigation2FINAL_inferencing.h>
#include <Arduino_LSM9DS1.h>  //Click here to get the library: http://librarymanager/All#Arduino_LSM9DS1
#include <Arduino_LPS22HB.h>  //Click here to get the library: http://librarymanager/All#Arduino_LPS22HB
#include <Arduino_HTS221.h>   //Click here to get the library: http://librarymanager/All#Arduino_HTS221
#include <Arduino_APDS9960.h> //Click here to get the library: http://librarymanager/All#Arduino_APDS9960

enum sensor_status {
    NOT_USED = -1,
    NOT_INIT,
    INIT,
    SAMPLED
};

/** Struct to link sensor axis name to sensor value function */
typedef struct {
    const char *name;
    float *value;
    uint8_t (*poll_sensor)(void);
    bool (*init_sensor)(void);
    sensor_status status;
} eiSensors;

/* Constant defines -------------------------------------------------------- */
#define CONVERT_G_TO_MS2 9.80665f
// Starting 03/2022, models are generated with the range set to +-2g,
// but this example uses the Arduino library, which sets the range to +-4g.
// If you are using an older model, ignore this value and use 4.0f instead.
#define MAX_ACCEPTED_RANGE 2.0f

/** Number of sensor axes used (size of the data and fusion buffers) */
#define N_SENSORS 18

/* Forward declarations ------------------------------------------------------- */
float ei_get_sign(float number);

bool init_IMU(void);
bool init_HTS(void);
bool init_BARO(void);
bool init_APDS(void);

uint8_t poll_acc(void);
uint8_t poll_gyr(void);
uint8_t poll_mag(void);
uint8_t poll_HTS(void);
uint8_t poll_BARO(void);
uint8_t poll_APDS_color(void);

/* Private variables ------------------------------------------------------- */
static const bool debug_nn = false; // Set this to true to see e.g. features generated from the raw signal

static float data[N_SENSORS];
static bool ei_connect_fusion_list(const char *input_list);

static int8_t fusion_sensors[N_SENSORS];
static int fusion_ix = 0;

/** Used sensors value function connected to label name */
eiSensors sensors[] =
{
    "accX", &data[0], &poll_acc, &init_IMU, NOT_USED,
    "accY", &data[1], &poll_acc, &init_IMU, NOT_USED,
    "accZ", &data[2], &poll_acc, &init_IMU, NOT_USED,
    "gyrX", &data[3], &poll_gyr, &init_IMU, NOT_USED,
    "gyrY", &data[4], &poll_gyr, &init_IMU, NOT_USED,
    "gyrZ", &data[5], &poll_gyr, &init_IMU, NOT_USED,
    "magX", &data[6], &poll_mag, &init_IMU, NOT_USED,
    "magY", &data[7], &poll_mag, &init_IMU, NOT_USED,
    "magZ", &data[8], &poll_mag, &init_IMU, NOT_USED,

    "temperature", &data[9], &poll_HTS, &init_HTS, NOT_USED,
    "humidity", &data[10], &poll_HTS, &init_HTS, NOT_USED,

    "pressure", &data[11], &poll_BARO, &init_BARO, NOT_USED,

    "red", &data[12], &poll_APDS_color, &init_APDS, NOT_USED,
    "green", &data[13], &poll_APDS_color, &init_APDS, NOT_USED,
    "blue", &data[14], &poll_APDS_color, &init_APDS, NOT_USED,
};

/**
 * @brief Arduino setup function
 */
void setup()
{
    /* Init serial */
    Serial.begin(115200);
    // comment out the below line to cancel the wait for USB connection (needed for native USB)
    while (!Serial);
    Serial.println("Edge Impulse Sensor Fusion Inference\r\n");

    /* Connect used sensors */
    if (ei_connect_fusion_list(EI_CLASSIFIER_FUSION_AXES_STRING) == false) {
        ei_printf("ERR: Errors in sensor list detected\r\n");
        return;
    }

    /* Init & start sensors */
    for (int i = 0; i < fusion_ix; i++) {
        if (sensors[fusion_sensors[i]].status == NOT_INIT) {
            sensors[fusion_sensors[i]].status = (sensor_status)sensors[fusion_sensors[i]].init_sensor();
            if (!sensors[fusion_sensors[i]].status) {
                ei_printf("%s axis sensor initialization failed.\r\n", sensors[fusion_sensors[i]].name);
            }
            else {
                ei_printf("%s axis sensor initialization successful.\r\n", sensors[fusion_sensors[i]].name);
            }
        }
    }
}

/**
 * @brief Get data and run inferencing
 */
void loop()
{
    ei_printf("\nStarting inferencing in 2 seconds...\r\n");

    delay(2000);

    if (EI_CLASSIFIER_RAW_SAMPLES_PER_FRAME != fusion_ix) {
        ei_printf("ERR: Sensors don't match the sensors required in the model\r\n"
                  "Following sensors are required: %s\r\n", EI_CLASSIFIER_FUSION_AXES_STRING);
        return;
    }

    ei_printf("Sampling...\r\n");

    // Allocate a buffer here for the values we'll read from the sensor
    float buffer[EI_CLASSIFIER_DSP_INPUT_FRAME_SIZE] = { 0 };

    for (size_t ix = 0; ix < EI_CLASSIFIER_DSP_INPUT_FRAME_SIZE; ix += EI_CLASSIFIER_RAW_SAMPLES_PER_FRAME) {
        // Determine the next tick (and then sleep later)
        int64_t next_tick = (int64_t)micros() + ((int64_t)EI_CLASSIFIER_INTERVAL_MS * 1000);

        for (int i = 0; i < fusion_ix; i++) {
            if (sensors[fusion_sensors[i]].status == INIT) {
                sensors[fusion_sensors[i]].poll_sensor();
                sensors[fusion_sensors[i]].status = SAMPLED;
            }
            if (sensors[fusion_sensors[i]].status == SAMPLED) {
                buffer[ix + i] = *sensors[fusion_sensors[i]].value;
                sensors[fusion_sensors[i]].status = INIT;
            }
        }

        int64_t wait_time = next_tick - (int64_t)micros();

        if (wait_time > 0) {
            delayMicroseconds(wait_time);
        }
    }

    // Turn the raw buffer into a signal which we can then classify
    signal_t signal;
    int err = numpy::signal_from_buffer(buffer, EI_CLASSIFIER_DSP_INPUT_FRAME_SIZE, &signal);
    if (err != 0) {
        ei_printf("ERR:(%d)\r\n", err);
        return;
    }

    // Run the classifier
    ei_impulse_result_t result = { 0 };

    err = run_classifier(&signal, &result, debug_nn);
    if (err != EI_IMPULSE_OK) {
        ei_printf("ERR:(%d)\r\n", err);
        return;
    }

    // Print the predictions
    ei_printf("Predictions (DSP: %d ms., Classification: %d ms., Anomaly: %d ms.):\r\n",
              result.timing.dsp, result.timing.classification, result.timing.anomaly);
    for (size_t ix = 0; ix < EI_CLASSIFIER_LABEL_COUNT; ix++) {
        ei_printf("%s: %.5f\r\n", result.classification[ix].label, result.classification[ix].value);
    }
#if EI_CLASSIFIER_HAS_ANOMALY == 1
    ei_printf(" anomaly score: %.3f\r\n", result.anomaly);
#endif
}

#if !defined(EI_CLASSIFIER_SENSOR) || (EI_CLASSIFIER_SENSOR != EI_CLASSIFIER_SENSOR_FUSION && EI_CLASSIFIER_SENSOR != EI_CLASSIFIER_SENSOR_ACCELEROMETER)
#error "Invalid model for current sensor"
#endif

/**
 * @brief Go through sensor list to find matching axis name
 *
 * @param axis_name
 * @return int8_t index in sensor list, -1 if axis name is not found
 */
static int8_t ei_find_axis(char *axis_name)
{
    // Iterate over the entries actually present in sensors[] (15 here) rather
    // than N_SENSORS (18), so the loop never reads past the end of the table.
    const int n_entries = sizeof(sensors) / sizeof(sensors[0]);

    for (int ix = 0; ix < n_entries; ix++) {
        if (strstr(axis_name, sensors[ix].name)) {
            return ix;
        }
    }
    return -1;
}

/**
 * @brief Check if requested input list is valid sensor fusion, create sensor buffer
 *
 * @param[in] input_list Axes list to sample (ie. "accX + gyrY + magZ")
 * @retval false if invalid sensor_list
 */
static bool ei_connect_fusion_list(const char *input_list)
{
    char *buff;
    bool is_fusion = false;

    /* Copy const string in heap mem */
    char *input_string = (char *)ei_malloc(strlen(input_list) + 1);
    if (input_string == NULL) {
        return false;
    }
    memset(input_string, 0, strlen(input_list) + 1);
    strncpy(input_string, input_list, strlen(input_list));

    /* Clear fusion sensor list */
    memset(fusion_sensors, 0, N_SENSORS);
    fusion_ix = 0;

    buff = strtok(input_string, "+");

    while (buff != NULL) { /* Run through buffer */
        int8_t found_axis = 0;

        is_fusion = false;
        found_axis = ei_find_axis(buff);

        if (found_axis >= 0) {
            if (fusion_ix < N_SENSORS) {
                fusion_sensors[fusion_ix++] = found_axis;
                sensors[found_axis].status = NOT_INIT;
            }
            is_fusion = true;
        }

        buff = strtok(NULL, "+ ");
    }

    ei_free(input_string);

    return is_fusion;
}

/**
 * @brief Return the sign of the number
 *
 * @param number
 * @return float 1 if positive (or 0), -1 if negative
 */
float ei_get_sign(float number) {
    return (number >= 0.0) ? 1.0 : -1.0;
}

bool init_IMU(void) {
    static bool init_status = false;
    if (!init_status) {
        init_status = IMU.begin();
    }
    return init_status;
}

bool init_APDS(void) {
    static bool init_status = false;
    if (!init_status) {
        init_status = APDS.begin();
    }
    return init_status;
}

uint8_t poll_APDS_color(void) {
    int temp_data[4];
    if (APDS.colorAvailable()) {
        // readColor fills red, green, blue and ambient (clear) light values
        APDS.readColor(temp_data[0], temp_data[1], temp_data[2], temp_data[3]);

        data[12] = temp_data[0];
        data[13] = temp_data[1];
        data[14] = temp_data[2];
        data[15] = temp_data[3];
    }
    return 0; // the function is declared uint8_t, so it must return a value
}

// The definitions of init_HTS, init_BARO, poll_acc, poll_gyr, poll_mag,
// poll_HTS and poll_BARO (declared above) are not included in this listing.
```

Our project evolved a lot over the course of its development. We began by thinking about how to have an ongoing conversation with ChatGPT, and decided on a choose-your-own-adventure (CYOA) mystical story-writing concept. However, after realizing that the back-and-forth aspect of ChatGPT was technically out of scope for the project, we thought about how to scale back. Eventually, we landed on the current state of the project, a “subverting-career-future-storytelling” device. Although a lot changed in the process, we retained two ideas: using ChatGPT in a madlibs prompt fashion, and using color sensing to “magically” encode arbitrary tokens.

We also learned a lot through the technical development and making process. At first we thought the color sensor would be far more sensitive, so it was recommended that we add a button to control the ON/OFF state of the color sensor so that it wasn’t constantly reading. However, we realized that within our controlled box environment, the color sensor could be trained really accurately and there was no need for a button.
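One way to turn the classifier's per-label scores into a single chosen token is to take the highest-scoring label and ignore readings below a confidence threshold. The sketch below shows that idea in standard C++ under stated assumptions: the `LabelScore` struct, the `pickToken` function, and the example labels and threshold are hypothetical and are not taken from the project's trained model.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for one (label, score) pair reported by the classifier.
struct LabelScore {
    std::string label;
    float value;
};

// Returns the index of the highest-scoring label, or -1 when no label
// reaches minConfidence (for example, when no token is in the box).
int pickToken(const std::vector<LabelScore>& scores, float minConfidence) {
    int best = -1;
    float bestValue = minConfidence;
    for (std::size_t i = 0; i < scores.size(); ++i) {
        if (scores[i].value >= bestValue) {
            best = static_cast<int>(i);
            bestValue = scores[i].value;
        }
    }
    return best;
}
```

With scores such as `{"gavel", 0.05}`, `{"stethoscope", 0.90}`, `{"computer", 0.03}` and a threshold of 0.8, `pickToken` would select the stethoscope entry; raising the threshold above 0.90 would return -1 and the reading would be skipped.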

Another notable technical challenge we encountered was the printer producing dull, faint prints. After some research, we found that switching from a 5 V to a 9 V power source solved the problem.

Open Questions and Next Steps

We received a lot of meaningful feedback from the guest crits.

One interesting comment we received from Mary-Lou was about using our project to provoke questions or discomfort in users. We had found accidentally that although our prompt purposely uses neutral adjectives, it generally evokes optimistic responses. Looking at the form of our project, however, as a machine that spits out fortunes upon a tangible activation, it would be interesting to think of it almost as a “gamble”. Mary-Lou brought up the idea of making our machine almost “addictive”, something that users would get an adrenaline rush from using. A more unpredictable and provoking nature to the responses would therefore be an interesting future exploration.

Sinan also commented to the entire class about “how your project gets there”, which is an interesting thought. We could definitely play around with the context our machine exists in, and draw out a user journey that’s much more expanded in scale, considering how a user first “stumbles upon” our artifact.

Reflection

We definitely had to kill a few darlings along the way, but the project worked just well enough for us to demonstrate it smoothly and get meaningful feedback on it. By crit day, we had not been able to connect the color sensor to ChatGPT, so we Wizard-of-Oz’d the prompt to match the chosen token. However, this didn’t interfere with the user experience. We were also able to troubleshoot smaller problems that ended up having a large impact, such as printing more saturated receipt slips and adjusting the placement of the strip printing to be more ergonomic.
