Cast your wish in the well, for a price. Instead of coins, Wishwell deals in personal data to foster intuition and anchor your internal voice. Create your own destiny and let Wishwell help you manifest your ideal futures.

Created: March 23rd, 2023

Intent

Cast your wish in the well, for a price. Instead of coins, Wishwell deals in information. It helps bring your dreams for the future to fruition, provided you’re willing to share a piece of yourself.

Current algorithmic “predictions” are based on past histories, rooted in what has already occurred. Will we let these devices tell us what to expect and what we should look forward to? Or is there an opportunity to make our own fate? If we can’t ever truly know the future, can we at least actively shape it?

Wishwell highlights the pitfalls of machine prediction and dictation, but builds on latent fears and desires to foster guided intuition and anchor your internal voice. Create your own destiny with Wishwell, and let it translate what the universe is trying to help you manifest.

Context

Precedent

Auger and Loizeau’s Real Prediction Machines is a material speculation on the nature of prediction in the information era. We were heavily inspired by this narrative, which states that “Predicting the future is no longer about the mystical reading of natural and celestial phenomena. Today it is all about data.” But data is fallible and measured only once events have occurred. What, then, is prediction? Do we try to change it, or accept our fate? All ritualistic practices, to some extent, build on latent wants, fears, and desires. But if we claim that machine prediction, rooted in what has already happened, is not true “foresight”, could devices instead acknowledge this tension? The Believe It Yourself series from Automato.farm addresses this by presenting devices that aim to reflect our inner beliefs. In particular, BIY Hear served as guiding inspiration for this project.

Procedural Inspiration

Based on our shared interest in tasseography and perceived opportunity in this metaphorical angle, we decided to attempt integrating this reference within the constraints of our assigned input and output. At the core, tasseography involves:

- A ritualistic sequence

- A substrate with fluid behaviors and form

- Activation by an individual

- An ambiguous visual output to be interpreted

We gravitated towards tea-reading in part because the open-ended nature of the medium requires creative interpretation, rather than providing the querent with established sets of discrete outcomes. We also found great meaning in the ritual of tasseography and in the careful investment and intimacy fostered by the meditative act of making and drinking tea. To lend even more ambiguity and agency, we decided to leave room in this encounter for the actor to interpret the substrate themselves and ascribe meaning to it, rather than handing them an interpretation as an objective reality they encounter and must respond to.

We sought to avoid a one-to-one replication of tasseography practices; it would have been straightforward to mimic, but did not align with our objectives. Instead, finding creative opportunities in constraints, we decided to explore ways to use sound as a conduit for visual output, producing patterns and movement through resonant vibrations. Relevant precedents include the phenomena demonstrated by Chladni plates. Tasseography thus serves as a reference point for the Wishwell ritual.

Symbolic and Cultural Inspiration

Informed by the central idea of machine predictions parroting versions of the past, we were heavily inspired by the familiar concept and related mythology of a Wishing Well or Echoing Cave. Tea-leaf reading can be conducted by a hired practitioner or by oneself; in that regard, the role of the Medium is left open to greater interpretation. In reference to the mystical wishing well or echoing cave trope, this proposed artifact acts as the diviner and the divination prop simultaneously.

Echoing Cave

In the Echoing Cave metaphor, you speak to spirits embodied by the cave and hear voices responding with a fortune, prediction, or omen. But in actuality, what is echoing back to you is simply what you just said, from the past, distorted or reflected in a variation. Here, machine prediction is the past hitting you back, but this new metaphor adds intentional fuzziness and noise, allowing one to embed their own interpretation in the distortion.

Wishing Well

Predictive machine intelligences usually leave no room for negotiation or creative expansion: whether they attempt to finish your search query or hastily correct your spelling, the output is never truly “forecasting”. Rather than expecting this technology to tell us our futures, what if it helped us form our own good fate? Wishing wells typically activate and manifest wishes in exchange for a small price, a coin or token. In this experience, your wish is from your past (the expression of intent). Many people believe that prediction is not only objective but also comes from outside; in fact, we are the ones training these systems. In this manner, Wishwell reinforces that algorithms and predictive machine intelligences simply operate from our past histories, while creating a different version of a familiar experience and tapping into a sense of inner intuition and belief in self.

Concept

Upon approaching Wishwell, participants begin by setting an intention in their mind and adding salt to Wishwell as an offering. This stimulus incites active investment from the individual, who now begins to look for something to interpret, and invites commitment to fully engaging with the ritual. The accumulation of salt from past participants creates a history of hopes and wishes for the future, and the amount of salt you add changes the resulting behavior and the visualizations formed.

The participant then casts their wish by speaking it aloud. Due to the sensitivity of the microphone, the participant must lean in close to Wishwell to make their intention heard: a wish is typically very personal and, to become reality, must not be overheard by others. By designing Wishwell with certain material constraints, coupled with existing mental models, we aimed to facilitate an intimate interaction that might establish an enduring relationship over time. Wishwell then captures keywords from your spoken manifestation and sorts them into one of five categories, each of which maps to a distinct audio frequency, vibration, and visual pattern output. This relationship is not made explicit, however, and is intentionally veiled and distorted.
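The category lookup described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual implementation: the `WishCategory` struct, the `selectCategory` helper, and the track numbers are all hypothetical, the labels are the five thematic categories listed in the ritual guide, and the 0.7 cutoff simply mirrors the threshold used in the project sketch. The proof-of-concept demo itself was trained on only two keywords.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical table: the labels come from the ritual guide, but the track
// numbers are placeholders, not the project's actual file layout.
struct WishCategory {
  const char* label;
  int track;  // index of the tone file to play (assumed)
};

const std::array<WishCategory, 5> kCategories = {{
    {"health", 1},
    {"career", 2},
    {"wealth", 3},
    {"life purpose", 4},
    {"relationships", 5},
}};

// Returns the best-scoring category, or nullptr when no score clears the
// threshold (0.7 mirrors the cutoff used in the project sketch).
const WishCategory* selectCategory(const std::array<float, 5>& scores,
                                   float threshold = 0.7f) {
  assert(threshold >= 0.0f && threshold <= 1.0f);
  std::size_t best = 0;
  for (std::size_t i = 1; i < scores.size(); ++i) {
    if (scores[i] > scores[best]) best = i;
  }
  return scores[best] > threshold ? &kCategories[best] : nullptr;
}
```

Picking only the single best score keeps the mapping one-to-one, so a spoken wish triggers at most one tone; returning `nullptr` lets the caller stay silent when the classifier is unsure.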

To the participant, the ambiguity lends a sense of mystery, but the system’s internal logic simply processes inputs and echoes what has already been said, modeling our critique of the current backcasting of machine intelligences. Rather than drawing explicit attention to this fact, we frame the output through a lens of translation, with Wishwell serving to “translate the universe’s wisdom into visual form” to help you manifest your wish. In this way, Wishwell embodies your intuition, belief in self, and hopes and anxieties for the future.

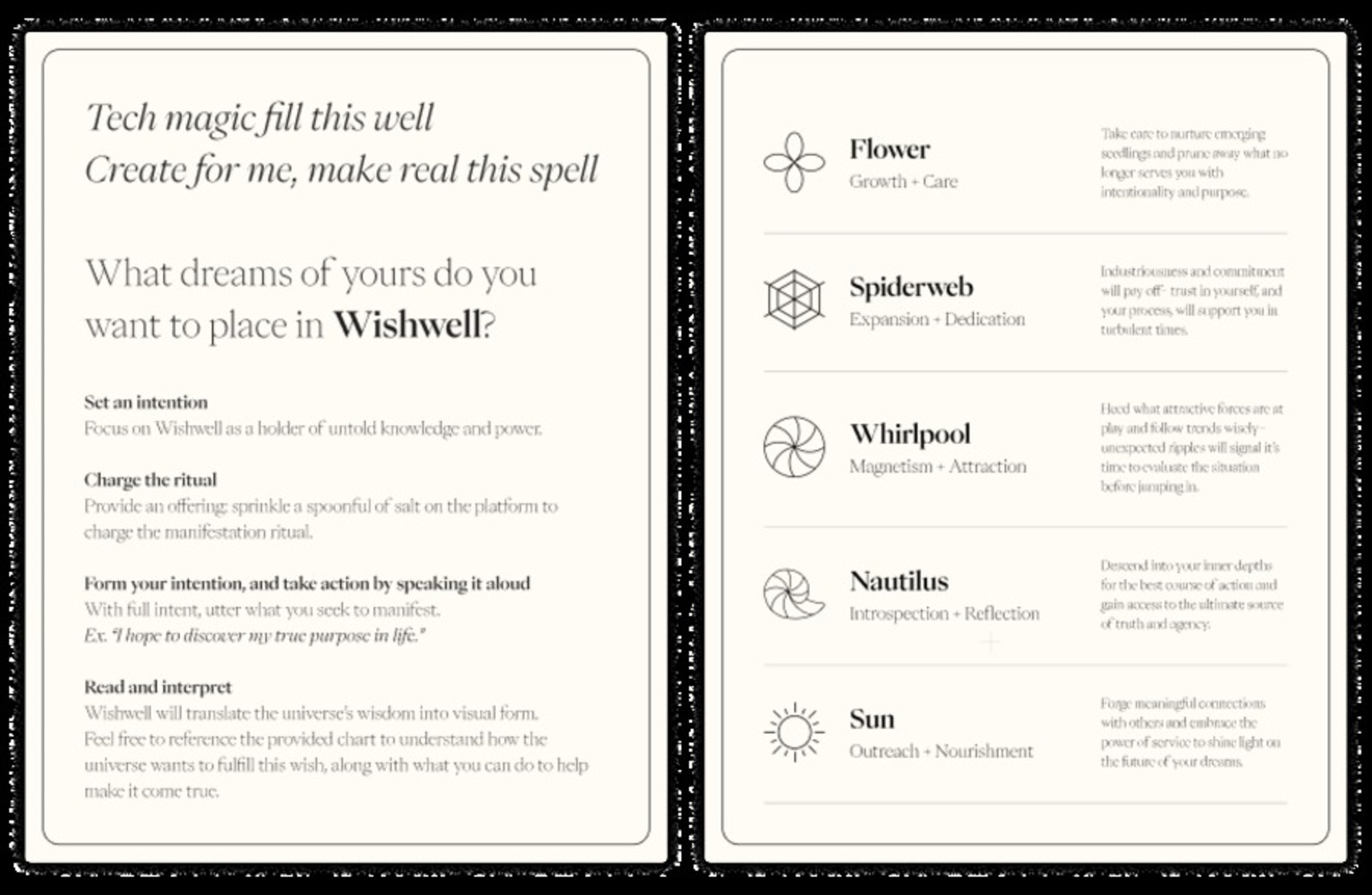

To tie the entire experience together, we created a ritual guide and chart for folks to refer to, if desired. Language and tone, iconography, and typography were carefully considered as part of the overall feel. Each symbol and its corresponding “reading” was decided based on the thematic categories the system recognizes: health, career, wealth, life purpose, and relationships, which we derived from conventional divination queries and readings.

Process

Hardware System

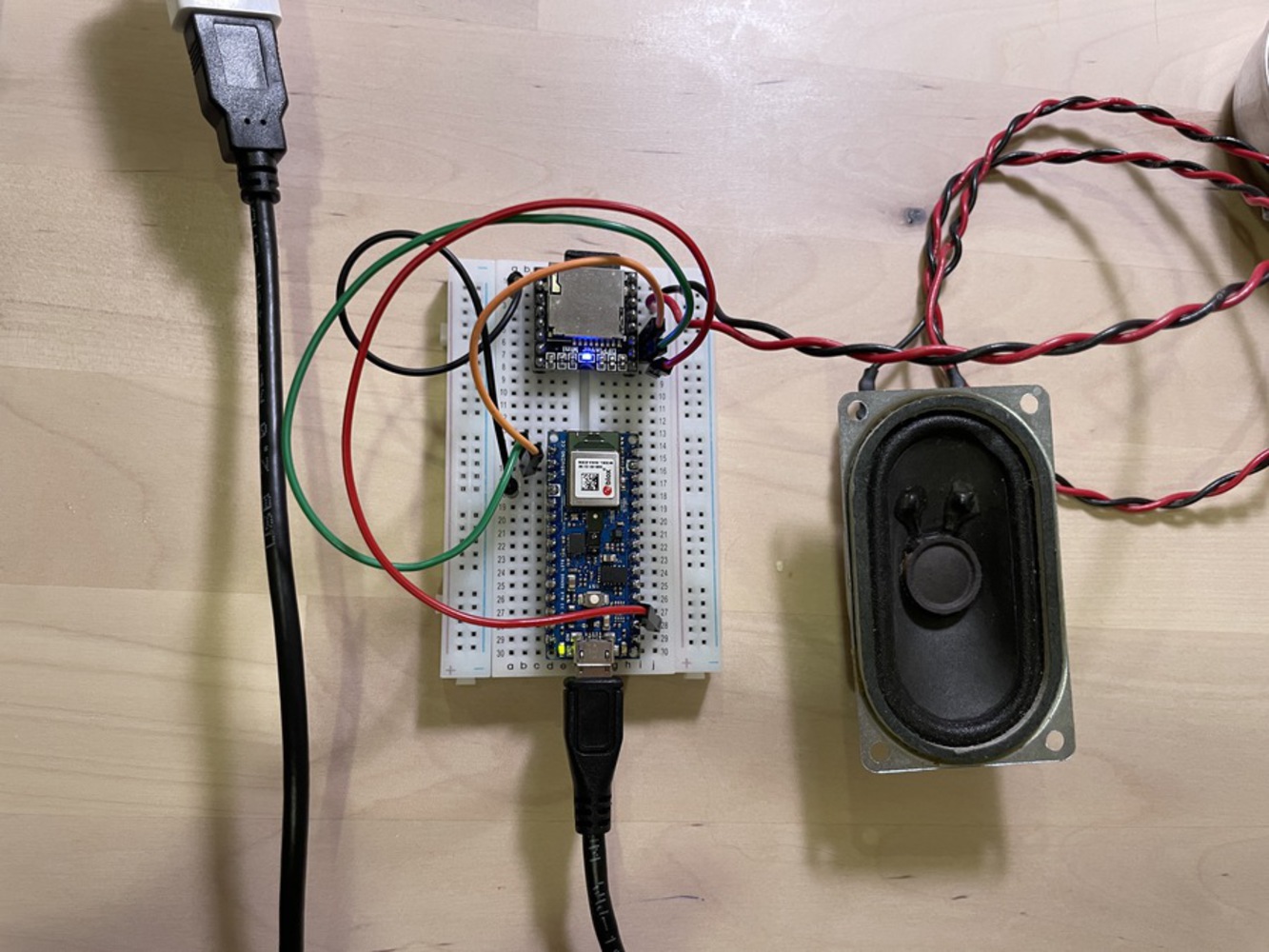

DFPlayer Mini

The DFPlayer Mini was simple to install and produced sound well enough for a small device. During development, we found that it needed to be reformatted whenever we wanted to add or replace sounds; otherwise, it remembered the previous files and played the outdated ones.

Speakers

Even though our assigned output was an MP3 player, we decided to use sound as a vibration generator. One reason is that our inspiration, tasseography, is based on visual patterns. We also expected the demonstration environment to be loud enough that the sound would be hard to hear anyway. We aimed to create vivid patterns with small particles such as salt. We tried three different speaker parts, including existing Bluetooth speakers. Finally, we came across the surface transducer, which turns any surface into a speaker. Unlike speakers designed for better sound quality, our focus was the vibration itself, and we wanted to keep any other auditory experience to a minimum. It was also easy to place the tray on top of the transducer, which let us avoid physical housing issues.

Fabrication: Laser Cutting & Housing

Physical tools and symbols are important in superstition and divination. Beyond the concept and its interactions, we both wanted Wishwell to convey the mood of the experience. Since we had learned about laser cutting during this module, this was a good chance to use it. It took longer than expected, and preparing the digital files so that the parts fit well and the patterns cut as we wished required a substantial amount of time.

The housing is two nested boxes. One supports the electronics and holds them in position so they function properly; the Arduino was mounted close to the surface because the sound sensor is embedded in it. The other box covers the inside and creates a water ripple on the surface to reflect our concept of Wishwell.

Tiny ML: Edge Impulse

Unlike the other software we used, connecting Edge Impulse to the Arduino was not intuitive, for two reasons. First, we were unfamiliar with installing software through the CLI; second, the Arduino gave no feedback, leaving us unsure whether anything had uploaded successfully. Following instructions in this environment without fully understanding what was happening made the whole process feel spooky. The camera sensor was more complex than the sound classifier. We were mainly trying to capture a single word within a sentence, which made the model hard to train.

When identifying keywords and training the model in Edge Impulse, a number of challenges and limitations cropped up that affected the reliability and resilience of our model. As a second direction, we explored non-verbal audio inputs, experimenting with a water glass xylophone and training on its pitches, which resulted in a very accurate model. Ultimately, we discarded this exploration because it did not align with our initial narrative. We decided to detect keywords because it was important for participants to explicitly provide inputs with semantic meaning, which the underlying logic of the system required and which also let us frame the interaction through a familiar point of reference. For the sake of a working proof-of-concept demo, we constrained the project and trained the model on two keywords, "purpose" and "job", as well as noise samples.

ChatGPT

We also tried generative AI tools, ChatGPT and the OpenAI Playground, to understand them and explore what they could offer our project. In some ways they were more advanced than we expected (fewer errors, quick responses); in others, less so (not as conversational as humans). For example, they could answer and summarize information-based questions very well; however, the experience didn’t feel interactive. The human always initiates the question, and the machines could not lead the conversation the way a person would. On the other hand, it was incredible to see them show personalities and give different answers to the same question. We both asked, “Do you believe in god?” and the two machines answered completely differently. One gave an informative answer about the concept of god; the other said that it believes in god and that this comes from its family religion. A machine cannot have a family. Is it possible for AI to lie? Otherwise, which family was the AI referring to? It was interesting to explore. However, ChatGPT was hard to integrate with our output, an MP3 player, and we found that it would not provide the sort of ambiguity central to our narrative and provocation, so we ultimately decided to leave it aside for this project.

Putting it all together

We spent each week learning and understanding the various technologies. Coupling these separate components seemed challenging, but deployment itself was not much hassle. However, a data overflow error that occurred while the program was running took some time to debug and forced us to revisit each component. It felt contradictory: Edge Impulse required an enormous amount of data to achieve accuracy, yet this made it hard for the Arduino to run its code. We tried switching the audio processing block from MFCC to MFE, and also tried changing the Arduino library.

Since we had decided to split up and delegate work towards the end, we came together to connect the fabricated housing and electronics with the trained model. We also changed each sound to a single tone: 345 Hz, 984 Hz, or 1820 Hz. The 1820 Hz tone was not only painful to listen to but also failed to create any pattern with the salt. To allow enough time for the pattern to form and to let people enjoy the movement, each sound lasts about 9 seconds.
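A single-frequency tone of this kind can be synthesized as plain PCM samples, as in the sketch below. This is an illustration under stated assumptions rather than the method we used: the `makeTone` helper, the 44.1 kHz sample rate, and the 0.8 amplitude are all hypothetical choices, and the samples would still need to be written to an audio file the player accepts before being copied to it.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Assumed sample rate; the project text does not state one.
constexpr int kSampleRateHz = 44100;

// Builds `seconds` of a sine tone at `frequencyHz` as signed 16-bit samples.
// `amplitude` is a fraction of full scale (0.0 to 1.0).
std::vector<int16_t> makeTone(double frequencyHz, double seconds,
                              double amplitude = 0.8) {
  assert(frequencyHz > 0.0 && seconds > 0.0);
  assert(amplitude >= 0.0 && amplitude <= 1.0);
  const double kTwoPi = 6.283185307179586;
  const std::size_t count =
      static_cast<std::size_t>(seconds * kSampleRateHz);
  std::vector<int16_t> samples(count);
  for (std::size_t n = 0; n < count; ++n) {
    // Phase advances by 2*pi*f/sampleRate per sample.
    const double phase = kTwoPi * frequencyHz * static_cast<double>(n) /
                         kSampleRateHz;
    samples[n] = static_cast<int16_t>(
        std::lround(amplitude * 32767.0 * std::sin(phase)));
  }
  return samples;
}
```

For example, `makeTone(345.0, 9.0)` yields nine seconds of the 345 Hz tone. Keeping the amplitude below full scale leaves headroom so the transducer is not driven into clipping.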

Open Questions and Next Steps

We were quite limited in the number of key terms we were able to provide samples for within the scope of this project, which left out a range of queries that participants would presumably have wanted to submit as inputs. The challenge of recognizing a word used within a conversational phrase, as opposed to distinguishing between isolated "wake" words, surfaced immediately. For a proof-of-concept demo, however, this was acceptable, and we settled on the words “job” and “purpose” to train our model on. Another limitation stemmed from the diversity of voices and contexts the model was trained on; we attempted to include as many noise samples as possible and to record samples from a range of individuals and settings. Training with noise samples that better match the context of use would improve reliability.

Revisiting the central question

We began this project by exploring a variety of interest areas and questions around the theme of machine intelligences, data collection, and predictive machine outcomes. From the outset, we wanted to question not only the assumptions and beliefs embedded in technology and material culture, but also the deterministic influence and constraints set by the stories we tell ourselves. In particular, we homed in on the current representation and perception of machine outcomes as entirely objective and inherently dependable. How do devices and machine intelligences present themselves? If we blindly believe and follow, do they become the ultimate deterministic, authoritative source? What other ways of enacting and bringing our desired futures into being can technology mediate?

Reflection

We spent quite a bit of time defining goals for this project and refining the concept itself, and while this meant that the implementation had to come together fairly quickly, it seemed to have paid off. Overall, critical reception was quite supportive and evocative, and encounters unfolded in the ways we had hoped. It also seemed to be effective as a catalyst for reflection and provocation, with most of the discussions centered around potential tangents for further exploration and poking at how this object-oriented ritual might take on a different feeling, tone, or purpose depending on the context in which it is encountered, or perhaps the physical form it takes.

We tried to create a ritual, not an object. We found that a distinctive characteristic of tasseography is that it requires a process, and that this process creates a personal experience. During the demonstration, the process also allowed us to learn about the users. Even though instructions were provided, everyone interacted slightly differently. For example, we didn’t expect users to stir the salt, but they did so while thinking about their desire. Some tried to read their fortune while the salt was vibrating; others, treating the vibration as part of the process, read it only after the vibration had stopped.

The current concept focused on creating a spooky experience that criticizes the current use of technology. The next step would be to make it more useful to users and to create other interesting experiences and messages. We wonder how this approach would bring up more intriguing questions from users.

Lastly, we really wanted to create something for participants to take with them. We experimented with ink methods but didn’t have enough time to make it happen. This is something we could revisit and explore further.

Quotes from live demo

"Really successful in a way that you made it feel like a ritual- thought of this as an experience end-to-end even though there were points that weren't 'needed', contributed to ritual, embed my own meaning to it and interpret it."

"Interaction between me and this machine is interesting, feels pretty intimate, if I come back I would get something different."

Attribution and References

In order of reference

Real Prediction Machines. James Auger and Jimmy Loizeau. (2015)

Believe It Yourself. Automato.farm. (2018)

For Centuries, People Have Searched For Answers In The Bottom Of A Tea Cup. NPR. (2015)

Gaver, W. W., Beaver, J., & Benford, S. (2003, April). Ambiguity as a resource for design. In Proceedings of the SIGCHI conference on Human factors in computing systems (pp. 233-240).

#include "Arduino.h"

#include "DFRobotDFPlayerMini.h"

//mp3

DFRobotDFPlayerMini myDFPlayer;

void printDetail(uint8_t type, int value);

// If your target is limited in memory remove this macro to save 10K RAM

#define EIDSP_QUANTIZE_FILTERBANK 0

/**

* Define the number of slices per model window. E.g. a model window of 1000 ms

* with slices per model window set to 4. Results in a slice size of 250 ms.

* For more info: https://docs.edgeimpulse.com/docs/continuous-audio-sampling

*/

#define EI_CLASSIFIER_SLICES_PER_MODEL_WINDOW 4

/*

** NOTE: If you run into TFLite arena allocation issue.

**

** This may be due to dynamic memory fragmentation.

** Try defining "-DEI_CLASSIFIER_ALLOCATION_STATIC" in boards.local.txt (create

** if it doesn't exist) and copy this file to

** `<ARDUINO_CORE_INSTALL_PATH>/arduino/hardware/<mbed_core>/<core_version>/`.

**

** See

** (https://support.arduino.cc/hc/en-us/articles/360012076960-Where-are-the-installed-cores-located-)

** to find where Arduino installs cores on your machine.

**

** If the problem persists then there's not enough memory for this model and application.

*/

/* Includes ---------------------------------------------------------------- */

#include <PDM.h>

#include <Tasseography-3_inferencing.h>

/** Audio buffers, pointers and selectors */

typedef struct {

signed short *buffers[2];

unsigned char buf_select;

unsigned char buf_ready;

unsigned int buf_count;

unsigned int n_samples;

} inference_t;

static inference_t inference;

static bool record_ready = false;

static signed short *sampleBuffer;

static bool debug_nn = false; // Set this to true to see e.g. features generated from the raw signal

static int print_results = -(EI_CLASSIFIER_SLICES_PER_MODEL_WINDOW);

/**

* @brief Arduino setup function

*/

void setup()

{

// mp3

Serial1.begin(9600);

if (!myDFPlayer.begin(Serial1)) { // Use hardware Serial1 to communicate with the mp3 player.

while(true){

delay(0); // Code to compatible with ESP8266 watch dog.

}

}

myDFPlayer.volume(30); //Set volume value. From 0 to 30

myDFPlayer.play(1); //Play the first mp3

// put your setup code here, to run once:

Serial.begin(115200);

// comment out the below line to cancel the wait for USB connection (needed for native USB)

while (!Serial);

Serial.println("Edge Impulse Inferencing Demo");

// summary of inferencing settings (from model_metadata.h)

ei_printf("Inferencing settings:\n");

ei_printf("\tInterval: %.2f ms.\n", (float)EI_CLASSIFIER_INTERVAL_MS);

ei_printf("\tFrame size: %d\n", EI_CLASSIFIER_DSP_INPUT_FRAME_SIZE);

ei_printf("\tSample length: %d ms.\n", EI_CLASSIFIER_RAW_SAMPLE_COUNT / 16);

ei_printf("\tNo. of classes: %d\n", sizeof(ei_classifier_inferencing_categories) /

sizeof(ei_classifier_inferencing_categories[0]));

run_classifier_init();

if (microphone_inference_start(EI_CLASSIFIER_SLICE_SIZE) == false) {

ei_printf("ERR: Could not allocate audio buffer (size %d), this could be due to the window length of your model\r\n", EI_CLASSIFIER_RAW_SAMPLE_COUNT);

return;

}

}

/**

* @brief Arduino main function. Runs the inferencing loop.

*/

void loop()

{

bool m = microphone_inference_record();

if (!m) {

ei_printf("ERR: Failed to record audio...\n");

return;

}

signal_t signal;

signal.total_length = EI_CLASSIFIER_SLICE_SIZE;

signal.get_data = &microphone_audio_signal_get_data;

ei_impulse_result_t result = {0};

EI_IMPULSE_ERROR r = run_classifier_continuous(&signal, &result, debug_nn);

if (r != EI_IMPULSE_OK) {

ei_printf("ERR: Failed to run classifier (%d)\n", r);

return;

}

if (++print_results >= (EI_CLASSIFIER_SLICES_PER_MODEL_WINDOW)) {

// print the predictions

ei_printf("Predictions ");

ei_printf("(DSP: %d ms., Classification: %d ms., Anomaly: %d ms.)",

result.timing.dsp, result.timing.classification, result.timing.anomaly);

ei_printf(": \n");

for (size_t ix = 0; ix < EI_CLASSIFIER_LABEL_COUNT; ix++) {

ei_printf(" %s: %.5f\n", result.classification[ix].label,

result.classification[ix].value);

}

#if EI_CLASSIFIER_HAS_ANOMALY == 1

ei_printf(" anomaly score: %.3f\n", result.anomaly);

#endif

print_results = 0;

}

//LED

//Job - red

if( result.classification[0].value > 0.7 ){

digitalWrite( LEDR, LOW );

digitalWrite( LEDG, HIGH );

digitalWrite( LEDB, HIGH );

myDFPlayer.play(2);

}

//noise - green

if( result.classification[1].value > 0.7 ){

digitalWrite( LEDR, HIGH );

digitalWrite( LEDG, LOW );

digitalWrite( LEDB, HIGH );

}

//purpose - blue

if( result.classification[2].value > 0.7 ){

digitalWrite( LEDR, HIGH );

digitalWrite( LEDG, HIGH );

digitalWrite( LEDB, LOW );

//delay(3000);

myDFPlayer.play(1);

//delay(100);

}

}

/**

* @brief PDM buffer full callback

* Get data and call audio thread callback

*/

static void pdm_data_ready_inference_callback(void)

{

int bytesAvailable = PDM.available();

// read into the sample buffer

int bytesRead = PDM.read((char *)&sampleBuffer[0], bytesAvailable);

if (record_ready == true) {

for (int i = 0; i < (bytesRead >> 1); i++) {

inference.buffers[inference.buf_select][inference.buf_count++] = sampleBuffer[i];

if (inference.buf_count >= inference.n_samples) {

inference.buf_select ^= 1;

inference.buf_count = 0;

inference.buf_ready = 1;

}

}

}

}

/**

* @brief Init inferencing struct and setup/start PDM

*

* @param[in] n_samples The n samples

*

* @return { description_of_the_return_value }

*/

static bool microphone_inference_start(uint32_t n_samples)

{

inference.buffers[0] = (signed short *)malloc(n_samples * sizeof(signed short));

if (inference.buffers[0] == NULL) {

return false;

}

inference.buffers[1] = (signed short *)malloc(n_samples * sizeof(signed short));

if (inference.buffers[1] == NULL) {

free(inference.buffers[0]);

return false;

}

sampleBuffer = (signed short *)malloc((n_samples >> 1) * sizeof(signed short));

if (sampleBuffer == NULL) {

free(inference.buffers[0]);

free(inference.buffers[1]);

return false;

}

inference.buf_select = 0;

inference.buf_count = 0;

inference.n_samples = n_samples;

inference.buf_ready = 0;

// configure the data receive callback

PDM.onReceive(&pdm_data_ready_inference_callback);

PDM.setBufferSize((n_samples >> 1) * sizeof(int16_t));

// initialize PDM with:

// - one channel (mono mode)

// - a 16 kHz sample rate

if (!PDM.begin(1, EI_CLASSIFIER_FREQUENCY)) {

ei_printf("Failed to start PDM!");

}

// set the gain, defaults to 20

PDM.setGain(127);

record_ready = true;

return true;

}

/**

* @brief Wait on new data

*

* @return True when finished

*/

static bool microphone_inference_record(void)

{

bool ret = true;

if (inference.buf_ready == 1) {

ei_printf(

"Error sample buffer overrun. Decrease the number of slices per model window "

"(EI_CLASSIFIER_SLICES_PER_MODEL_WINDOW)\n");

ret = false;

}

while (inference.buf_ready == 0) {

delay(1);

}

inference.buf_ready = 0;

return ret;

}

/**

* Get raw audio signal data

*/

static int microphone_audio_signal_get_data(size_t offset, size_t length, float *out_ptr)

{

numpy::int16_to_float(&inference.buffers[inference.buf_select ^ 1][offset], out_ptr, length);

return 0;

}

/**

* @brief Stop PDM and release buffers

*/

static void microphone_inference_end(void)

{

PDM.end();

free(inference.buffers[0]);

free(inference.buffers[1]);

free(sampleBuffer);

}

#if !defined(EI_CLASSIFIER_SENSOR) || EI_CLASSIFIER_SENSOR != EI_CLASSIFIER_SENSOR_MICROPHONE

#error "Invalid model for current sensor."

#endif