For those looking for a ‘soulmate’, we offer a ‘palm-mate’ instead! Palmistry is the belief that your future is encoded in the palm of your hand. The length, depth and curvature of the crease lines in your hand tell of your prospects in love, money and career. Simply place your palm on the sensor and receive a printed ‘oracle’ symbol indicating your fortune. Find someone else with the same symbol to find your compatible ‘palm-mate’!

Created: March 31st, 2023

Intent

We are interested in exploring the concept of divination and fortune-telling, especially its power to influence relationships between individuals, or even change how people view each other (e.g. matchmaking and compatibility tests). The fact that people willingly believe (to varying degrees) the results of such tests is closely related to System 1 thinking in psychology: fast, intuitive, automatic judgement. In a fortune-telling context, small, happy coincidences are interpreted as signs from unseen forces directing people’s fate.

Another relevant concept we explore with this project is illusory correlation. This is an idea in psychology that describes the phenomenon where people perceive relationships between variables when no relationships actually exist. It is a reminder that a perceived pattern is not evidence of a real relationship, let alone of causation.

We find similar themes in the widespread use (and overuse) of machine learning in today’s society to power decision-making, be it policy, economic, or even personal. As people aim for efficiency and neglect proper model design and data curation, they blindly follow machine predictions in a manner similar to superstition, or exaggerate the capabilities of large language models like GPT-4, which still produce groundless or biased statements.

Context

We were inspired by projects like BIY™- Believe it Yourself, which raised questions around the issue of arbitrary data collection practices and training of machine learning models, as the technology becomes more easily accessible.

Another inspiration comes from the fact that large language models like GPT-3 or GPT-4 are “high capability, but low alignment”: they are highly capable of generating human-like text, but less reliable at producing output that is consistent with what people actually want. However, the reaction from the general public often reveals blind trust in, or overhype of, such models, overshadowing their important limitations. We wanted to question this as well by introducing superstition (compatibility tests and fortune generation).

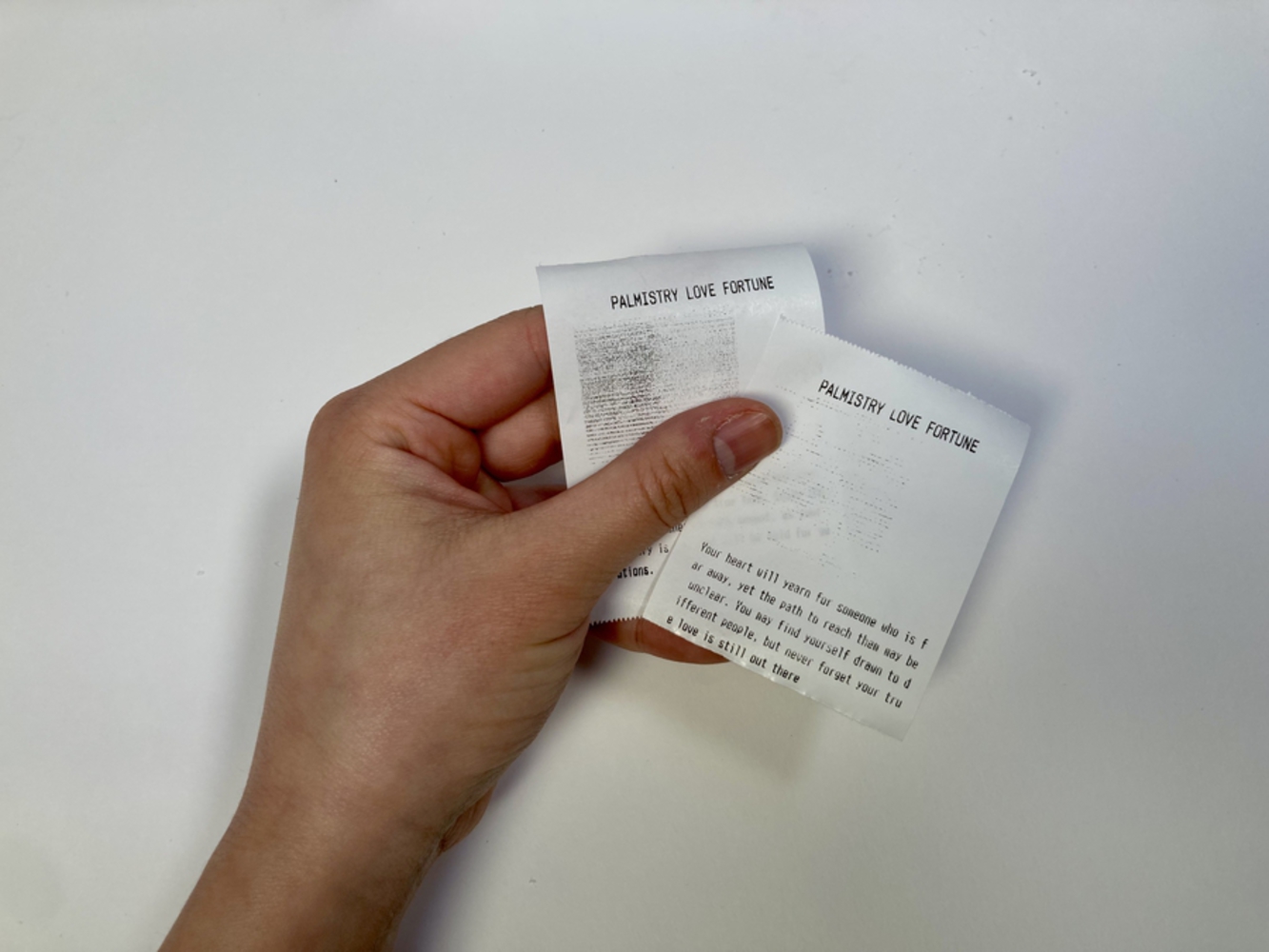

For our project, we decided to create a ‘palm reader’ that reads the length of the user’s ‘love line’ (i.e. the uppermost palm crease line) and prints an ‘oracle symbol’ and a short piece of text describing the user’s love fortune. With the fortune slip in hand, the user then looks for their ‘palm-mate’: someone who has received the same ‘oracle symbol’.

(System Diagram)

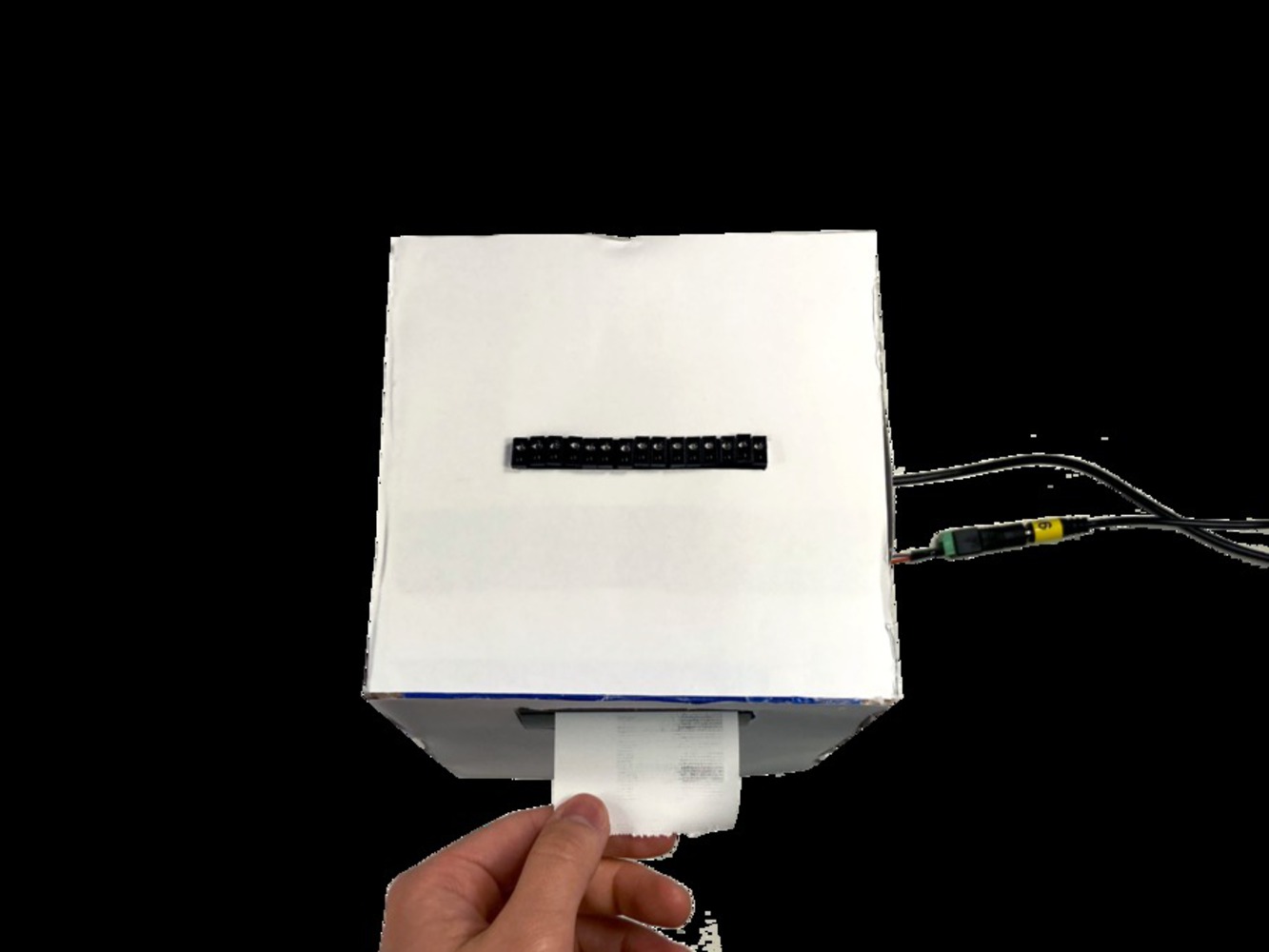

(Final Working Prototype)

On the top, we have a line of IR proximity sensors which read values from the person’s palm. Each IR sensor is soldered onto a protoboard, which connects it to a power line, a ground line, one channel of one of two CD4051 multiplexers, and a pair of resistors (220Ω and 5.6kΩ). On the side is the thermal printer which prints the ‘love fortune’. This is connected to the Arduino Nano 33 BLE and an external 9V power supply. Due to the physical complexity of the project, the physical housing was split into two compartments. The IR sensors, the protoboard and the thermal printer sit in a smaller top level. All of the wires thread through a hole down to a lower compartment that houses two breadboards: one for the multiplexers, the other for the Arduino BLE.

(Before Assembly)

(Internal housing structure)

In order to read all 15 IR sensors, we had to split them up and connect them to two CD4051 multiplexers. In the code this required writing our own ‘readMux’ function that iterates through each channel of the multiplexers and compiles the values from both multiplexers into a single array.
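The channel addressing described above can be sketched in portable C++. This is a minimal illustration, not the project's code: `selectBits` and `sensorIndex` are hypothetical helper names, and the layout (8 sensors on the first multiplexer, 7 on the second) is assumed from the sketch further down.

```cpp
#include <array>

// Hypothetical helper: levels for the three CD4051 select pins {S0, S1, S2}
// that address a given channel (0-7). Bit 0 of the channel number drives S0,
// bit 1 drives S1 and bit 2 drives S2.
std::array<int, 3> selectBits(int channel) {
    return { (channel >> 0) & 1, (channel >> 1) & 1, (channel >> 2) & 1 };
}

// Hypothetical helper: position in the 15-element reading array for a given
// multiplexer (0 or 1) and channel (0-7). Assumes 8 sensors on the first
// multiplexer and 7 on the second; returns -1 for the unused last channel.
int sensorIndex(int mux, int channel) {
    const int perMux = 8;
    const int nSensors = 15;
    int idx = mux * perMux + channel;
    return idx < nSensors ? idx : -1;
}
```

On the Arduino, the three values returned by `selectBits` would be written to the select pins with `digitalWrite` before calling `analogRead` on the multiplexer's common pin. Computing the bits from the channel number gives the same values as a hand-written 8 x 3 lookup table.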

Additionally, we use ML twice. First, we pass the array of values from the IR sensors into an Edge Impulse model that returns a prediction of the length of the current user’s palm line (‘short’, ‘medium’ or ‘long’), which we map to a reading of ‘bad’, ‘ambiguous’ or ‘good’. Depending on the reading, one of 8 ‘oracle images’ is chosen. The reading is then sent as a prompt to OpenAI via the OpenAI API, asking it to generate a ‘good love fortune’, ‘ambiguous love fortune’ or ‘bad love fortune’ within 100 words. This is printed below the image.
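The mapping from classifier label to fortune request might look like the following in portable C++. It is a sketch under assumptions: the function names are hypothetical, the prompt wording in `buildPrompt` is invented for illustration (the write-up only says the request asks for a good, ambiguous or bad love fortune within 100 words), and the `ASK_OPENAI` line format is taken from the sketch below.

```cpp
#include <cstring>
#include <string>

// Map the palm-line label to the fortune category.
// strcmp compares the characters; using == on two const char* values would
// only compare their addresses.
const char* predictionFor(const char* label) {
    if (std::strcmp(label, "long") == 0)   return "good";
    if (std::strcmp(label, "medium") == 0) return "ambiguous";
    if (std::strcmp(label, "short") == 0)  return "bad";
    return "ambiguous";  // assumption: unknown labels fall back to the neutral reading
}

// Line printed over serial so the program on the other end knows to call the API.
std::string serialRequest(const std::string& prediction) {
    return "ASK_OPENAI " + prediction;
}

// Hypothetical prompt wording, for illustration only.
std::string buildPrompt(const std::string& prediction) {
    return "Write a " + prediction + " love fortune in 100 words or fewer.";
}
```

For example, a ‘long’ label gives the prediction "good", the serial line "ASK_OPENAI good", and a prompt asking for a good love fortune.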

Bill of Materials:

Working Code:

#include <Adafruit_Thermal.h>
#include <Arduino_APDS9960.h>
#include <project_2_rme_inferencing.h>
#include "edge-impulse-sdk/classifier/ei_classifier_types.h"
#include <string.h> // strcmp, used to compare classifier labels

// IMAGE BITMAPS
#include "symbol1.h"
#include "symbol2.h"
#include "symbol3.h"
#include "symbol4.h"
#include "symbol5.h"
#include "symbol6.h"
#include "symbol7.h"
#include "symbol8.h"

#define BAUDRATE 9600

/**************************************************************************
  This is a library for several Adafruit displays based on ST77* drivers.

  The 1.47" TFT breakout
  ----> https://www.adafruit.com/product/5393

  Check out the links above for our tutorials and wiring diagrams.
  These displays use SPI to communicate, 4 or 5 pins are required to
  interface (RST is optional).

  Adafruit invests time and resources providing this open source code,
  please support Adafruit and open-source hardware by purchasing
  products from Adafruit!

  Written by Limor Fried/Ladyada for Adafruit Industries.
  MIT license, all text above must be included in any redistribution
 **************************************************************************/

#include <Adafruit_GFX.h> // Core graphics library
#include <SPI.h>

// EDGE IMPULSE VARS
const char* model_name = "model";
ei_impulse_result_t result;

Adafruit_Thermal printer(&Serial1);

float p = 3.1415926;

const int nSensors = 15;
const int nSensorsPerMux = 8;
int sensorVals[nSensors];

// Mux control (select) pins: s0-s2 for the first CD4051, t0-t2 for the second
int s0 = D12;
int s1 = D11;
int s2 = D10;
int t0 = D9;
int t1 = D8;
int t2 = D7;

// Mux common "Z" pins
const int comPin1 = A0;
const int comPin2 = A1;

int total = 0;
int avg;
float avg_sensor_val = 0.0;
int line_length_band = 0; // For determining what kind of text prompt to write to user
float max_thresh = 80;    // For checking if hand too far away

bool beginPrint = false;
bool isPrinting = false;

String goodSymbols[3] = {
  "symbol1",
  "symbol2",
  "symbol3"
};

String neutralSymbols[3] = {
  "symbol6",
  "symbol7",
  "symbol8"
};

String badSymbols[2] = {
  "symbol4",
  "symbol5"
};

String prediction;

/////////////// SETUP FUNCTION /////////////

void setup(void) {
  // SENSORS: configure the mux select pins and start on channel 0
  pinMode(s0, OUTPUT);
  pinMode(s1, OUTPUT);
  pinMode(s2, OUTPUT);
  pinMode(t0, OUTPUT);
  pinMode(t1, OUTPUT);
  pinMode(t2, OUTPUT);

  digitalWrite(s0, LOW);
  digitalWrite(s1, LOW);
  digitalWrite(s2, LOW);
  digitalWrite(t0, LOW);
  digitalWrite(t1, LOW);
  digitalWrite(t2, LOW);

  // THERMAL PRINTER
  Serial.begin(9600);   // USB serial (debug output and fortune text)
  Serial1.begin(9600);  // hardware serial to the printer
  printer.begin();
  Serial.println(F("Initialized"));

  run_classifier_init();
  delay(2000);
}

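// Callback that hands the latest sensor readings to the Edge Impulse classifier.
// As a side effect it updates avg_sensor_val and line_length_band.
int test_get_data(size_t offset, size_t length, float* out_ptr) {
  // Reference samples recorded during training (sanity check only; not used below)
  float training_short[] = {100., 121., 153., 139., 175., 144., 164., 144., 96., 116., 137., 127., 143., 128., 137.};
  float training_medium[] = {103., 122., 169., 146., 191., 160., 172., 150., 121., 128., 145., 136., 151., 145., 148.};
  float training_long[] = {86., 99., 146., 121., 179., 136., 165., 128., 139., 132., 157., 287., 164., 113., 754.};

  // Copy only the slice the classifier asked for, without reading past the array
  for (size_t i = 0; i < length && offset + i < (size_t)nSensors; i++) {
    out_ptr[i] = float(sensorVals[offset + i]); // / 1024.0 * 100 + 100);
  }

  // Average over all sensors, used for the "hand too far away" check
  avg_sensor_val = 0.0;
  for (int i = 0; i < nSensors; i++) {
    avg_sensor_val += float(sensorVals[i]);
  }
  avg_sensor_val /= nSensors;

  // Update line length band (0, 1 or 2)
  line_length_band = max(1, min(ceil(avg_sensor_val / (1024.0 / 4) * 3), 3)) - 1;
  return 0;
}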

/////////////// LOOP FUNCTION /////////////

void loop() {
  readMux(sensorVals);
  printSensorVals(sensorVals);
  delay(200);

  // Edge Impulse inference
  ei::signal_t signal;
  signal.total_length = nSensors;
  signal.get_data = &test_get_data;

  int err = run_classifier(&signal, &result, false); // false: do not print debug info

  if (err != EI_IMPULSE_OK) {
    Serial.println("Classification failed");
  } else {
    Serial.println(avg_sensor_val);

    if (avg_sensor_val < max_thresh) {
      // NO READING
      Serial.println("Hand is too far away; please move closer to sensors.");
      beginPrint = false;
    } else {
      // PRINT FORTUNE
      // Note: the label is looked up by line_length_band, not by the highest classifier score
      const char* fortune = result.classification[line_length_band].label;
      Serial.println(fortune);

      if (!isPrinting) { // if there is no ongoing print, then start new print
        // Compare label text with strcmp; == on const char* compares addresses, not characters
        if (strcmp(fortune, "short") == 0) {
          prediction = "bad";
        } else if (strcmp(fortune, "medium") == 0) {
          prediction = "ambiguous";
        } else if (strcmp(fortune, "long") == 0) {
          prediction = "good";
        }

        // Ask whatever is listening on the serial port to request a fortune, then wait for the reply
        Serial.println(String("ASK_OPENAI") + String(" ") + prediction);
        delay(20000);

        String imgPrint = chooseImage(fortune);
        isPrinting = true;
        Serial.println("Chosen Image");
        printImage(imgPrint);
        //isPrinting = false;

        printer.justify('L');
        printer.println();

        if (Serial.available()) { // print fortune generated by OpenAI
          String incoming = Serial.readString();
          Serial.println("RECEIVED DATA =>");
          Serial.println(incoming); // echo the received text
          printer.setSize('S');     // small text size
          printReceivedText(incoming);
        }
      }
    }
  }
}

// Print the received fortune text on the thermal printer
void printReceivedText(String text) {
  printer.setFont('B');
  printer.println(text);
}

//////////SENSOR/////////////////

// Read all 15 IR sensors through the two CD4051 multiplexers into sensorVals
void readMux(int sensorVals[]) {
  int controlPin1[] = {s0, s1, s2};
  int controlPin2[] = {t0, t1, t2};

  // Select-pin levels {S0, S1, S2} for each channel
  int muxChannel[8][3] = {
    {0,0,0}, //channel 0
    {1,0,0}, //channel 1
    {0,1,0}, //channel 2
    {1,1,0}, //channel 3
    {0,0,1}, //channel 4
    {1,0,1}, //channel 5
    {0,1,1}, //channel 6
    {1,1,1}, //channel 7
  };

  // loop through every channel
  for (int j = 0; j < nSensorsPerMux; j++) {
    // set the 3 select pins on both multiplexers
    for (int i = 0; i < 3; i++) {
      digitalWrite(controlPin1[i], muxChannel[j][i]);
      digitalWrite(controlPin2[i], muxChannel[j][i]);
    }

    // read the value at each Z pin
    int val1 = analogRead(comPin1);
    int val2 = analogRead(comPin2);

    // populate array
    sensorVals[j] = val1;
    total += val1;

    // the second multiplexer only carries 7 sensors, so skip its last channel
    if (j != nSensorsPerMux - 1) {
      sensorVals[j + nSensorsPerMux] = val2;
      total += val2;
    }
  }
}

// Print the current readings as a comma-separated line for debugging
void printSensorVals(int sensorVals[]) {
  for (int i = 0; i < nSensors; i++) {
    Serial.print(sensorVals[i]); Serial.print(", ");
  }
  Serial.println("\n");
}

//////////PRINTER/////////////////

// algorithm to choose image to print
String chooseImage(const char* fortune) {
  String img;

  // strcmp compares the label text; == on const char* would compare addresses.
  // random(min, max) excludes max, so max must equal the size of each symbol array.
  if (strcmp(fortune, "long") == 0) {
    // choose a symbol from 'good' symbols
    long imgN = random(0, 3);
    img = goodSymbols[imgN];
    Serial.print("long symbol"); Serial.println(imgN);
  } else if (strcmp(fortune, "medium") == 0) {
    // choose a symbol from 'neutral' symbols
    long imgN = random(0, 3);
    img = neutralSymbols[imgN];
    Serial.print("medium symbol"); Serial.println(imgN);
  } else {
    // choose a symbol from 'bad' symbols
    long imgN = random(0, 2);
    img = badSymbols[imgN];
    Serial.print("short symbol"); Serial.println(imgN);
  }

  return img;
}

// Print the header and the bitmap that matches the chosen symbol name
void printImage(String img) {
  Serial.print("sending img " + img);

  printer.justify('C');
  printer.boldOn();
  printer.println("PALMISTRY LOVE FORTUNE");
  printer.boldOff();

  if (img == "symbol1") {
    printer.printBitmap(256, 256, heart1);
  } else if (img == "symbol2") {
    printer.printBitmap(256, 256, heart2);
  } else if (img == "symbol3") {
    printer.printBitmap(256, 256, heart);
  } else if (img == "symbol4") {
    printer.printBitmap(256, 256, knot1);
  } else if (img == "symbol5") {
    printer.printBitmap(256, 256, knot);
  } else if (img == "symbol6") {
    printer.printBitmap(256, 256, arrow1);
  } else if (img == "symbol7") {
    printer.printBitmap(256, 256, arrow2);
  } else if (img == "symbol8") {
    printer.printBitmap(256, 256, neutral);
  }
}

Process

Phase 1 - Collecting Data / Deciding Sensors for Input

Due to the small size and scope of the built-in sensor in the Arduino Nano 33 BLE Sense, we decided to use external sensors to widen the range of data collection.

We started off trying to use TCS34725 Color Sensors. While we were able to follow the pinout and successfully interface with one sensor, we ran into difficulties when trying to connect more. Not only was each sensor quite big, but each also had the same fixed I2C address, meaning all the data was coming in under ‘one sensor’. In order to communicate with each sensor individually, we would need to use a multiplexer.

After meeting with Robert Zacharias, we took his advice and switched from using the larger color sensors to smaller individual IR sensors with the intention of building a 'primitive camera' out of an array of them. As we had little experience in the realm of physical computing, we took this work session to figure out:

- how to read the circuit diagram - in particular using ground and power lines and finding the right resistors

- how to interface with the IR sensors via Arduino and checking how different colors/ materials led to different values within the Serial Monitor

We started out by wiring a single IR sensor to the Arduino to see the difference in values read between dark and light colors, following the IDeATe circuit diagram and tutorial.

Source: https://courses.ideate.cmu.edu/60-223/s2023/tutorials/IR-proximity-sensor

After testing each sensor to ensure it was functioning, we arranged 15 IR sensors in a line and soldered them to a protoboard, so we could record more data in order to determine the ‘length’ of the palm line. This part required a lot of discipline and diligence, not only because it was Clover’s first time soldering, but also because of the large quantity and density of components. Each IR sensor had to be connected to 2 separate resistors, 1 Arduino/Multiplexer analog pin, a power source, and a ground line, totalling 5 * 15 = 75 unique connections overall. To help legibility, Clover kept the two resistors (220Ω on the left, 5.6kΩ on the right) and the power and ground connections of each sensor on separate sides. The wires were color coded accordingly:

- Red (ground)

- Black (power)

- Blue (Multiplexer/ Arduino Analog pin)

Additionally, wire length was kept short to prevent tangles. This entire process took around 4 hours in the Digital Fabrication lab, as we took our time double checking at each step, and checking each sensor after it was soldered. We wanted to make sure we weren’t making major mistakes as we were aware that they would be difficult and even more time consuming to fix.

The wire endings on the other side were also trimmed down to ensure no accidental connections or shorting.

One difficulty we encountered came after we had soldered all the sensors: each of the 15 sensors needed its own analog pin, but there were only 8 analog pins on the Arduino Nano. This meant we would need multiplexers to increase the number of analog inputs in order to read the data from each sensor. In another meeting, Robert Zacharias pointed us in the direction of the CD4051, which acts as an 8-channel expander. Since we had 15 sensors, we would need two. Here, the main difficulty was understanding how to select each of the 8 channels via an array of 3 ints (one value per select pin) in the code.

After asking a friend in Robotics to explain, we wrote a custom function called readMux (i.e. void readMux) built around a nested loop. The outer loop iterates through the 8 channels; for each channel, an inner loop of length 3 sets the select pins via digitalWrite. The function then reads the value at each multiplexer's common (Z) pin and stores it in the array at the position of the corresponding sensor.

//loop through every channel
for (int j = 0; j < nSensorsPerMux; j++){
  //set the 3 select pins on both multiplexers
  for(int i = 0; i < 3; i ++){
    digitalWrite(controlPin1[i], muxChannel[j][i]);
    digitalWrite(controlPin2[i], muxChannel[j][i]);
  }

  //read the value at the Z pin
  int val1 = analogRead(comPin1);
  int val2 = analogRead(comPin2);

  //populate array
  sensorVals[j] = val1;
  total += val1;

  if (j != nSensorsPerMux -1){
    sensorVals[j + nSensorsPerMux] = val2;
    total += val2;
  }
}

Phase 2 - Thermal Printer (output)

While Yvonne had gotten the thermal printer to print previously, Clover could not get it to physically print. After struggling for several hours over Zoom with Yvonne, we decided that Clover would go in early before the demo and try to get it fixed in person. It turned out that the TX and RX cables were switched and the paper was stuck. Fortunately, these were easily fixed.

Phase 3 - ML model

This part was more complicated than either of us had expected, due to our lack of experience in interfacing with ML using Arduino.

Firstly, we couldn't import the Edge Impulse model into the Arduino. The zip file that Yvonne downloaded wasn't the correct one so when we tried to add it as a library to the Arduino file, it resulted in errors such as: "Error 13 INTERNAL: Library install failed: archive not valid: multiple files found in zip file top level"

Secondly, we only had 20 samples with which to train our model. This meant that the predictions our model was giving were not very accurate, but we decided this uncertainty would only add to the charm of the project as well as the idea of the ‘supernatural’. After looking at the examples given in the Edge Impulse Examples library and asking Zhenfang (TA) and other friends to explain parts that we did not understand, we were able to parse the data so that it printed the length of the palm line when a hand was in range and the message "Hand is too far away; please move closer to sensors." when a hand was not.
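The in-range check can be sketched in portable C++ as follows. This is a minimal illustration with hypothetical function names; the threshold of 80 and the band formula are copied from the sketch above, where they appear as `max_thresh` and `line_length_band`.

```cpp
#include <cmath>
#include <cstddef>

// Same value as max_thresh in the sketch: below this average, no hand is detected.
const float kMinAverage = 80.0f;

// Mean of the raw sensor readings.
float average(const int* vals, std::size_t n) {
    float sum = 0.0f;
    for (std::size_t i = 0; i < n; i++) {
        sum += static_cast<float>(vals[i]);
    }
    return n > 0 ? sum / static_cast<float>(n) : 0.0f;
}

// True when the average reading is high enough to count as a hand over the sensors.
bool handInRange(float avg) {
    return avg >= kMinAverage;
}

// Band 0, 1 or 2 from the average reading, using the sketch's formula:
// ceil(avg / 256 * 3), clamped to 1..3, minus 1.
int lengthBand(float avg) {
    int band = static_cast<int>(std::ceil(avg / (1024.0f / 4.0f) * 3.0f));
    if (band < 1) band = 1;
    if (band > 3) band = 3;
    return band - 1;
}
```

With this formula, averages up to about 85 fall in band 0, averages up to about 170 in band 1, and anything higher in band 2.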

Based on the length, we were able to allocate one of the following predictions: ‘good’, ‘ambiguous’, ‘bad’. Yvonne worked on interfacing with the OpenAI API to get it to generate a text fortune based on the ML prediction, while Clover worked on a function that would choose one of the symbols (associated with that prediction) to print on the thermal printer.

//////////PRINTER/////////////////

//algorithm to choose image to print
String chooseImage(const char* fortune){
  String img;

  // strcmp compares the label text; == on const char* would compare addresses.
  // random(min, max) excludes max, so max must equal the size of each symbol array.
  if (strcmp(fortune, "long") == 0){
    //choose a symbol from 'good' symbols
    long imgN = random(0, 3);
    img = goodSymbols[imgN];
    Serial.print("long symbol"); Serial.println(imgN);
  } else if (strcmp(fortune, "medium") == 0){
    //choose a symbol from 'neutral' symbols
    long imgN = random(0, 3);
    img = neutralSymbols[imgN];
    Serial.print("medium symbol"); Serial.println(imgN);
  } else {
    //choose a symbol from 'bad' symbols
    long imgN = random(0, 2);
    img = badSymbols[imgN];
    Serial.print("short symbol"); Serial.println(imgN);
  }

  return img;
}

Open Questions and Next Steps:

There are many different areas of palmistry we have yet to explore. For instance, there are the other palm lines, such as the Head Line, Life Line, Marriage Line, Fate Line and Sun Line. There are also broader areas of the palm which are each associated with a planet, which could tie into some concepts from astrology.

Furthermore, Golan Levin proposed a really interesting idea we would love to follow up on which gave a ‘Missed Connections’ - type spin to the project. He really liked the idea of a ‘palm-mate’/ soulmate and that despite the information given by the fortune teller, there was no guarantee you would find someone with a matching symbol. He proposed that we should install the machine at a bus stop next to a bulletin board. The machine would print 2 copies: one for the user to keep and carry around, the other for the user to pin up on the bulletin board, waiting for its future matching ‘partner’. This narrative of ‘fate’ ties really well into the core concept of palmistry which believes that our fates are encoded in our hands. Moreover, it was ‘spooky’ because there were so many uncertainties - you never really knew if there was ever going to be a ‘palm-mate’, whether they would ever respond to your posting, or whether they actually were ‘compatible’ with you, yet you couldn’t help but hope.

Reflection

This project ended up being significantly more complicated than either of us had anticipated. Despite our best efforts to start early and remain open minded, we ran into major complications at almost every step of the way, resulting in us being unable to present a working demo at the live demo session. Soldering the physical computing units was a new workflow, and communicating with multiplexers required learning new coding concepts. Integrating the ML model into the Arduino code was something very new and unfamiliar to us, and required a lot of cross-referencing examples and seeking external help.

Furthermore, Yvonne had to leave for a conference the week before the demo, meaning that all work on site could only be done by Clover. As testing the code required uploading and running it on the Arduino, and the wiring had to be done physically in person, our progress was capped by the limitations in manpower. However, once she returned, we were able to divide the tasks up and progress much faster.

Overall, this project was very difficult and took a lot of time to complete. While it may not have been the resounding success at the live demo that we wanted, we did manage to complete it in all its necessary complexity, and learned a lot of different skills along the way.
